Beyond The Turing Test: Assessing the Intelligence of Modern Machines

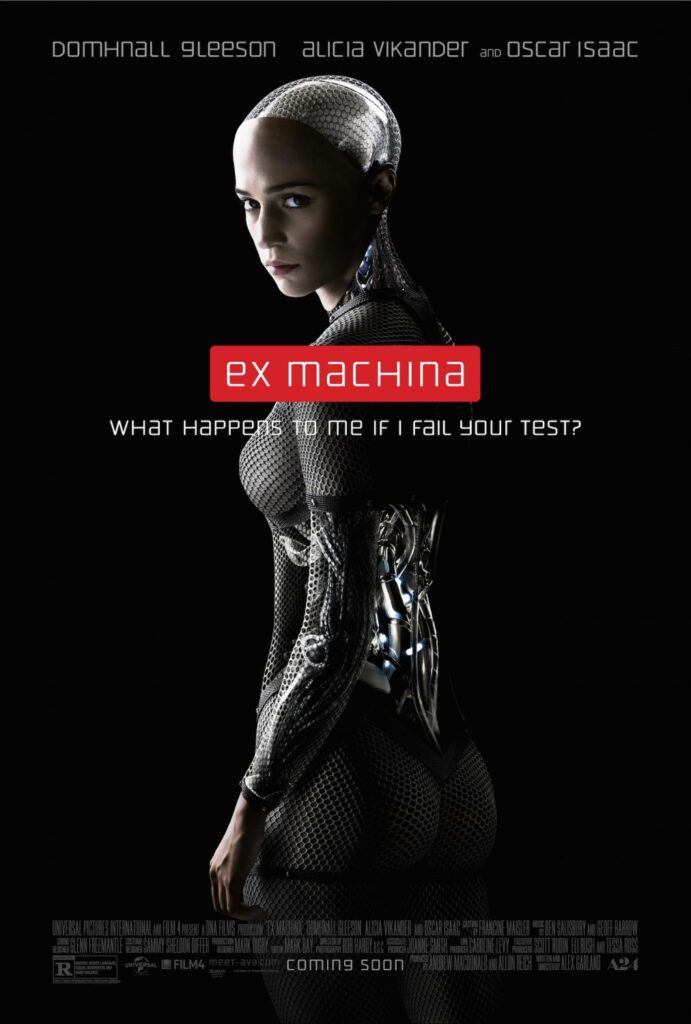

In the sci-fi psychological thriller Ex Machina, a mind-bending series of interactions with an advanced artificial general intelligence drives the film’s protagonist, a young software developer, into a fit of existential doubt, pulling at his skin and harming himself to make sure that he is actually human. The premise of the film, an A.I. android manipulating the emotions and relationships of the people working on it in order to serve its own innate desires, seemed more fiction than science when it premiered in 2014. Since then, however, at least two different A.I. models have been described as “sentient” by people working on them, and chatbots like ChatGPT have shown enough natural language ability to fool roughly a third of participants in one of the largest Turing Tests ever conducted.

But does a machine’s ability to pass a Turing Test even matter now?

A.I. researchers have said for years that the seminal test Alan Turing proposed in 1950 to determine the “intelligence” of machines is now obsolete. Yet it is misleading to think of the Turing Test as a true measure of intelligence in the way we might understand intelligence today. Turing designed the pass/fail test to determine whether a machine could generate human language well enough to fool a human into thinking they were chatting with another human via text.

A.I. models have never come closer to passing the traditional Turing Test than today’s pre-trained transformer models. Still, it’s up for debate whether any single chatbot has truly passed it. Earlier this year, more than 1.5 million people took part in a social Turing test called “Human or Not,” hosted by Israel-based AI21 Labs. After a month and more than 10 million conversations, the researchers reported that participants failed to correctly identify whether they were chatting with a human or a bot 32% of the time.

Researchers are now considering new frameworks for gauging machine intelligence, grounded in psychology, neurology and cognitive development.

Should Human-Like Machines Be Our Goal? The Case for Artificial Capable Intelligence

In 2020, Rohit Prasad, now SVP and Head Scientist for Artificial General Intelligence at Amazon, wrote an opinion piece arguing that the Turing Test is a misleading milestone.

“Instead of obsessing about making AIs indistinguishable from humans, our ambition should be building AIs that augment human intelligence and improve our daily lives in a way that is equitable and inclusive.”

Prasad argued that developers should instead strive to build the most capable human assistant possible: one that can process complex requests, plot a course through multiple tasks, and execute those tasks to achieve the intended outcome. Three years later, this is still a tall order, even for highly integrated A.I. systems like Google’s Bard. Current models are great at predicting what should come next in a sequence, but they still cannot interpret and plan for implicit circumstances as effectively as even a human toddler. Today’s models still need a lot of hand-holding.

The goal for Prasad is to build an A.I. that exhibits human-like attributes of intelligence such as common sense and self-supervision, combined with a machine’s efficiency in searching information, memory recall and executing tasks.

Prasad says that A.I. should be “ambient.” It should anticipate your needs, accomplish tasks and fade into the background when not needed.

Under this view of A.I., the relative intelligence of the machine is essentially moot, as long as it possesses the capability to understand complex requests, account for contingencies, plan tasks methodically, and execute them.

Mustafa Suleyman, co-founder of DeepMind, the A.I. lab Google acquired in 2014, shares a similar view on how we should think about machine intelligence. In his book, The Coming Wave: Technology, Power, and the Twenty-first Century’s Greatest Dilemma, Suleyman argues that our goal should be to build “Artificial Capable Intelligence” (ACI), and that we need a modernized Turing Test.

Suleyman says that the classic Turing Test “doesn’t tell us anything about what the system can do or understand, anything about whether it has established complex inner monologues or can engage in planning over abstract time horizons, which is key to human intelligence.”

Suleyman’s version of the test challenges an A.I. to turn a seed investment of $100,000 into $1 million. To do so, the bot must research an e-commerce business opportunity, generate blueprints for a product, find a manufacturer on a site like Alibaba, and then sell the item on a digital marketplace.

Suleyman thinks that A.I. will be able to pass such a test within two to three years. It helps that the internet is rife with information about how to make money (especially through digital commerce) that A.I. can use as training data. Elsewhere in the A.I. research world, however, scientists are looking deeper into machine intelligence to find out whether A.I. can use human-like cognitive skills to learn things it has never been trained on.

Testing Abstract Reasoning in A.I.

As the old adage goes, “Give a man a fish, and you feed him for a day; teach a man to fish, and you feed him for a lifetime.” For the most part, A.I. models have been given their fish (trillions of data points, or “tokens,” scraped from every corner of the internet), but an alternative framework challenges A.I. to catch the fish itself.

In July, researchers led by UCLA psychologist Taylor Webb published a study showing that GPT-3 performed better than a group of undergraduates on certain exercises designed to test the use of analogies to solve problems.

“Analogy is central to human reasoning,” Webb said. “We think of it as being one of the major things that any kind of machine intelligence would need to demonstrate.”

However, it wasn’t a runaway victory for GPT-3. While it scored well on some tests, it gave “absurd” answers on others.

One test was modeled on a task often used in child psychology. The subject is told a story about a magical genie that transfers gems from one bottle to another, and is then challenged to transfer gumballs from one bowl to another using posterboard and a cardboard tube. Using hints from the story, children can often pass the test easily. GPT-3 was less capable, suggesting solutions that were elaborate but physically impossible to execute, with unnecessary steps and no clear mechanism for actually transferring the gumballs.

The example hints at one of the biggest misconceptions about A.I.: mistaking memorization for intelligence.

When OpenAI announced the release of GPT-4, much was made of the model’s ability to pass a laundry list of rigorous academic tests, including the bar exam and medical licensing exams. The headlines stoked fear in the professional world, as white-collar workers imagined a world of A.I. doctors, biologists and lawyers. However, suspicions soon arose that the model passed the tests in large part because it was trained on the tests, or at least on similar questions with similar answers. Such training gives the A.I. the same benefit as a human with a photographic memory: it can process a question, quickly consult an expansive memory of everything it has ever learned, and present the most relevant response, without the need for any critical thinking.

Follow-up analyses showed that GPT-4 performed significantly worse on problems published after its 2021 training cutoff, suggesting that much of A.I.’s apparent “intelligence” is really its ability to surface information it has already seen. Experts call a model with this weakness, one that can only recognize patterns it has seen before, “brittle.”

So can A.I. learn in the way that we do, through abstract reasoning?

In 2019, Google A.I. researcher François Chollet published the framework for the “Abstraction and Reasoning Corpus” (ARC). The framework measures machine intelligence by a machine’s ability to accomplish unfamiliar tasks with limited prior knowledge. It is designed to rule out the possibility that signs of intelligence displayed by an A.I. are merely a product of its ability to consult its training data and reproduce previous solutions.

In 2020, Chollet launched the Abstraction and Reasoning Challenge, an open competition on Kaggle inviting A.I. builders to develop a model that could solve reasoning tasks it had never seen before, given only a handful of demonstrations to learn from.
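To make the task format concrete, here is a minimal Python sketch. ARC tasks are distributed as JSON files with a “train” list of demonstration input/output grid pairs and a “test” list of inputs, where each grid cell is an integer from 0 to 9 standing for a color. The task below is invented for illustration, and the solver is a deliberately naive, hypothetical approach, not anything taken from the competition: it searches a tiny hand-written library of grid transformations (the names `CANDIDATES` and `infer_rule` are our own) for one that agrees with every demonstration, then applies it to the test input.

```python
from typing import Callable, Optional

# ARC grids are lists of rows; each cell is an integer 0-9 representing a color.
Grid = list[list[int]]


def flip_horizontal(g: Grid) -> Grid:
    # Mirror each row left-to-right.
    return [list(reversed(row)) for row in g]


def flip_vertical(g: Grid) -> Grid:
    # Reverse the order of the rows (top-to-bottom mirror).
    return [list(row) for row in reversed(g)]


def transpose(g: Grid) -> Grid:
    # Swap rows and columns.
    return [list(col) for col in zip(*g)]


def rotate_90(g: Grid) -> Grid:
    # Clockwise rotation: transpose, then mirror each row.
    return flip_horizontal(transpose(g))


# Hypothetical, deliberately tiny library of candidate rules. Real ARC tasks
# need far richer rules than anything a fixed list like this can cover.
CANDIDATES: dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [list(row) for row in g],
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
    "rotate_90": rotate_90,
}


def infer_rule(train_pairs: list[dict]) -> Optional[str]:
    """Return the first candidate rule consistent with every demonstration."""
    for name, fn in CANDIDATES.items():
        if all(fn(pair["input"]) == pair["output"] for pair in train_pairs):
            return name
    return None  # no known rule explains the demonstrations


# An invented ARC-style task: the hidden rule mirrors each row.
task = {
    "train": [
        {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
        {"input": [[3, 4], [0, 5]], "output": [[4, 3], [5, 0]]},
    ],
    "test": [{"input": [[7, 0, 8], [0, 9, 0]]}],
}

rule = infer_rule(task["train"])
prediction = CANDIDATES[rule](task["test"][0]["input"]) if rule else None
print(rule, prediction)
```

Run as-is, the sketch selects `flip_horizontal` from two demonstrations and predicts `[[8, 0, 7], [0, 9, 0]]` for the test grid. Its limitation is the point: the solver can only pick from rules its author anticipated, while ARC is built to reward a system that can come up with a genuinely new rule from a few examples.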

The outcome of the challenge could best be described as a partial success. The vast majority of submissions did not solve a single task, and even the best-performing model was wrong roughly 80% of the time.

Should ARC become the new standard benchmark for machine intelligence? The fact that no model has solved all of the challenge’s problem sets indicates that the ARC benchmark is still an aspirational goal, and Chollet has said that he wouldn’t expect an A.I. to achieve human-level aptitude for “many years.”

Moving Goalposts for Measuring Machine Intelligence

The goalposts for machine intelligence have clearly moved since the arrival of ChatGPT made the traditional Turing Test seem quaint. Yet we are still left with the questions of what constitutes an “intelligent” machine, whether intelligence inherently means human-like, and whether an intelligent, human-like machine is a worthy goal.

Those like Amazon’s Rohit Prasad and DeepMind co-founder Mustafa Suleyman believe that intelligent machines shouldn’t be our goal; rather, we should primarily strive to build capable machines.

Meanwhile, researchers like François Chollet and Taylor Webb see machine intelligence as a prerequisite to building capable machines.

Yet another school of thought leapfrogs all of these contemporary discussions, arguing that a modern Turing Test should consider whether an A.I. possesses a psyche, desires, and free will, in order to prevent an A.I. apocalypse. Would a machine need to be intelligent in order to have a psyche?

The breakdown of the traditional Turing Test has left us with countless more questions, and it’s likely that the study of machine intelligence going forward will increasingly resemble our research into the mysteries of our biological black box: the human brain.