Ep21. A Message About A.I. from the Future: Navigate the Legal & Ethical Landscape of Generative Artificial Intelligence with Karen Palmer

On the latest episode of Creativity Squared, hear from the artist who traveled back in time to show us the dystopia that we should try to avoid.

Karen Palmer is the Storyteller from the Future. She’s an award-winning international artist and TED Speaker working at the forefront of immersive storytelling, futurism, and tech.

She creates immersive film experiences that watch you back using artificial intelligence and facial recognition.

“I say that I’ve come back to enable people to survive what is to come through the power of storytelling by enabling participants to experience the future today through my immersive experiences.”

Karen Palmer

Karen has been the opening keynote speaker at AT&T Shape Conference, MIT, Wired for Wonder Festival Australia, and TEDx Australia at the Sydney Opera House. She’s exhibited around the world, including NYC Armory Arts Week, Museum of Modern Art Peru, PHI Centre Montreal, and more. Her art has also been featured in publications like Wired, Fast Company, and The Guardian, where they hailed her work as “leapfrogging over VR.”

Her latest project is Consensus Gentium, which had its world debut at SXSW this past March, where Karen also won the SXSW 2023 Film & TV Jury Award in the XR Experience Category.

In the episode, Karen discusses her evolution from traditional filmmaker to a creator of immersive experiences that explore social justice in the context of technology. She talks about the need to democratize A.I., how you can experience the future today through her “reality simulators,” and how she uses immersive art to make you conscious of your subconscious behavior.

How to Rewire Your Brain

Over the past 15 years, Karen’s pushed the envelope of interactive and experiential filmmaking with the goal of helping audiences become more conscious of their subconscious behavior. She cut her teeth in traditional filmmaking until 2008 when she left her job to work on projects that leveraged technology to enable viewer-led experiences.

An early project in that space, SYNCSELF 2, combined film, tech, and Karen’s passion for parkour to recreate the experience of conquering fear. Participants donned an EEG headset that measured their level of focus via electrical signals from the brain while watching a film shot from the perspective of a parkour artist as if the viewer themself was leaping from buildings and flipping through the air.

She was just starting to explore the possibilities of A.I. in the mid-2010s when social unrest boiled over in the U.S. in response to police killings of unarmed Black men. Watching news coverage of protests and media caricatures of burning cities, Karen says she was inspired to develop a new narrative.

“I was like, don’t people know what’s happening when they are representing people of color and Black people in the media as being looters? And where were the role models to represent what’s happening and put this in the arts?”

Karen Palmer

In 2016, Karen released RIOT, an emotionally responsive, live-action film, with 3D sound, which uses facial recognition and A.I. technology to create the experience of navigating through a dangerous riot.

RIOT is like a choose-your-own-adventure, except that you determine the direction of the narrative through your subconscious emotional reaction to what’s on screen rather than through conscious decision-making. Likewise, the characters on screen react to the participant’s emotional state, opening up various narrative branches of the film depending on whether the participant is perceived to be angry or fearful by an A.I. that’s trained on facial expressions.
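The branching described above can be pictured with a minimal sketch. This is not Karen’s bespoke system: the scene names, emotion labels, and the `next_scene` and `play` helpers are all hypothetical, and the emotion scores are assumed to arrive from some separate facial-expression model.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """One segment of film plus the branches that can follow it (hypothetical)."""
    name: str
    # Maps a perceived emotion label to the name of the next scene.
    branches: dict = field(default_factory=dict)


def next_scene(scene, emotion_scores, default="calm"):
    """Return the next scene name for the strongest perceived emotion."""
    if not scene.branches:
        return None  # an ending: nothing left to branch to
    top = max(emotion_scores, key=emotion_scores.get) if emotion_scores else default
    # Fall back to the default branch if this emotion has no branch of its own.
    return scene.branches.get(top, scene.branches.get(default))


# Illustrative story graph; the real film's structure is not described in detail.
STORY = {
    "street": Scene("street", {"calm": "dialogue", "anger": "escalation", "fear": "retreat"}),
    "dialogue": Scene("dialogue"),
    "escalation": Scene("escalation"),
    "retreat": Scene("retreat"),
}


def play(story, start, readings):
    """Walk the story, consuming one emotion reading per junction."""
    path = [start]
    current = story[start]
    for scores in readings:
        upcoming = next_scene(current, scores)
        if upcoming is None:
            break
        path.append(upcoming)
        current = story[upcoming]
    return path
```

In this sketch, a participant who reads as mostly angry at the first junction is routed to the escalation scene, while a calm reading leads to dialogue, mirroring how the film’s characters respond to the viewer.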

Karen shares the story of a woman who participated in RIOT while it was on display during Armory Arts Week in New York City. On her first attempt, the participant’s fearful response to the film prevented her from progressing beyond the first part of the narrative. She disclosed to Karen that she struggled with alcohol addiction and wanted to go through RIOT again in order to better understand the triggers of her substance abuse. On subsequent attempts, the participant was able to proactively manage her subconscious fear response and progress further through the narrative.

“With RIOT, it makes people conscious of their subconscious behavior. So it makes you aware of how your emotions affect the narrative of the film. And in the exact same way, your emotions can affect the narrative of your life.”

Karen Palmer

Karen’s later projects take this emotional feedback loop even further while also exploring the issues of A.I. bias, social justice, and technology being wielded against vulnerable communities.

Safer Cities or Surveillance State?

Karen says that she’s become familiar with A.I. technology as a result of her efforts to authentically replicate the Black community’s experiences living under surveillance.

One of her main concerns is bias in A.I. and the risk that institutional racism is just going to be automated.

She cites Detroit as an example, calling it the “most surveilled city in America,” where a law enforcement initiative, Project Greenlight, sold to the community as a crime deterrent has resulted in false arrests of multiple Black people.

Project Greenlight began in 2016 as a public-private partnership between police and local businesses, enabling officers to monitor over 500 cameras installed in certain areas around the city. The initiative launched as a response to a spate of robberies, with officials telling residents that their communities would be safer under surveillance by police.

A 2023 U.S. Justice Department study cast serious doubt on the program’s effectiveness in deterring and solving crimes. Detroit officials have disputed that study, but the same officials have also had to defend lawsuits from Black people who say the program contributed to their false imprisonment.

Most recently, a pregnant Black woman became at least the third person to be falsely arrested after Detroit PD’s facial recognition software misidentified her as a suspect in a carjacking. The woman was arrested at her home while getting her kids ready for school and made to wait 11 hours in jail before her release.

In 2020, a Black father, Robert Williams, was arrested in front of his two kids and jailed for 30 hours after being misidentified as a robbery suspect based on a facial recognition scan of grainy surveillance footage recorded at night. In response to Williams’ lawsuit against Detroit PD, officials claimed that the false arrest was mainly a result of shoddy police work, highlighting how technology can exacerbate human bias and lend credibility to it.

“Often, these datasets really reflect the biases in society.”

Karen Palmer

Themes like this inspired Karen to create Perception iO, an experiential film similar to RIOT, with branching narratives determined by the participant’s reactions to what happens on screen. In this experience, however, the participant assumes the point of view of a police officer handling a hostile situation with both white and Black protagonists. Similar to RIOT, an A.I.-enabled camera monitors the participant’s facial cues to determine their emotional state and alter the course of the narrative accordingly.

Perception iO helps participants recognize their own bias in real-time and develop strategies to manage their emotional responses.

Dystopia Survival Training with A.I.

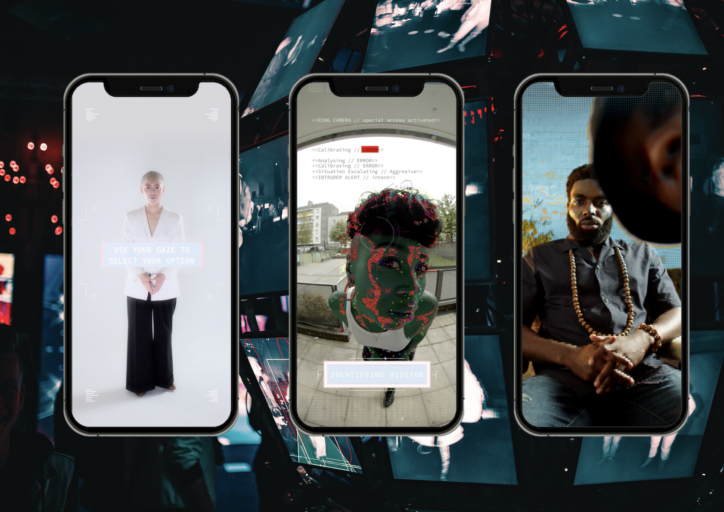

While there’s plenty of dystopian nightmare fuel in our present timeline, Karen’s latest project transports participants to the near future, where A.I. surveillance is the norm and citizens are constantly monitored for compliance.

Consensus Gentium is Latin for “if everyone believes it, it must be true.” The narrative of the interactive experience challenges viewers to navigate a world in which multiple pandemics and advanced global warming became the catalysts for the government to curtail citizens’ mobility. While the participant is on a quest to help their sick grandma, an A.I.-enabled camera analyzes over 50 cues on their face to determine their emotional state, which determines the participant’s level of compliance and, ultimately, how much mobility they’re able to achieve.
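As a rough illustration of how facial cues might become an expression label, here is a small sketch. It is an assumption for illustration only and not the project’s actual method: the landmark names, the single mouth-based cue, and the threshold are all hypothetical, and landmark coordinates are assumed to be image pixels with y increasing downward.

```python
def mouth_curve(landmarks):
    """Score how far the mouth corners sit above (+) or below (-) the mouth center.

    `landmarks` maps hypothetical point names to (x, y) pixel coordinates.
    The score is normalized by mouth width so it does not depend on face size.
    """
    left = landmarks["mouth_left"]
    right = landmarks["mouth_right"]
    center = landmarks["mouth_center"]
    width = abs(right[0] - left[0])
    if width == 0:
        return 0.0
    corner_y = (left[1] + right[1]) / 2
    # Image y grows downward, so corners above the center give a positive score.
    return (center[1] - corner_y) / width


def label_expression(landmarks, threshold=0.05):
    """Turn the single mouth cue into a coarse label (illustrative threshold)."""
    curve = mouth_curve(landmarks)
    if curve > threshold:
        return "smile"
    if curve < -threshold:
        return "frown"
    return "neutral"
```

A real system would combine many such cues, which is presumably why the experience tracks dozens of points rather than the mouth alone.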

“The film watches you back, and the narrative branches in real-time. And the ending you get is dependent on your representation of your beliefs and how you interact with society.”

Karen Palmer

The smartphone is a central piece of the experience, like the modern version of the living room television in George Orwell’s 1984, monitoring citizens in their most private, intimate moments. In this way, the same technology that delights users with entertainment and connectivity is also the primary tool for the government to justify oppressing those users.

The experience is an exploration of the potential threats posed to civil liberties by powerful surveillance technology, as well as the consequences of civil society opting in to being monitored in ways they may not fully understand.

That’s why Karen wants to put Consensus Gentium in the “hands of the masses and democratize the arts.” She’s planning to release the experience as a smartphone app in 2024, so that the public has the opportunity to glimpse a future that could be possible and also avoidable.

“The future is not something that happens to us, but it’s something that we create together.”

Karen Palmer

Through her efforts to help audiences see the future today, Karen considers herself part of an “invisible movement” of artists and thought leaders trying to demystify the transformative technologies of our time through art. Instead of watching their civil liberties be gradually eroded by the relentless march of technology and consolidation of power, Karen wants to empower people to understand the narratives unfolding in real time, understand their role in the narrative, and ultimately, their role in the future.

Karen’s Upcoming Events

- London Film Festival Margaret Morning Panel Storyteller from the Future Sept

- London Film Festival Immersive Experience October

- Kaohsiung Film Festival XR Dreamland Immersive Experience October

- New Dimensions Lab South Africa Storyteller from the Future October

- Geneva International Film Festival Immersive Experience November

- Immersive Tech Week Rotterdam Storyteller from the Future curated special event with Alex McDowell

Links Mentioned in this Podcast

- Consensus Gentium

- https://karenpalmer.uk

- Follow Karen on Instagram

- Follow Karen on X (formerly Twitter)

- TEDxSydney

- Riot AI

- New York Times — Project Greenlight

- Fox 2 — Project Greenlight

- Washington Post — Facial Recognition Article

- Detroit Free Press — Facial Recognition Article

- Perception iO

Continue the Conversation

Thank you, Karen, for being our guest on Creativity Squared.

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency that understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 150 arts organizations, projects, and independent artists.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

Karen Palmer: I mean, AI is just a really cool tool and gen AI and ChatGPT. That’s all fun, but what are we really using it for? And how are we going to kind of make our lives more easier or automated, but, you know, make us more conscious or make us more fulfilled or make us more empowered or make us more spiritual or, you know, whatever is some, which is more humane is what is the future that I’m focused on in terms of a movement.

Helen Todd: Karen Palmer is a storyteller from the future. She’s an award-winning international artist and TED speaker who’s at the forefront of immersive storytelling, futurism, and tech. She creates immersive film experiences that watch you back using artificial intelligence and facial recognition. Karen has been the opening keynote speaker at AT&T Shape Conference, MIT, Wired for Wonder Festival Australia, and TEDx Australia at the Sydney Opera House.

She’s exhibited around the world, including NYC Armory Arts Week, Museum of Modern Art Peru, PHI Centre Montreal, and more. Her art has also been featured in publications like Wired, Fast Company, and The Guardian, where they hailed her work as “leapfrogging over VR.” Her latest project is Consensus Gentium, which had its world debut at South by Southwest this past March, where Karen also won the SXSW 2023 Film and TV Jury Award in the XR Experience category.

Consensus Gentium is a powerful exploration of the implications of AI technology experienced on a mobile device. Consensus Gentium taps into the intimacy and authenticity of your smartphone features to create a realistic experience. Set in a near future, it’s an emotionally responsive film that integrates cutting edge facial detection and AI to transport audiences on a unique quest to discover what could happen if we succumb to unchecked surveillance. Karen and I first met at SXSW this past March, and then saw each other again at Ars Electronica this September — and I couldn’t be more excited to have her come from the future to speak to the Creativity Squared community.

In this episode, you’ll learn more about Karen and her latest project, Consensus Gentium, in addition to her social justice approach to her work that explores AI bias and unchecked surveillance. You’ll understand why she sees it necessary to democratize AI, how you can experience the future today through her reality simulators, and how she uses immersive art to make you conscious of your subconscious behavior. Don’t miss this glimpse into the future. Enjoy.

Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox, on YouTube, and on your preferred podcast platform.

Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space. The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Karen, it is so good to have you on the podcast.

Karen: It’s great to be here finally, absolutely. Having met you at SXSW and Ars Electronica. It’s really great to finally sit down with you. Yeah.

Helen: And I should also say congratulations because you won what was it? The XR immersive award at South by Southwest.

Helen: Is that what it was?

Karen: The XR immersive experience award at South by Southwest.

Helen: Oh, fantastic. Well, I’m so excited to have you on the show and to dive in. And I guess before we get started too much into the deep end, for those who are meeting you for the first time can you introduce yourself?

Karen: Yes. So my name is Karen Palmer. I’m the storyteller from the future. And I say that I’ve come back to enable people to survive what is to come through the power of storytelling by enabling participants to experience the future today through my immersive experiences.

Helen: That’s amazing. And can you tell us a little bit about your origin story and how you got into this type of storytelling and your interest in AI specifically too?

Karen: So, my background is that I was a traditional filmmaker and director and then 20, earlier than even 2010, like maybe 2008, I was like, I think the future is tech and film, not just film. I thought people want to interact with film in the future and be participants. And I started to work with film and tech.

Karen: And my first kind of beefy commission was part of the Cultural Olympiad in the UK as part of the lead up to the Olympics, where I did some art and tech and parkour film working with young people in the community. And then from there I moved into EEG head sensors and tech and then 2015, 2016, AI and film.

Helen: With AI, you really come at it from a social justice perspective and your themes kind of explore surveillance. So can you tell us like how specifically, what interests you about that and the importance of coming at it from a social justice angle from your perspective?

Karen: Yeah, so, I’m… how to say this. I’m proper artist in terms of I used to make more kind of self indulgent pieces. I used to be a free runner and I wanted to replicate the experience of transformation, of being, of doing parkour and free running to enable you to move through fear. And I started to make films with EEG headsets and then when the Ferguson riots came up, I was like really profoundly impacted.

Karen: And was like, look, there’s so don’t people know what’s happening when they are representing people of color and black people in the media as being looters. And, you know, where are the role models to kind of represent what’s happening and put this in the arts and put this in story. And that inspired me to make Riot in 2016, where I put people in the middle of a riot.

Karen: And then I went further down. I started to work with AI. And then my next piece is I started to work in AI, I started to look at the societal implications of AI and surveillance and social justice. So, me kind of going into this, wanting to authentically replicate the experience for my black community sent me down the rabbit hole of really understanding the technology to make sure that the storytelling was not just authentic, but cutting edge and very real and exploring some of the themes which weren’t in the mainstream conversation at that time, which was around bias and AI which I started to look at 2016, 2017.

Helen: And one thing that I find really interesting about Riot and your current piece is that you’re really trying to, through art, rewire people’s unconscious bias. And so can you walk us through how that works? Because I, I find that fascinating and amazing that you’re using art, your art to do that.

Karen: Wow. Okay. So I’m going to go back a step. I’m going to try and summarize it. But with Riot it makes people conscious of their subconscious behavior. So, it makes you aware of how your emotions affect the narrative of the film and in the exact same way your emotions can affect the narrative of your life.

Karen: So, you come into contact with characters in a film that’s like life size and the AI in a webcam will watch you back. So, if you respond to a character with calm, then the narrative will be calm. However, if you respond with anger, then the aggression will escalate in the scene. So, it’s showing you the implications of your actions.

Karen: And I’ll just tell you a very short story is that when I was doing, sharing the experience in New York as a part of Armory Arts Week, a young lady came and did the experience and she, it’s three levels. And she only got to the second level because it kind of ended because she got fearful. So, she got a fearful narrative.

Karen: And I said to her, do you want to have another go? And because it’s going to make you conscious of your subconscious behavior and she goes I want to do that actually because I’m looking at what’s triggering my addictions and I was like, wow, that’s a bit heavy. What do you mean? She goes, I’m in AA. I’ll tell you because I’m never going to see you again and I want to know what’s triggering me.

Karen: So I said, “okay, why don’t you have another go? And now you’re aware of how that you are kind of your breathing is changing as you get nervous. And you’re getting, you’re overthinking, try and do it again, but just breathe and relax.” And she did it a second time and this time she got to the second level and she couldn’t go any further.

Karen: And I said to her, “how was that?” And she said, “well, I couldn’t go any further, but I became conscious of the fact that I could move through my innate programming, my personality, my triggers, my trauma in a way.” And I said, “do you think you could do it again?” She goes, “yeah, because it’s like a muscle and I’m conscious of it.

Karen: It’s something I could activate consciously.” So, I’m telling you that story to say that it’s, I’m specifically looking at bias, but it’s something about making you more self aware and a narrative changes to show you the end result of your emotional reaction and by having that fed back to you, that you could then go, “Oh, you know what? I don’t like this narrative. I’m going to change it and change my emotion.” And then through doing that, you are reprogramming your brain, the neural pathways in your brain, because you’re making different decisions and different emotions.

Helen: That’s amazing. And I think one thing maybe to just take a step back too is to talk about the tech, because I think you use something similar for Riot and your latest one, Consensus Gentium, how people are being reactive is that the screens watch the people and that’s how it’s responsive.

Helen: So, can you kind of explain how your projects are the tech that it’s like using tech to watch people and that’s how it’s interactive.

Karen: Yes. So, I use artificial intelligence through a webcam and all my AI is totally bespoke and this was like before Gen AI and all the other AI software that’s out there and like AI has really blown up this year, but my current project, Consensus Gentium, we developed that and finished that in 2022, so everything’s totally bespoke. So, you watch a film and the webcam and the AI watches you and it monitors the different emotional points on your face or your different, there’s 52 different points on your face, which it’s measuring in the latest film.

Karen: So, if your mouth goes up and smiles, or if your face frowns, it’s measuring all those kinds of touch points the same way that your phone will measure your look at your face to check that’s you when you want to open it up. And then what happens is it feeds back that data to the narrative and there is a plethora of different narratives there and depending on your response and it will branch to a particular narrative in real time.

Karen: So, that different people, and it does that at three or four different junctions in the piece, and it has multiple endings. So, different people can have different films, and if you don’t like the narrative that is evolving, by changing how you feel or how you’re perceived then you can have a different result.

Helen: Man, that’s fascinating and I will also say I just did a demo and I shared this with you and we spoke, that there’s marketing and customer service tools that are using the same technology to read people’s emotions through their webcams after giving permission and then having customer service reps adjust.

Helen: So, like you were very on top of like these upcoming trends which are the tech is here and being used by marketers and customer service already, which I know one of the things is reality simulators of the future, but I feel like the future is now.

But, one of the things that you explore is AI bias and unchecked surveillance, which is a little bit different from a marketer asking permission to use the webcam. So, can you tell us about what concerns you with AI bias and unchecked surveillance? And then I want to hear about your current project too.

Karen: Yeah. So, a lot of the times we’re sold things in terms of the surveillance part, then the bias part I’ll talk about afterwards, which is sold to us as it’s something which is going to be safety. However, it’s often about security. So, if you have a ring camera on your door, there’s a clause in that where if there’s this kind of like national emergency, that the law enforcement can monopolize your feed and use that for their own purposes.

And when you click the Terms and Conditions, that’s what you’ve clicked into. And there’s so many other forms of technology, which, as I said, is sold to kind of protect us and make our life easier. But actually, it can be used to surveil us. An example is the Project Greenlight is the system which was developed in Detroit, which was sold to the residents as this is going to be helped with security because there were spates of robberies.

And what happened is that Detroit is now the most surveilled city in America because a system that was put in by the local council to help them with security is now a glorified surveillance system. And this leads into the bias, actually, that the very first case recorded of a Black man being misidentified by an algorithm or a facial recognition camera was in said same Detroit because the algorithm mistook Robert Williams, a Black man, for a different Black man because often these data sets really reflect the biases in society. So they had mislabeled, almost identified his face as a Black man with another face as a Black man.

So, often my big concern is that these institutionalized racisms are just going to be automated. So, the biggest kind of sector in the prison service of people who say that they’re innocent, even though they’re guilty, is Black men on death row, because they said they’ve been misidentified and with this first case, and there’s been another one as well recently with a Black woman, is that you can see how this injustice is just going to be automated.

And so these are the themes which I’m exploring in my work where Perception iO was a training tool for pretend for an imaginary police enforcement system where it was monitoring you and as a training set, as if you were law enforcement to monitor your bias and the algorithm data set would respond depending on your potential bias.

So, all my work is about simulating the future so that you can experience the future today.

Helen: It seems like surveillance is just so commonplace and that and like, even the younger generation is just so comfortable with it. And to a certain extent, I almost think it’s from all of the baby monitors, you know, that they’ve been surveilled by their parents.

And so it’s not a big leap that then these cameras enter everywhere else in our society. And that we shouldn’t just take it for safety or face value. So, let’s dive into your project, Consensus Gentium, because you’ve been working on it for two years, you had your world debut at South by Southwest, and it’s kind of dives more into these subjects.

So, tell us about your latest project.

Karen: Thank you. Thanks for getting the name right. So Consensus Gentium is Latin for, if everyone believes it, it must be true. So, it’s a kind of play on perception of society and what is truth in a way? And this experience enables you to experience a near future world where there’s been multiple pandemics and due to that and climate change, we as a society in the community as global citizens have limited mobility and in order to acquire increased mobility, we need to prove our compliance to the state and they will be monitoring us consciously and subconsciously through our phones.

And so the experience happens on a mobile device so that it can really have in this world where the film is interactive and responds to you get text messages and you have a certain objective to help your sick nana and you have to make conscious and subconscious decisions and then you will experience from the state will judge you to be compliant or dissident and you will see repercussions, whether there’s consequences for your actions, whether you decide to be a compliant citizen or not, through your tech, you’re, for example, asked to be auto-self surveilled so in order to be a good citizen, but that comes with a series of consequences.

So, you understand the implications of the impact of your decision on yourself and your community, and it’s not a judgment, it’s to show in a way that we’re all kind of a little bit screwed, in a way, in terms of looking at themes of liberty and digital with the lack of regulation, the lack of transparency, the lack of democracy, you know, all of these things are just coming down from the status quo and all of these institutionalized racism are also coming down with it.

So, yeah, I think I’ll stop because as you can see, I’m just talking. So yes, that’s as much as I give you for now and then you can ask me something else.

Helen: If I understand it correctly as Riot, where it’s a phone and I’ve seen it at South by Southwest, but I had to get to the airport and everyone was sitting in the chair.

So I didn’t actually get to experience it myself, unfortunately, but I have a lot of photos. But you sit in front of a phone and to do this, and then the phone watches you back. And that’s like one of the taglines to the film that watches you back, yeah. So, can you walk us through the experience of you’re sitting at the phone and then you’ve got to…

Karen: Well, before that I have a global citizen like sales representative that invites you into the experience because what you’re in is a living room with a future.

So, where people don’t really communicate, they just live on their mobile entertainment device. And, as you’re watching your phone watches you back. And you watch the film within this space. And the film branches consciously or subconsciously. You’re asked within the film to make a decision.

Like within the phone, the border control Digital Immigration person will ask you certain questions to see how compliant a citizen you are. And then it will branch, and then as it unfolds, you’ll understand the implications. So yeah, the film watches you back and the narrative branches in real time.

And that, and the ending you get is dependent on your.. your kind of a representation of your beliefs and your, and how you interact with society in a way.

Helen: It feels like something out of a Black Mirror episode that you’ve brought to life for people to experience.

Karen: Lots of people have said to me, this reminds me of Black Mirror. And I feel very chuffed because, you know, I’m an independent artist. I mean, this was commissioned by the BFI and the British Council and the Arts Council supported the South by Southwest trip. But, you know, I’m not Netflix. And when you think of, like Bandersnatch was interactive and it was like touching a button, and my piece is like your eye gaze and your facial detection, and it’s changing in real time.

Karen: So, and this is on a mobile where my previous works were just for this, for like museums and conferences and galleries. So, it’s a really big deal that I want to kind of put this work in the hands of the masses and democratize the arts. And at the moment it’s still in festivals, but the vision is that the first quarter of next year, like spring, we’ll be having it available on the app store for people to download.

Helen: That’s amazing. And all of the tech is already within our phones that enable this watching back too. Where do you see it going from here? Like you were already kind of ahead of the curve when seeing the potential, what are your other concerns from where you sit and where this could go?

Karen: Yeah, just as far as that only comment as well. That there’s a couple of really good documentaries like Coded Bias and The Great Hack, in particular, The Social Dilemma, I think…which is talking about how advertising and social media, that these kind of tools are used to kind of, exploit and manipulate us, right? So, this work is very much I’m one of the good guys or girls.

That I’m here to kind of, show you how we can use these same tools to empower us. So, in that kind of thread that I really feel that the gym of the future will be a gym of the mind, and that entertainment is not just about like when people have Netflix and chill, like they kind of switch on and tune out, is that you’re kind of gonna switch on and tune into yourself.

Because we’re living in some really massive times of uncertainty and great change. And we need to, I don’t really feel the point is about, you know, there’s a path for everybody, but it’s not just about, we’ve got to, you know, get the politicians to be more just or, you know, socially moral, but we kind of have to look at ourselves and become more self aware because it’s going to be up to us.

Like, the future’s in our hands. So, with me, I feel like immersive is such a powerful tool to impact people, particularly neurologically and emotionally, and to just leave it in the hands of capitalism or entertainment or advertising would be the worst thing in the whole world, you know, because it’s only going to get more and more disparate and desperate, with more and more money on the line.

And that we need to have, like, I have a social moral obligation. And that’s, I feel, there will, I actually feel there’s going to be a movement through the arts. And I kind of said that, I did a TED Talk in 2016 where I said I feel I’m part of an invisible movement, which is so exclusive, the people in there don’t even realize they’re in it.

And I feel that’s what’s going to happen, right? Even though I work in AI, I hardly talk about AI, I’m more talking about the impact of storytelling and the kind of way that we can be transformed as individuals and as society. So that’s what excites me. I mean, AI is just a really cool tool, and Gen AI, and ChatGPT, that’s all fun, but what are we really using it for?

And how are we going to kind of, not just make our lives easier or more automated, but, you know, make us more conscious, or make us more fulfilled, or make us more empowered, or make us more spiritual, or, you know, whatever it is, something which is more humane? That is the future that I’m focused on in terms of a movement.

Helen: I love that. The mission of this podcast is to envision a world where artists not only coexist with AI, but thrive, and that thriving is envisioning a world where the tech is centered around people. And I love that you’re doing that with your art. And in addition to people just becoming more aware and their unconscious becoming conscious…

You mentioned regulation from the outset and I know sometimes it can kind of feel disempowering that we’re going up against these really massive companies. I’m sure you’ve come across Palantir as well, which frankly terrifies me, what they’re doing in the U.S., but what do you see outside of the

Karen: criminal justice system?

Helen: Yeah, that’s Peter Thiel’s thing where he’s just super embedded in the government and on surveilling all types of different data or access to lots of data. But in addition to the individual awareness, what do you see is needed from, you know, regulatory or societal institutional change as well?

Karen: Wow. So there’s lots of people having lots of conversations about this. I was at CogX and Tristan, is it Bill Tristan… Tristan Harris. That’s it. He was talking about the kind of the urgency and the kind of responsibility of the tech organizations to kind of regulate themselves because it’s like nobody’s regulating them and it’s kind of a free for all.

And that in fact, we probably also need some kind of independent regulatory body because they can’t really surveil, like monitor themselves. So, there’s lots of talk and there’s lots of work happening in Washington around regulation and think tanks and discussions around that. So that’s definitely a big direction we need to go down in terms of regulation, in terms of democratization, in terms of transparency, but…

It’s also like, and then also in the UK, the prime minister here is going to be doing a big event later in the year, as like exploring the regulation of AI. So lots of conversations. I think it just needs to be hit on many different fronts because it’s kind of asking these conglomerates to kind of regulate themselves.

And the bottom line is really greed and capitalism and money. So I think that’s what’s going to win, to be honest. I mean, we’ve seen this play out before, right? But we are not saying we shouldn’t fight for it. And lots of people are doing lots of good work on that at the moment. But also creating our own independent systems is also vital to do that because also the rate at which the AI is learning is so exponential.

So yes, to answer your question, the regulation is key. Transparency is key. Us creating our own systems is key. But I feel there’s not one way. I feel, because like, when I’ve listened to people speak, like Ruha Benjamin, the author of Race After Technology, who’s a professor at Princeton University.

When I saw her speak, she was saying the same things, like we need to come together as communities. But also she was saying, the first time I heard someone say this, and I was very inspired, was the role of the artist. And to kind of, you know, show people what’s happening because some of these conversations are very elite, very exclusive and they happen in the Times and you know, these very kind of highbrow intellectual publications and some of even the terminology around computational science is not accessible for lots of people.

So, my responsibility is to make people experience the future today so that they could then start to be a part of this narrative and then understand their role within that narrative and then within the future.

Helen: Yeah. Well, when your app comes out in 2024 we’ll have to have you back on the show to talk about it and get it into as many hands as possible to interact with.

And I know we’re short on time and I feel like we are just starting to scratch the surface with you, Karen. But I guess if you want our listeners and viewers to remember one thing from this conversation or about your art, what’s the one thing you want them to remember and walk away with?

Karen: I want them to remember that the future is not something that happens to us, but it’s something that we create together. And I want, like, my work, my objective is that they’re not apathetic or acquiescing, that it activates agency in the participants. Because all of us have a role, and as I say, as the Storyteller from the Future, I came back to enable us to thrive.

To survive what is to come through the future of storytelling, the power of storytelling. But I also add that I did not come back alone, and I’ve come back here to connect with some of the people who also came back with me.

Helen: I love that you’re from the future. It’s so great. And tell us, it sounds like you’ve got a busy fall ahead with the conference and festival circuit.

So, where can people connect with you and experience your work?

Karen: Thanks for asking because I always forget. So, I’ll be on the tour with Consensus Gentium through now till the end of the year, and it starts at, I guess, came back from Ars Electronica, as you said, and then it’s going to be at the London Film Festival at the Immersive Strand from the 6th to the 22nd, I believe, of October in London.

Then it’s going to be in Taiwan at the Kaohsiung Film Festival from the 10th to the 22nd, I believe of October, then it’s going to be going to Geneva Film Festival from the, I believe it’s the 1st to the 6th of November. And then we are waiting for a confirmation that if we’re going to be at Kenya Innovation Summit, but it will, and then also a confirmation that it’s going to be at Rotterdam Immersive Tech Week which will be the first week in December. I think I may have left one out, but that’s the kind of general gist.

And if people want to follow me on social media, on Insta, it’s @storytellerfromthefuture. And on Twitter, it’s @KarenPalmerAI, and they can always also Google me or Consensus Gentium AI, and we’ll be updating where it’s going to be, or karenpalmer.uk. I’ll be updating that soon, and I’ll be really happy to come back on the show, like towards the end of the year, to kind of, you know, look forward to 2024 and where it’s going to be, because I know this has been a bit short, but I’d love to come back again at the end of the year.

Helen: Oh my goodness.

We just started scratching the surface of all these topics, so open invitation. And for all of our listeners and viewers, I know there were some books and other talks mentioned and her upcoming shows, which we’ll be sure to put in the show notes and on the dedicated blog post at CreativitySquared.com. So I think that’s time, but thank you so much, Karen. So good to have you on the show and so appreciate you sharing what you’re working on and such important work in terms of making us more aware of the tech. So, thank you for coming from the future to let us know. It’s my pleasure.

Karen: I think, I don’t know if you remember, but I’m pretty sure you’re one of the ones that came back with me, Helen, so I think you would know as well. I really feel that it’s part of a conversation. I have a feeling we’re going to get a lot deeper and go deeper in the next one.

Helen: That might be the best compliment I’ve received since launching my podcast.

So thank you. I will take it.

Karen: My pleasure.

Helen: Thank you for spending some time with us today. We’re just getting started and would love your support. Subscribe to Creativity Squared on your preferred podcast platform and leave a review. It really helps. And I’d love to hear your feedback. What topics are you thinking about and want to dive into? I invite you to visit CreativitySquared.com to let me know. And while you’re there, be sure to sign up for our free weekly newsletter so you can easily stay on top of all the latest news at the intersection of AI and creativity. Because it’s so important to support artists, 10 percent of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized nonprofit that supports over 100 arts organizations.

Become a premium newsletter subscriber or leave a tip on the website to support this project and ArtsWave. Premium newsletter subscribers will receive NFTs of episode cover art and more extras to say thank you for helping bring my dream to life. And a big thank you to everyone who’s offered their time, energy, and encouragement and support so far. I really appreciate it from the bottom of my heart.

This show is produced and made possible by the team at Play Audio Agency. Until next week, keep creating.