Ep25. Don’t Trust A.I. — Verify: Explore Explainable A.I., Policy Innovation, and A.I. for Good with MidwestCon & Disrupt Art Founder Rob Richardson

How do we build trust in a technology that nobody fully understands? On the latest episode of Creativity Squared, our multi-hyphenate guest discusses the need for transparency around A.I. from his perspectives as an attorney, academic leader, political candidate, innovation advocate, and entrepreneur.

“I care deeply about technology and its implementation, making sure that it’s done in an inclusive way. Because what we build, and why we’re building it has never been more important. I believe technology is actually not neutral. It depends on who’s building it and the purpose behind the people and institutions that are building things. That’s why I think the greatest thing that people can do who want to create a better world is to be part of building in this new ecosystem of opportunity.”

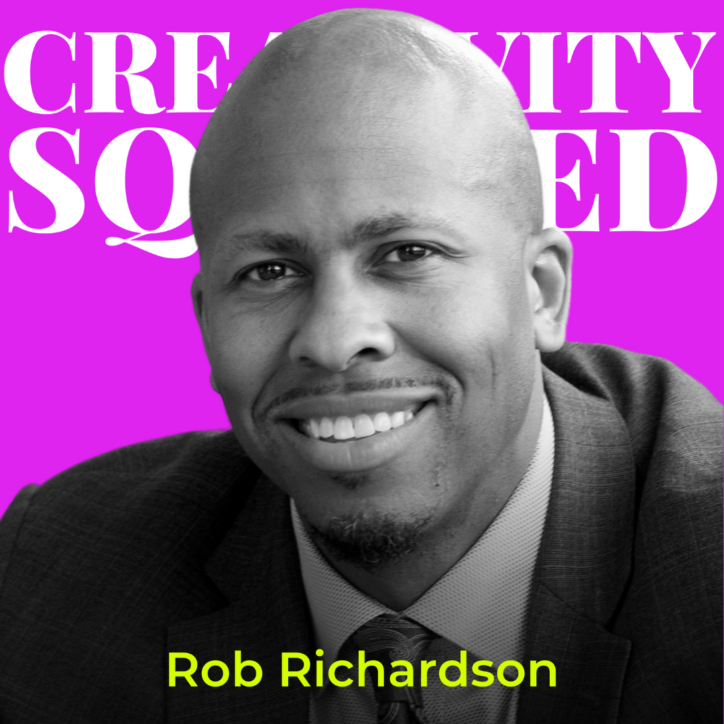

Rob Richardson

Rob Richardson is the CEO and Founder of Disrupt Art and the Founder of Disruption Now Media, a platform that includes MidwestCon, where Rob heads programming, and the Disruption Now Podcast, which Rob hosts.

Disrupt Art helps brands enhance loyalty programs and events, transforming them into dynamic platforms that elevate brand equity and unlock invaluable customer insights. Brands unlock the ability to gamify interactions using digital collectibles and A.I., ensuring every customer’s experience is not just memorable but meaningful. Disrupt Art envisions a world where customers aren’t just consumers but co-creators and stakeholders in the process.

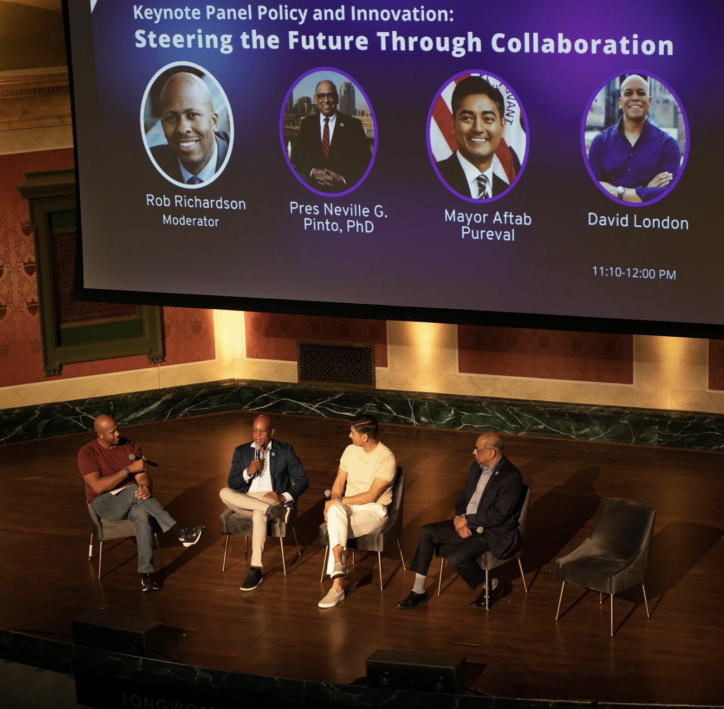

Rob is head of programming for MidwestCon, a one-of-a-kind conference and cultural experience where policy meets innovation, creators ignite change, and tech fuels social impact. He also hosts the Disruption Now Podcast where he has conversations with disruptors focused on impact.

Rob was the youngest Trustee ever appointed as Chairman of the Board at University of Cincinnati. During his chairmanship, he helped lead the University’s innovation agenda, establishing the 1819 Innovation Hub, where industry and talent collaborate to spark groundbreaking ideas. He created a leadership development and academic preparedness program for high school students. He also championed reforms to the University’s police policies.

Beyond business and academia, Rob has also been involved in politics. He ran for public office as the Democratic nominee for Ohio treasurer in 2018. He received over 2 million votes running on the platform of inclusive innovation for Ohio. Rob has appeared on MSNBC and America This Week, and is a regular contributor to Roland Martin Unfiltered.

In today’s episode, discover how A.I. can be a force for good and why explainable artificial intelligence is crucial in today’s tech landscape. You’ll hear about the trends Rob is paying attention to when it comes to web3, blockchain, and artificial intelligence and how he’s thinking about policy innovation, trust, and transparency. You’ll also learn more about the vibrant tech scene here in Cincinnati and how the University of Cincinnati is at the forefront of innovation in explainable artificial intelligence.

Cincinnati’s Growing Tech Community

Cincinnati has always been home to innovators: The Cincinnati Reds (known as the Red Stockings back then) was the first professional baseball team in the United States, the University of Cincinnati (UC) was the first municipal university established by a U.S. city, and now that same university is helping to lead a culture of tech innovation in the Queen City.

As a board member at UC, Rob has been involved firsthand in some of the efforts to advance tech innovation in the city and broader region.

He mentions Dr. Kelly Cohen, the Brian H. Rowe Endowed Chair in aerospace engineering and Director of the A.I. Bio Lab at Digital Futures, who is applying fuzzy logic to develop explainable and responsible A.I. The goal is to help A.I. reach its full economic potential by ensuring its trustworthiness. UC faculty are also researching A.I. applications in higher education, advanced manufacturing, environmental services, healthcare, and renewable energy.

“I believe the University of Cincinnati is going to be one of the key institutions really leading when it comes to A.I., when it comes to blockchain, and doing so in a way that is transparent, and that actually can help. Because everyone’s very excited about A.I., but I think we also need to be responsible about how these things are being employed.”

Rob Richardson

Rob also plays a key role in bringing critical tech conversations to the Cincinnati area through MidwestCon. Rob says that their goal with MidwestCon is to make it the South by Southwest of the Midwest. Last year’s lineup included speakers from the realms of public policy, web3, A.I., finance, fashion, advertising, and social impact.

Mitigating Bias and Building Trust with Explainable A.I.

Rob is an advocate for explainable artificial intelligence, which does not really exist yet for the most widely used A.I. systems.

All of the popular A.I. chatbot models available right now are essentially black boxes. User input is processed through a vast series of learned numerical operations whose combined behavior nobody can fully explain (not even the developers behind the technology). We understand the concepts undergirding the process (transformers, neural networks, etc.), but understanding the actual path from input A to output B remains elusive.

Rob says that this paradigm presents a significant risk of bias infecting artificial intelligence because “technology is actually not neutral”: it depends on who is building the A.I. and why they are building it.

That’s why he says that we also need to make sure that the people driving innovation represent diverse perspectives and experiences. But Rob says that the inclusion of diverse perspectives needs to go beyond Diversity, Equity, and Inclusion (DEI) corporate vanity projects. Rather, companies have to see diversity and inclusion for what it is: a fundamental component of business success.

Rob says that we should think about training A.I. the way we think about raising children: by striving to provide a well-rounded worldview.

“The more you teach a child bad habits and bad exposure, and when they become an adult, it’s very hard to change that. It’s the same thing with A.I. models and algorithms. And this is why it’s so important to think about the data, and how we input it and how we monitor it, and how we actually have transparency behind it.”

Rob Richardson

Rob cites an example from 2016, when Microsoft released an experimental A.I. chatbot called Tay on Twitter, encouraging users to engage in casual and playful conversation with the bot. Within 24 hours, Tay had transformed from a neutral machine into a conduit for all of the most vile misogyny, antisemitism, and racism that Twitter has to offer (which is saying a lot given the dumpster fire that Twitter, now X, can be!).

Certainly, A.I. has come a long way since then, with some guardrails in place on all of the major chatbots to mitigate harmful outputs. However, A.I. has been used for a long time in higher-stakes settings such as the battlefield, where the consequences can be far more severe and where the public is inherently unable to scrutinize those decisions.

UC Department of Aerospace Engineering alum Javier Viaña, Ph.D., is among those working to develop explainable A.I., which not only provides accurate predictions but also human-understandable justifications of the results. At the MIT Kavli Institute for Astrophysics and Space Research, Javier is working to develop deep neural networks to study planetary movement.

Rob says that research like Javier’s and the quest for explainable A.I. is a crucial step to achieve the best outcomes for everyone.

“I think the most important trend is to actually build more trust. And if we don’t build more trust, for all of our prosperity, it can end up really backfiring on us. If we can’t trust what’s being put in front of us, if we can’t trust any institution to be transparent about how our data is being used, it creates dysfunction within society.”

Rob Richardson

For Rob, A.I. for good means that we understand why we’re building A.I., and that builders are transparent about how it’s being built and how it will be used to better society beyond just making a profit.

Don’t Just Trust — Verify: Web3’s Applications in Combating A.I. Deception

It wasn’t long ago that Web3 was the greatest new thing in tech. Bitcoin’s massive rally in 2021, combined with the economic uncertainty introduced by the pandemic, resulted in an explosion of developers building trustless systems for almost every application imaginable. While the bubble seems to have burst for the NFT market, Rob says that it’s wrong to think that Web3 is dead.

In fact, he sees blockchain as an effective counterbalance to the new tools of deception enabled by A.I. technology.

“I see blockchain and A.I. being integrated together. And what I want to get away from is people get so lost in trends that they just say, ‘it’s only A.I. and Web3 is over.’ That’s just a fundamental misunderstanding of what’s happening. A.I. is another application of how we’re going to use the next iteration of commerce on the internet.”

Rob Richardson

Much like the way that cryptocurrencies such as Bitcoin enable users to transfer funds directly without a bank acting as a middleman, blockchain can provide the infrastructure for verifying the authenticity of images, videos, and even personhood in the age of artificial intelligence.

That’s the thinking behind projects such as Worldcoin, the blockchain application co-founded by OpenAI CEO Sam Altman. The mission of Worldcoin, according to Altman, is to solve the problem of proving personhood online, a problem that Altman ironically helped create. The technology works by scanning your iris and assigning you a unique digital ID that is meant to be impossible to transfer or steal (Note: there are A LOT of concerns with Worldcoin, but it’s worth noting as an interesting proposal that’s trying to solve the identity problem outside of governments).

In the creative world, the Content Authenticity Initiative (CAI) is building a system, based on cryptographically signed metadata and in some implementations blockchain, to verify the authenticity and provenance of images distributed across digital spaces (here at Creativity Squared, we fully support the CAI’s efforts!).

Earlier this year, the European Union adopted the world’s first comprehensive package of laws to regulate crypto assets. Rob says that the U.S. has a lot of catching up to do in order to protect users from bad actors.

Policy Innovation and A.I.

Rob sees parallels between the harms caused by social media and the risks that we’re facing with the rise of generative artificial intelligence. He points to the 2016 presidential election as the critical juncture where many started to see how machine learning algorithms distort the public dialogue by boosting the most inflammatory (and engaging) content. Now that A.I. can generate deceptive content on an industrial scale, Rob says that we need to start thinking critically about proactive regulation rather than waiting for a crisis and reactively rushing to impose half-baked regulation.

As a former political candidate and current entrepreneur, Rob recognizes that regulation needs to balance public safety concerns with the economic and national security benefits of unstifled innovation. He says that good regulation can drive innovation by establishing clear rules of the road.

“What people fail to understand is that, without a stable government, innovation fails. Otherwise, Afghanistan would be killing innovation, because they have no government. Done right, a stable government promotes innovation, not the other way around. Innovation does not promote a stable government.”

Rob Richardson

Rob says that achieving effective policy will require efforts from both tech companies and public officials. Companies have a duty to build transparent systems that can be understood and scrutinized by policymakers, while policymakers have a duty to engage in good faith and try to understand the technology they’re regulating.

But what should a regulation regime look like? Self-regulation? A civil enforcement watchdog group?

Historically, self-governance has succeeded in certain industries. Hollywood and video gaming are two examples where companies collaborated to establish their own guidelines around age-appropriate content, rather than cede that authority to government institutions. Such a regime can balance First Amendment considerations, corporate profit motives, and protecting children from inappropriate content.

However, Rob questions whether self-governance is sufficient to protect public interests against A.I. risks. Returning to the social media comparison, we’ve seen how self-governance can fall short with Meta’s controversial, “independent” Oversight Board.

Critics have lambasted the Oversight Board as a toothless “PR stunt” meant to rehabilitate the company’s image following criticism about misinformation campaigns that have proliferated on Facebook.

Rob is also skeptical about the idea of a watchdog or civil enforcement solution modeled on agencies like the Securities and Exchange Commission, which oversees the securities markets, or the Food and Drug Administration. Both agencies receive criticism for perceived deference to the companies they’re meant to regulate. Critics point to the fact that budgets for both agencies rely largely on fees paid by companies subject to regulation.

“Watchdogs are good, but generally, they probably get funded by the people that they’re watching. Which becomes part of the problem, right? And so, where you’re going to need policies, you’re going to need somebody that has at least somewhat removed from the entities that have to be monitored.”

Rob Richardson

Regardless of how government and industry decide to regulate generative A.I., Rob says that citizens and users cannot afford to take a back seat.

Our Individual and Collective Responsibilities in Adapting to A.I.

Rob says that A.I. is similar to computing and the internet in the sense that they are “general purpose” innovations. There are plenty of people who don’t use social media or cryptocurrency, but there are very few people who don’t use the internet if they have access to it. Similarly, Rob sees GenAI becoming an unavoidable and inevitable part of our lives (as A.I. is already embedded into all facets of our lives through the tech we’re already using).

While we must ensure that our institutions, laws, and corporate interests are moving in the same direction toward building transparency, Rob says we all have an individual duty to prepare ourselves for the paradigm shift as well.

“I want technology to help bring us together to create more opportunities for folks. But that requires us to be informed advocates and citizens and to not just accept what’s in front of us, be it from Google, or be it from the government. We have to be willing to disrupt and define our own path.”

Rob Richardson

Listen to the episode for more of Rob’s thoughts on A.I. regulation, protecting the creator economy, the importance of strong government institutions, and using artificial intelligence to augment human ability.

Links Mentioned in this Podcast

- Check out Rob’s marketing agency, Disrupt

- Come see Rob at MidwestCon 2024!

- Disruption Now podcast Instagram page

- UC Digital Futures Building

- UC’s 1819 Innovation Hub

- UC Researchers at the Cutting Edge of A.I. Research

- Twitter Taught Microsoft’s AI Chatbot to be a Racist

- Dr. Javier Viaña’s Personal Website

- Worldcoin

- Content Authenticity Initiative

Continue the Conversation

Thank you, Rob, for being our guest on Creativity Squared.

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency that understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 150 arts organizations, projects, and independent artists.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

[00:00:00] Rob Richardson: Because what people fail to understand is that without a stable government, innovation fails. Otherwise Afghanistan would be killing innovation, ’cause they have no government, right? A stable government promotes innovation, not the other way around. Innovation does not promote a stable government.

And if so, I want people to give me one example of a place where there’s a lack of a stable government where people are just killing innovation. I’ll wait.

[00:00:24] Helen Todd: Rob Richardson is the CEO and founder of Disrupt, which helps brands gamify loyalty programs and envisions a world where customers aren’t just customers, but co-creators and stakeholders in the process.

Rob is also the founder of DisruptionNow Media, a platform that connects entrepreneurs, creators, and leaders through immersive events and educational content. This platform includes MidwestCon, a one-of-a-kind conference and cultural experience where policy meets innovation, creators ignite change, and tech fuels social impact.

Rob curates and is head of programming of MidwestCon, in addition to being the host of the Disruption Now podcast, where he has conversations with disruptors focused on impact. When Rob was appointed chairman of the University of Cincinnati’s Board of Trustees, he became the youngest person to serve in the role in the university’s history.

In his tenure, he established the 1819 Innovation Hub, where industry and talent collaborate to spark groundbreaking ideas. He created a leadership development and academic preparedness program for high school students. He also championed reforms to the university’s police policies. His education includes a Juris Doctorate and Bachelor of Science in Electrical Engineering from the University of Cincinnati.

He established the first student chapter of the NAACP at the university and was elected student body president. He received the University of Cincinnati Presidential Leadership Medal of Excellence, the Jeffrey Hurwitz Young Alumni Outstanding Achievement Award, the Nicholas Longworth Alumni Award from the College of Law, and an honorary Doctor of Laws degree.

Rob also ran for public office as the Democratic nominee for Ohio Treasurer in 2018. He received over 2 million votes running on the platform of inclusive innovation for Ohio. Rob has appeared on MSNBC and America This Week, and is a regular contributor to Roland Martin Unfiltered. Rob and I met at this year’s MidwestCon, where speakers included Cincinnati mayor Aftab, University of Cincinnati President Neville Pinto, Coinbase’s Head of U.S. State and Local Public Policy, David London, and Emmy-nominated co-founder and host of What’s Trending, Shira Lazar. He’s invited me to be on the MidwestCon committee for 2024’s conference happening next September. So sign up for the free Creativity Squared newsletter at creativitysquared.com to not miss when the dates are announced.

In today’s episode, discover how AI can be a force for good and why explainable artificial intelligence is crucial in today’s tech landscape. You’ll hear about the trends Rob is paying attention to when it comes to Web3, blockchain, and artificial intelligence, and how he’s thinking about policy innovation, trust, and transparency.

You’ll also learn more about the vibrant tech scene here in Cincinnati, and how the University of Cincinnati is at the forefront of innovation in explainable AI. Listen on to understand how to challenge assumptions and common narratives in order to move forward and create equity and positive impact with technology.

Enjoy.

Helen Todd: Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox, on YouTube, and on your preferred podcast platform. Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space.

The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Helen Todd: Welcome to Creativity Squared. It is so good to have you on the show.

Rob Richardson: Good to be here, Helen.

Helen Todd: We recently met at your conference, MidwestCon, this past August. But for those who are meeting you for the first time, I know you wear many hats, but can you introduce yourself?

[00:04:37] Rob Richardson: Yeah, sure. So I’m Rob Richardson.

I’m the CEO of both DisruptNow and DisruptionNow Media. I care deeply about technology and its implementation, making sure that it’s done in an inclusive way, because what we build and why we’re building it has never been more important. I believe technology is actually not neutral. It depends on who’s building it and the purpose behind the people and the institutions that are building things.

And that’s why I think the greatest thing that people can do who want to create a better world is to be part of building in this new ecosystem of opportunity.

[00:05:07] Helen Todd: I love that. And we’ll definitely dive into AI for good in the conversation. One of the reasons why I wanted to bring you onto the show also is we’re located here in Cincinnati and we’ve met up at the UC Digital Futures Building, and there’s a lot happening here, including your conference. And I don’t think a lot of people, even locals, know all of the excitement happening around tech and AI and NFTs and blockchain. So I was wondering if you could kind of tell our listeners and viewers, who are really international, what’s happening here locally in Cincinnati.

[00:05:43] Rob Richardson: Yeah, it’s really interesting. You know, Cincinnati, even locally, people don’t always know. I think we sometimes are the worst marketers, but you know, we have a lot of innovation happening both within the AI and within the blockchain space, which I believe are related because they both deal with data.

One’s data ownership. One is using data to make us more efficient. But you know, the University of Cincinnati is a huge institution at this point. 50,000 students now there. It’s one of the largest research institutions in the world. University of Cincinnati just opened a huge facility, a 180,000-square-foot facility called Digital Futures, as you just discussed, with the focus of using digital technology, research and innovation to help businesses and communities solve real-world problems.

So, you know, I believe that universities, and of course, I think the University of Cincinnati, I’m biased being that I was the chairman of the board there and helped lead some of this innovation that’s happening now. I believe University of Cincinnati is going to be one of the key institutions really leading when it comes to AI, when it comes to blockchain, and doing so in a way that is transparent.

And that actually can help, because I think, you know, everyone’s very excited about AI and what’s happening right now. But I also think in the rush of our excitement, we also need to be responsible about how these things are being deployed. We need to be transparent about it.

And actually, the University of Cincinnati, through Dr. Cohen and many others, has been leading in AI and the conversations and the research and the technology around what’s called explainable AI. That’s a way of just saying, making sure we understand the algorithms and the decisions that the algorithm makes. He’s been doing that for 30 years, and AI is hot to everybody, but AI is not a new concept.

And so I think Cincinnati, with its resources, with the innovators that are building in the space, you know, we’ve built a whole platform using blockchain that helps brands enhance their experience. We’ll talk more about that. There’s a huge blockchain center within the University of Cincinnati.

So there’s just a lot happening in this area that I think can help really lead the world. So we’re hoping that the world comes out and sees it. And obviously MidwestCon is one of the activations; we met at our annual activation. Our long-term goal, and where we’re headed, is to make that essentially the South by Southwest for the Midwest, but really focusing heavily on the areas of Web3 and AI.

So, I’m sure I’m missing a lot. There’s a lot of other stuff going on, really, not just in Cincinnati itself, but even in Ohio. There’s just a lot going on. If you look at all the data centers that have moved to Columbus, from Google to Amazon, to the Intel project for the chip. All of that is related to us because, you know, Columbus is only an hour and a half away. So I see us as part of an ecosystem to help build here within the Midwest. And so all of those things are happening at the same time. And there’s just a lot of opportunity happening within the Midwest that I think people really underestimate.

[00:08:39] Helen Todd: Thank you for that rundown and to plug a few upcoming events just for the listeners who are local or are able to come.

We’ve got the Cincy AI meetup for humans, which I’ll put the links down for. And then anyone who wants to create or learn about digital avatars or have their own avatar made, that’s happening this October too. And then of course, mark your calendars for MidwestCon for next year. And Rob was so nice to invite me to the MidwestCon committee.

[00:09:10] Rob Richardson: So we’re going to have you speak next year and everything. So we’re looking forward to it. You’re going to lead a session, maybe we’ll lead a podcast there.

[00:09:18] Helen Todd: I would love that. And speaking of podcasts, you have your own podcast. So do you want to plug that really quick?

[00:09:23] Rob Richardson: I do. The podcast is DisruptionNow, and we’re all about disrupting common narratives and common constructs to empower people.

And so most of our stories focus around either entrepreneurs that are in the space of using technology for good or tech founders that have overcome, you know, really hard circumstances and how they did it. And we also have social impact leaders too. So, all about those who are causing disruption in a good way, though.

[00:09:49] Helen Todd: Thank you. And well, I think one thing that you said that was funny about Cincinnati is that it’s a city that’s bad at marketing itself. And yet it’s kind of the home of branding with a P&G background. So, and I know since I’ve moved back to Cincinnati in 2020, like I’ve got to rediscover and refall in love with the city.

And at the grand opening of the UC Digital Futures Building, I mean, it was really impressive what they’re doing. And when I tell people about it, no one’s heard about it. So we’re helping change that one conversation at a time.

Well, one person that’s had kind of a through line through all of these, whose research was featured at the UC Digital Futures Building, and who was on stage at MidwestCon and on your podcast, is Javier, who studied at UC and now is doing a postdoc at MIT on algorithms and transparency. So I’d love to hear your opinion, since you’ve got to talk to him on stage and on your podcast, on kind of his perspective. ’Cause I think it’s really interesting.

[00:10:57] Rob Richardson: Yeah. Javier is such the underachiever, right? You know, research at UC, then went on to do a postdoc at MIT about explainable AI, which I didn’t even know what explainable AI was until I met him.

So what explainable AI is, it’s providing transparency behind AI. So, we also have a very good podcast, a full podcast with him. I’m sure you’re going to have him on too, but at DisruptionNow, you want to check it out on YouTube or disruptionnow.com. You can find it there or wherever you listen to podcasts, but I’ll say this.

The basic part of this is like, when an algorithm makes decisions, we don’t understand most of the time how it’s making decisions. So if you raise a child, let’s say you want to make sure your kid has good decisions, good habits. So, your kid will then make better decisions, right?

So, we need to understand just how the algorithm is making these decisions; it is like a baby. And the more information and habits it develops, it’ll just keep making these assumptions based on those things, right? So we got to make sure that we understand how that’s happening, so that we can kind of correct course to make sure that we don’t make really bad decisions or we don’t have AI make really bad outcomes for us.

And I know most people are looking at AI right now and are only thinking about, Oh, I get to change my face, make myself look like I’m 10 years younger, or I get to, you know, make a cool avatar of myself. All those are really fun things, right? Cool, right? But what happens, though, when these decisions are made in life or death situations such as on the battlefield, which they are being made right now? I’d like the audience to know AI is not new at all.

It has been used a whole lot. And there’s been some abuse of that in terms of its application. And so what’s new is generative AI and how, you know, it could make decisions and learn at a quicker pace where we can all use it more efficiently, but AI as a concept, AI as an application, none of that is new, right?

So it’s already being used in the battlefield. It’s already being used in how we make health decisions. So how it makes decisions as we move to this next level of generative AI becomes very important for us to see and understand, but most people don’t have any understanding of how the AI makes decisions or how it works.

And that goes for people that actually make the algorithm, which is kind of scary.

[00:13:13] Helen Todd: Yeah, thank you. And I know Ezra Klein on another podcast had talked about how the onus is really on the creators to be able to explain it. And then actually one of the gentlemen who spoke at the UC Digital Futures AI panel told me afterwards, because I was really confused why we don’t know this, and he just explained, like, the complexity once you get to a certain, I think he called it dimensionality, of like how much input is happening.

[00:13:42] Rob Richardson: There’s an easy way to think about this, right? So this is why it’s complicated, right? If you are building an algorithm, right?

There are many things involved in the level you’re building it. It’s like, you’re almost building a nation, right? So let’s say, you and I, Helen and I, are working on a complex algorithm. All right. None of us will completely know what’s going on in all parts of it. Just like if we were in the United States government, right?

And we might be working in Cincinnati, Ohio. There’s no way that everyone in Cincinnati, Ohio knows everything that’s connected to things happening in Los Angeles, right? And there’s no way for us to know that. We each know our part. We know our local areas. We know how the government and the institutions and the nuances work within our own systems that we are in charge of.

If we’re only in charge of an area of Cincinnati, Ohio, which is a big area, how can we understand what’s happening with 300 million people across different institutions all across, right? So it’s like building a nation, and sometimes it’s like building a global concept. So, you have people that only work on certain concepts.

It’s impossible because it becomes so large for anybody to have complete understanding of how it all connects together. And therein becomes the challenge.

[00:14:53] Helen Todd: Yeah. Well, I’m excited to get Javier on the show to kind of delve more into, like, how do you overcome this? Because it is so important. Well, and from the seat that you sit, ’cause one thing that I really appreciated about MidwestCon is, you know, I think at every conference AI is the hot topic, but you really encompass more than just AI, like web3, crypto, NFTs.

So, I’d love to kind of hear, you know, from a trends perspective, what you’re paying attention to and find interesting right now.

[00:15:25] Rob Richardson: In terms of trends, I would say what I see is the same trend we’ve had for a long time. Because if you talk about web3, blockchain, whatever you want to call it, and you talk about AI, what do both of those things have in common, right?

We’re talking about data. So, to me, it becomes how we understand and trust how our data is being used, how our data is being communicated to us. So I think the most important trend is to actually build more trust. And if we don’t build more trust for all of our prosperity, it can end up really backfiring on us.

Right? So if we have systems that we cannot trust anymore, if we can’t trust what’s being put in front of us, if we can’t trust any institution to be transparent about how our data is being used, it creates dysfunction within society. And I know I’m being dramatic here, but I think given the state of the government and given the state of the world, I think there’s reason to be dramatic, right?

So AI has such great potential, and I believe in AI, right? This is why we focus on policy innovation, right? People don’t think of policy and innovation together, but they need to be thought of together, because we need to start having conversations more and more among those who are governing us, the researchers like Javier, and the founders, and there needs to be real collaboration.

What often happens is there’s a big disconnect. On one side, if you come from the founder, kind of business side, they just say, like, “We need no rules, just get out of the way and let us do it.” And that’s only true to a point, ’cause that can be naive, right? If you don’t have clear rules of the road, if you don’t have some protections, that can lead to multiple abuses.

We’ve seen it over time. But at the same time, if government doesn’t understand how the basic fundamentals of a lot of this technology around data work, then they’re at a loss as to how to actually do this in an effective way. So I think the greatest trend has to be us figuring out how to move faster, because innovation is moving, you know, faster than we can really comprehend.

So generally, the government is like 10 years behind, but the problem with that is that every year we move 10, I would say now 20 or 30, years into the future. So every year we’re getting behind, we’re like 30, 40, 50 years behind. So, I think the greatest trend has to be how do institutions work with innovators to both protect the public and innovate. And this is not an easy thing to do, but I think it’s something that we have to spend more and more time doing. You know, the second part of it is I think the creator economy overall is a part of this, right? So, how we can use these tools to empower creators and not just exploit them.

So you know, I believe the writers’ strike being resolved was very important. Because I believe in creators also getting paid for what they do. Right. And not just saying, okay, I can use your image forever using technology and never have to pay you or talk to you again.

That’s crazy to me. Right? But that would happen without any type of stepping in, without any type of either unions or some type of protection. So, the writers stood up for that, but we’re going to have more of those conversations. What does the creative economy look like? We’ve become so accustomed to free tools that we haven’t asked what we’re trading for the free tools that we get, right?

When you’re on social media, I tell people nothing’s free. If it’s free, you’re the product. That’s what it is. Right. And so we need to figure that out. Then the third trend becomes data autonomy and privacy and what that looks like. I think people would be willing to give up some of their privacy if they have informed consent and understand what they’re giving up.

But I just had this conversation with my father this morning. He was saying there was this app on Apple that allows you to record all your health data. And then it gives you some feedback about how close you might’ve been to falling based upon your movements. Pretty cool, because as you advance in age, one of the ways that people actually end up dying or having serious injury is from falling, because of lack of balance and things like that. The dark side of that is, can that then be shared with your health provider, and your health provider figures out ways to charge you more? See, so we’re going to have to really think about, you know, what data privacy and ownership looks like. But also it can do wonderful things, because that can obviously prevent you from falling.

It can help you probably build case studies to help a lot of people and to make sure that others get ahead of things before things actually happen. But again, there has to be a balance with all these things. So, I think the creator economy, data ownership, and how we actually think about policy are the things that I would say we need to look towards.

I know that was a long answer.

[00:20:16] Helen Todd: I love it. It makes my job a whole lot easier as the interviewer. And it seems like also, you know, I know in Europe there’s a lot more just conscientiousness around data privacy. And it seems like in the States that people are waking up to this more and more. Do you see that and feel that too from your side?

[00:20:38] Rob Richardson: I do. And I think there are several things going on. So, I’ll talk about data privacy, and I want to talk a little bit about blockchain when it comes to Europe, because I think, which is ironic, they’re way ahead of us on having clarity there. So, you know, Europe for a long time has been focused on data privacy.

I think, you know, the United States really woke up during the 2016 election, when folks first began to really realize how data was being manipulated, right? And I think that’s the first time that I really got an appreciation. And I read a book, I’m trying to remember what the book is.

I think it’s called Everybody Lies. I can’t remember, but it’s actually about data and how it’s being used to really manipulate opinion. But it’s not like there’s this intention where Facebook is going out to say, “Okay, I have an agenda.” That’s not what Facebook is doing.

What Facebook is doing is what it thinks the user wants. Right? So, yeah. Which sounds great initially, but if you’re getting information that is reinforcing your own bias, that is not good, because what it does is it puts you more into a pigeonhole, it reinforces things that half the time probably are not true, and then it just reinforces that over and over again. So, we saw that in 2016 a lot, and people got, I think, some appreciation for what that did. So, you know, nothing happened, but, you know, Europe’s been ahead of that. What I do think is if we don’t get some handle around that soon, you know, how AI can be used now with deep fakes, which I’m sure your users know, but long story bearable, you can do something like emulate my voice, take my exact image, and have me say things. And then it looks like I said it. And then all of a sudden somebody can maybe run a commercial on that against me, right? That’s pretty troublesome, right? That’s just one example of things that we have to be concerned about. So I do think Europe is ahead on that, and I think we need to get caught up very quickly. They’re also ahead, though, in one way I think is very important, when it comes to blockchain and fintech, right?

They have a set of laws that are clear about how we can use crypto, how we can use blockchain. And we’re way behind here in the United States on that, on how we can transact and do business. And why blockchain is so important, if I can take two seconds here: what makes it so key is that it makes it much easier for us to connect and do business.

And so, traditional banking is not available to most of the world, and traditional banking takes so much longer for funds to be received. But with blockchain, most things are instant, right? So you can do something and just transfer over money, because money is nothing more than just what we agree it to be.

And what blockchain allows us to do... I just tell people, at a very basic level, blockchain is like Google Docs. Everybody knows what Google Docs is. I think of it as Google Docs without Google. Everybody can share data, but nobody can have central control over it. So, instead of one entity controlling something, it’s multiple peer-to-peer computers all across the world.

So it’s essentially a sophisticated program that allows no one to control it, but everyone to have access to it. And so that’s how money, that’s how Bitcoin and things like that started. But there’s so many more applications to how businesses can work together and move money and connect faster. So, if you think about innovation, I have many definitions for innovation.

One is it’s a rebellion against the status quo. But I also think it allows us to connect and work together without the barriers in between. And so that’s what blockchain allows us to do. It allows us to send money to one another. It allows us also to have digital ownership, right? Because, as I explained, the way crypto basically works is we can just have a ledger.

We all agree that this is what it is and nobody can manipulate that. Right? That’s how you’ve seen money being exchanged from one party to another without a bank having to do anything in between, which is pretty powerful. Number two, it also helps with digital ownership. Why is that important? Well, go back to what we talked about with AI.

AI at this point has a lot of upside and I believe in it, but there’s also things we have to keep guardrails on. Talk about ownership of your assets, of what you create: how do you verify that? You know, with a blockchain that’s decentralized, we don’t have to go through one party. Right now with AI, who are the big players in AI? Essentially Microsoft and Google.

So, what we’re saying right now is, in Google we trust, right? We have to then say they get to verify what all the data is. We don’t know how they’re verifying what is what. And I’m not saying they’re doing anything nefarious. I’m just saying trust, but verify. We shouldn’t have to rely on one or two sources to figure out if this data is authentic, if this is actually Helen that produced this, or is this just an AI that copied off of it?

So, digital ownership on blockchain, this is what people call NFTs, the fancy term. There’s another way it can apply. That’s a digital fingerprint that a person can own, and nobody can then change that over time. And if that’s your image and you decide that’s your digital ownership, digital copyright, it could be a way that you can trace it. And, check this out:

Eventually, it could be a way that you can get royalties off of that for life and to make that automatic. So I see blockchain and AI as being integrated together. And what I want to get people away from is, people get so lost in trends that they just say it’s only AI and web3 is over. That is wrong.

It’s a fundamental misunderstanding of what’s happening. AI is another application of how we’re going to use the next iteration of commerce and the internet.
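
Editor’s note: the “ledger that nobody can manipulate” and the “digital fingerprint” Rob describes above can be illustrated with a toy sketch. This is not how any particular blockchain or NFT platform is implemented. It is a minimal single-machine hash chain, assuming SHA-256 as the fingerprint function, and the names `fingerprint`, `add_entry`, and `verify` are hypothetical helpers made up for this note. A real blockchain also replicates the ledger across many peers and uses a consensus mechanism, which this sketch leaves out.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest, used here as a 'digital fingerprint'."""
    return hashlib.sha256(data).hexdigest()


def entry_hash(entry: dict) -> str:
    """Hash an entry's contents (everything except its own stored hash)."""
    body = {key: entry[key] for key in ("index", "payload", "prev_hash")}
    return fingerprint(json.dumps(body, sort_keys=True).encode("utf-8"))


def add_entry(ledger: list, payload: dict) -> dict:
    """Append an entry that is linked to the previous entry by its hash."""
    prev_hash = ledger[-1]["hash"] if ledger else "0" * 64
    entry = {"index": len(ledger), "payload": payload, "prev_hash": prev_hash}
    entry["hash"] = entry_hash(entry)
    ledger.append(entry)
    return entry


def verify(ledger: list) -> bool:
    """Recompute every hash and link; any edited entry breaks the chain."""
    prev_hash = "0" * 64
    for position, entry in enumerate(ledger):
        if entry["index"] != position or entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    artwork = b"bytes of an image Helen created"  # placeholder content
    # Record who claims the work, identified only by its fingerprint.
    add_entry(ledger, {"owner": "Helen", "work": fingerprint(artwork)})
    # Record a transfer between two parties with no bank in between.
    add_entry(ledger, {"from": "Helen", "to": "Rob", "amount": 5})
    print(verify(ledger))

    # Quietly rewriting history is detectable by anyone holding a copy.
    ledger[0]["payload"]["owner"] = "Someone else"
    print(verify(ledger))
```

Because each entry stores the hash of the one before it, changing an old record changes its hash and breaks every later link, so anyone with a copy of the ledger can check it without trusting a single company. That is the “verify” half of “trust, but verify.”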

[00:26:23] Helen Todd: I love that. Don’t trust, verify. Especially as we’re coming into, you know, 2024 elections and world elections. And you don’t even need deep fakes.

You could look at the Nancy Pelosi video that was just slowed down, using traditional video editing to manipulate it. So, that whole, like, is it real or is it not? Yeah, don’t trust anything that you see. Verify it first. I think it will be very big.

And you know, one interesting trend on the blockchain front is, you know, I’ve had a social media agency for 13 years, and Facebook, you know, now Meta, has kind of been, what is it, like the bat signal for me. Like when they announced VR, I was like, okay, I should pay attention to VR and stuff, but with their new Twitter-like platform Threads, I think something that’s really interesting is that it’s going to be partially decentralized.

And with Meta being so... you know, data is their oil, as with everyone, but being open to a decentralized application of Threads, I think that’s a really interesting signal to pay attention to as well, because they see where the trend is also going. Yeah.

[00:27:31] Rob Richardson: I mean, I agree. I think people are going to want to understand and control some of their own fate, or at least understand how they can be connected, right?

So I see the trend going more towards authenticity, like making sure that you have a way to develop and connect with your community, right? So, this is something we talk to brands about at Disrupt Art. And part of what we do is help brands connect with their community across all platforms and really gamify loyalty experiences, gamify brand affinity.

That’s the definition of essentially what we do. But doing that in a way that enhances authenticity, right? So you can use AI to understand your consumer, but you should be transparent about it, and then connect with them everywhere that they go in a way that builds something that they want, right?

So, right now, brands kind of just go about working with people by trying to track them, trying to figure out where they are everywhere and learn their behavior. And some of that works. I’m not saying that doesn’t work. What I’m saying is to really take that to a level where you’re seeing your customers more as your community. How can you figure out ways to co-build with them, to make them stakeholders?

Doing that takes more upfront work, but I believe that the long-term trend is that’s going to pay back more. Because those are the brands that people will connect with, that will really take not only their profits but their interactions with that consumer to the next level, because the people that really care about connecting with that brand, and that are tied to digital experiences, they will enhance your exposure.

And so making sure that you know how to reach them and connect with them where they go is going to be really the new trend for brands. For individuals, you know, I think as AI becomes more prevalent, which it will, as we see more deep fakes, as we will, as we see more cybersecurity attacks, which are going to increase, people are going to become more and more just distrusting of what they see.

So, they have to find ways to say that they are owning their own opportunities, that they have the freedom and autonomy to be able to make their own decisions, and they’re not being manipulated in their decision making.

I do think more and more people are going to become more skeptical, which could be a good thing, but it also could be a bad thing, right? ’Cause then people could be skeptical about everything. Oh, I should never take a vaccine again. I should never do this. So, the more you institutionalize manipulation, let’s say, the more you build wider distrust, and that can have greater effects for society.

So, I think we have to think about ways to build trust and make it clear that people are getting a chance to make their own decisions, take ownership of their own data, because in the very near future, that is, right now, you know, your data ownership is really determining how you navigate the world.

[00:30:34] Helen Todd: There’s a really good documentary, it’s long but it’s on YouTube, called HyperNormalisation by Adam Curtis. And I actually need to rewatch it, and it was before 2016, but he weaves in the history of algorithms, Trump, and Assad all together in a really interesting way. But basically, at the outset of it there was a psychologist assistant, and it just mirrored back what the person typed in, like “I’m feeling lonely,” and the computer would just say, “Oh, I’m sorry. Oh, you’re feeling lonely.”

And within a couple of minutes, she was like, “Oh, can I, you know, have this privacy with the computer?” And that was like the onset of algorithms just mirroring everything back to us, which is really fascinating. But I think one policy thing that I would love to see is just media literacy in our schools, because I don’t think that’s widespread enough, and I feel like it’s easy low-hanging fruit to hopefully get across the board, but I don’t know where that’s at at all.

[00:31:37] Rob Richardson: We’ll see. I think you make a good point about the reflecting of ourselves in terms of algorithms. And we have to really ask the question too, what happens then when the algorithms actually know us better than we know ourselves, right? ’Cause that’s possible. I think that’s probable. Is that something that we actually want, right?

These are things that we have to think about: who gets to own the algorithms, and what do we want algorithms to do? Another question to ask: do we want algorithms to be running corporations? They can do that. I’m sure they can at some point soon. And just have a few of the super elite who have access to the super algorithms. Do we want them owning property?

Sounds weird. Sounds weird, an algorithm owning property. But I’ll ask you this question, Helen. Who owns most property in the world right now? Is it actually people that own it?

[00:32:26] Helen Todd: I mean, there’s stories coming out that I think three corporations will own most houses. Yes. Like 60% of houses in the US right now, which I find problematic. Yeah.

[00:32:39] Rob Richardson: The answer is, the two entities that own property in the world, most of them are either nations or corporations. They’re not people individually. Right? So, it’s not a stretch to say algorithms could end up owning a lot of our property, land. And, you know, I think this is extreme, but, you know, you could have algorithms run parts of the nation too.

And all these things I’m not necessarily saying are inherently bad to happen. But when I think about artificial intelligence, I think the better way to think about artificial intelligence is augmented intelligence. What it should be doing is helping us enhance the human experience, making better decisions alongside humans.

What I want to be careful of is giving all of our autonomy away to technology and not involving the human experience. I think that could be a mistake. But I think that could be something that, you know, without thoughtfulness, without some regulation, that is more likely to happen than not because that will produce the most return in the quickest manner.

But again, I think we have to balance that out with what’s also good for society overall. And this is a balance we have to think about.

[00:33:54] Helen Todd: Yeah. And I know a friend of mine, who’s a brilliant legal mind from Europe, mentioned something about how Europe is approaching that from a regulatory sense, which is that there has to be a human touch in decision making when it comes to algorithms and AI. And I don’t know the specifics, but that’s one approach that they’re taking, that it absolutely has to have a human aspect to it.

[00:34:18] Rob Richardson: I mean, there is, and it depends on how they do that. Like, you can go too far with that too. This is why this is such a hard thing to do, but I know that we have to start thinking about this now, because I’m afraid of what could happen if we don’t, if there’s no type of approach to this. What could end up happening, the worst case scenario, is there’s rapid job loss very quickly.

And people then build a lot of mistrust, and then they try to just push back against innovation happening at all. And then we take ourselves way back because we don’t function well as an actual government. So, my thing is, and you know, this is just me, ’cause I have this weird intersection, as you said, like I’ve had experience with education. I was on the board of the University of Cincinnati for nine years and helped them lead their innovation agenda. I ran for office for a period of time, and now I’m on the founder side. So, you know, I believe I have enough perspective on multiple points of view to know that we have to get our head around this and really start having more of the conversations we’re having now.

I think it’s the most important thing we can do, because technology, again, is theoretically neutral. But I’ll give you one example, a clear one, with algorithms. So Microsoft, who of course is one of the biggest investors in ChatGPT, which everyone thinks is the only model for AI, but I don’t want to go down that route, but that’s what got popular.

It’s the Facebook of AI, right? It popularized this technology of AI and how we use it. But before that, Microsoft, and also every other technology company, had been pulling from the internet the data and the conversations we have with each other. So before this, a few years back, Microsoft essentially created a racist bot.

Now, what do I mean? How was a racist bot created? Well, what it did is it pulled conversations from Twitter. Have you been on Twitter? Right? You hear the conversations on Twitter, and so it took real human conversations, and it’s not like it wasn’t accurate.

It was accurate because we all have biases built in us and people are on the internet and they, a lot of times they say their unfiltered opinion that they wouldn’t say in front of you or me. They wouldn’t say these type of things in person, but people feel emboldened and empowered on the internet.

So the Microsoft bot took that data, and then when people started interacting with this bot, it started saying these racist things, misogynistic things. And they’re like, surprised. How are you surprised? They’re surprised because no one’s in the room like me or you. It’s the same people that have the same type of thinking, and they don’t think, “Oh, we need to account for and understand the biases behind the inputs that are being put in.”

And so that happened right there. Technology is neutral, but if the inputs are biased, it’s garbage in, garbage out. And that’s just one simple example of how we have to think about technology and how we’re building it. There needs to be a thought process behind this, and there need to be diverse forms of thinking, diverse perspectives, not just as an afterthought, which is how most people approach diversity. I would say 99 percent of diversity initiatives are total BS, right?

They’re just checking the box and like, “Oh, we have a woman over there. We have a Black person over there. We have a Hispanic over there. This is our diversity and inclusion month,” like, whatever. No, I don’t like any of that stuff. I’m saying we don’t have to have all of these diversity inclusion efforts.

I’m saying something really disruptive now. What we need to do is make it integral to who makes decisions and how you actually make them, not after the fact, not on the side, right? It needs to be understood that having a diverse perspective, having diverse leadership, having a diverse workforce produces better results.

And it is imperative when it comes to data for this one simple reason. Data builds upon itself. So, the more we have a company that has data models, it’s going to continue to build and build on that. Just like that child we talked about. If you teach this child a certain way, the more you teach a child bad habits and bad exposure, when they become an adult, it’s very hard to change that.

It’s the same thing with AI models and algorithm models. And this is why it’s so important to think about the data and how we input it, and how we monitor it and how we actually have transparency behind it.

[00:38:47] Helen Todd: Yeah. And I think it goes back to the mirror. Like these tools are just showing us who we are.

And I think from that perspective, it’s like, who do we want to be? And how do we want to shape this moving forward? How can we envision, you know, a better reflection of us instead of just the garbage in garbage out that we’ve been having. On the, you know, the regulatory side and trying to figure out the guardrails.

It’s interesting. And I’ve said this before on a few episodes, that what Meta did with their oversight board, I thought was actually interesting from a regulatory imaginative stance of, like, we’re going to get ahead of this and create our own board. Of course, it’s extremely problematic because it’s derivative of Facebook and Meta and more or less a child telling a parent how to parent them on only very specific things.

But, you know, international institutions, and OpenAI actually just announced something, a red teaming team, to try to figure out, you know, what are the guardrails for this? But again, problematic because, you know, it’s self-governance, and we’ve seen time and time again that that doesn’t work.

Just look at, you know, the social platforms, no regulation, their self-regulation, they always picked, you know, profits over people. And that’s kind of part of the hyper polarization of where we’re at today too.

[00:40:16] Rob Richardson: And they always will without setting the climate as such that, you know, it is the job of society to almost set, you know, morals and things like that.

Like, it is necessary to set what is acceptable and what’s not within our culture. And I’m not saying I have the answer. What I do know is that those conversations have to be had more and more. The tough conversations about what that looks like have to happen, because on the regulatory side, I mean, they’re generally just so lost because, you know, we’re, you know, figuring out just day to day, putting out fires versus looking at how do we prevent fires.

Right? And so we, and I say we as a society, get lost in, you know, the issues of the day. We find ways, I think, to divide, but these are issues that are going to affect everybody, that do affect everybody. But they get ignored because, you know, the issues that go to the top of the day, it’s our social media thing, right?

In terms of, like, it’s the new outrage of the day that keeps us, I think, almost distracted from the actual real opportunities to solve problems. But, you know, I’m still optimistic, but I’m also a pragmatic optimist who sees what’s going on. And it’s going to take some... we have to be willing to challenge ourselves to really figure out how we get past this.

For all the great things we’ve created in the United States of America, you know, I do think because we don’t always deal well with ourselves critically, we have trouble. So, you know, the first thing you learn when you go to counseling is that if you avoid a problem, if you avoid tackling a problem, it just makes it worse.

And so I think when it comes to bias in this country, when it comes to racism in this country, when it comes to the fundamental things that have still challenged the country, people don’t like having the conversation. They think if we just move past and never talk about it again and pretend like it’s never an issue, then we’ll get past it.

No, it just makes it worse, because then you just bury it. And then you don’t actually address it. So that’s why I say, when we have these conversations about diversity and inclusion, and they’re seen as a side effort versus an integral part of how you make decisions, it doesn’t change things.

[00:42:24] Helen Todd: You know, one thing that another guest on the show, Gerfried Stocker pointed out also on the, you know, how do we want to envision a better world from a regulatory sense, just in general.

And one of the things that he said, which has stuck with me too, is that it’s also a very Western thing to think that we have all the answers. So, when we talk about, you know, diversity of thought and lived experiences that it can’t just be a Western perspective, you know, saying that we have the answers.

So, every time these come up, his voice in my head from that interview keeps popping up. We talked about, like, AI for good, and you’ve kind of talked about some different principles throughout our conversation today, about autonomy and whatnot. But what does AI for good mean to you?

[00:43:20] Rob Richardson: AI for good means that for everything we were building, we understand why we’re building it.

We’re transparent about how it’s being built. And I have no problem. And I believe in capitalism with regulations. But we have to understand, like, what’s the purpose of building this? How are we building it? And how will we have some sense of how this will be used to better society beyond just making profit?

Right. Because, you know, to this point, almost every technology, even when it’s lost jobs, has replaced them with other jobs. Right. But AI is one of the few technologies that I categorize as a general purpose technology. You know, there’s been very few. The Internet was another one and there might be one other, but most technology is specific purpose, which means, you know, take the steamboat, right?

It really helped us improve transportation in some ways. Or take, you know, when the Luddites tried to go against things being manufactured, and it just changed the fabric industry in terms of how fabrics were made. That’s one thing, but AI affects everything from healthcare to military to you name it…

There’s not something that AI doesn’t affect. And the truth is AI can be applied in such a way that you don’t actually need humans, in some ways. Like, I guarantee you AI can make music and you will not be able to tell that a human didn’t make it. Do we still think we should protect humans and have creators have a process to be a part of it?

I do, right. I think we have to think about those things. How do we want the human experience to be? Where do we want the human experience to be a part of this? That has to be a part of when we think about AI for good. What do we want the workforce to look like and how should we consider that?

I don’t think we should have the perspective of, well, you know, we’ll just see what happens and there’ll be other jobs for people there. I actually think in this case that’s less likely to be true, unless we’re intentional about how we think about this. There are whole industries that could go away very quickly.

And that may be okay, but then the thought is, like, how are we thinking about how AI can then help us improve the lives of those people who are being replaced? Not just how much money can be made for those at the top. AI for good means decentralization of ownership and application of that.

Right? So, it means that there shouldn’t be super algorithms that are owned by a few and that others don’t have actual access to. And so I think it’s going to be more important than ever to figure out how algorithms are used, who gets to use them, and how we all really can understand what’s happening behind the box.

If we don’t have transparency, if we don’t have thoughtfulness about how it applies to the human experience, then the only thing that will apply is how much money we can make and how fast. If that becomes the only calculus, AI could do more damage than good, more than prior technologies did.

I hope I’m wrong on that, by the way, but from what I see, I think at a minimum, without some thoughtfulness, at least for the short term, for maybe 30 or 40 years, we can see a massive disruption that causes a lot of problems. And then maybe we get more jobs on the other side. But even that is not good. So, I think we have to really think about, you know, what we’re building while we’re building it and how we’re going to make sure that it enhances the human experience.

[00:46:50] Helen Todd: I love that. And it goes back to something that you were saying, just the intentionality, and we don’t really know the intentionality behind a lot of the tools that are out there, aside from the fact that they’re mostly from for-profit companies, which is also kind of problematic, and what their motivations may be as well.

[00:47:09] Rob Richardson: Right. Again, it’s going to be profit. There’s nothing wrong with that. Right? The thing is, there also has to be consideration of the public interest and good, just like we had this same dynamic during the industrial revolution, right?

It’s why we have child labor laws. It’s why we have laws around, you know, protecting the environment. You know, it’s why we have the 40 hour work week, because, you know, given no regulation, the standard would have been: let’s work people till they drop.

That’s what happens. I know companies will say they want to look out for the good, but what happens is you also go up against competitors that will then price you out of the market. If there’s no standard set by policy, you’re almost driven to do that, right? So that’s the reason why, you know, policy does matter, but we have to get it right.

[00:47:58] Helen Todd: Yeah. We’re such incentive driven creatures. But I do think, you know, we’re at this pivotal point and really, you know, you mentioned earlier that social media keeps us so distracted and all of this stuff. And I know the AI news is, you know, so rapid, but I invite everyone to like take a beat and actually think about, you know, how do you want to envision a better future and how do you want this tech to add value and not take away value?

[00:48:29] Rob Richardson: Yes, because we can’t opt out. We can’t opt out. One thing I want to say very quickly on this, because you made me think of something.

There are people that are listening to us right now who say, well, I don’t want any part of this, right? I don’t want to do it. But listen, the technology is good. It’s going to come. So I encourage everybody listening to actually learn about AI. It’s not nearly as complicated as people make it out to be. Learn how to use some tools to make your life more efficient, for sure.

Like if you’re a content creator, like many on the show may be, and you like to reproduce clips, you should be using either, I think it’s Opus Clip or vidyo.ai. They will save you so much time and effort in video editing. Do that, learn that. If you do copywriting, you should be using AI to help you write faster.

I think schools should be adopting AI within their teaching, but again, it’s not about replacing the human thought process. We still teach kids, even harder now: okay, you now need to make sure you understand how to think about things at a level of complexity, because now, in order to do AI right, you have to explain things differently and understand it actually at a higher level. Otherwise you get garbage out.

We can do things with kids. Like, you know, I’ve seen an interesting idea where people have done AI rap battles, where they’ve had kids come up and rap and then they have the computer kind of rap battle against them. But what you’re teaching kids is, okay, you can put stuff into AI, but you still have to understand how to come up with basic structures, how to critically think, how to frame things, because if you don’t frame it right and understand how to critically think, you won’t get a good output from the algorithm.

So I’m not of the belief that we should be telling kids, “No, don’t use any ChatGPT.” I think that’s crazy. I think we should tell them: this is what you can get, but listen, as I show you, if I approach it this way, if I understand how to actually break up structures, if I understand how to think and frame a problem, I get a much better result than if I just try to put something out there.

And so we can teach our kids how to use these tools in a way that helps them actually see that, oh, I can use this knowledge to enhance what I can do. That will help them. So, I do want everybody listening to me to hear that you should embrace AI. So those who are skeptical about tools, you should embrace it. For those who are founders in the space, you have a responsibility to make sure that you are creating something that is making society better. And for policymakers, they have a responsibility to actually start learning these things on a fundamental basis and figuring out how we’re going to make sure that we set an environment that incentivizes us to become better, and for us not to simply just figure out how we can, you know, maximize profits quickly at the exclusion of people.

[00:51:26] Helen Todd: Yep. So, so well said. And one little anecdotal story: one of your high school volunteers at MidwestCon reached out to me. I guess he formed an AI high school club and wants to have me come and speak to his high school students.

[00:51:43] Rob Richardson: We got to get him involved too.

We got to get him back involved. Polish. Yeah. He’s a great guy.

[00:51:48] Helen Todd: Yeah. Yeah. That’s his name. Polish. Yeah. And I mean, Harry said this on the very first episode, just to echo your point or punctuate your point about embracing AI: it’s intelligence on tap. And if you don’t embrace it, there’s going to be an intelligence divide.

It’s almost like a lot of people in the U.S. don’t even know how many people don’t have high speed internet, you know. It’s like not embracing internet and not giving people access to this next wave of information and intelligence. And I guess the only other thing I wanted to say related to policy is, you know, there’s a lot of debate over the best approach, and I know I get into conversations with my friends about regulation versus watchdogs and all of this. But I don’t think that there are adequate watchdogs or, you know, other institutions that exist, and there are some conversations about creating new ones.

But I did want to mention watchdogs, that there’s not, you know, one silver bullet answer that will cover all of what we need either.

[00:52:51] Rob Richardson: I mean, watchdogs are good, but generally how do they get funded? They probably get funded by the people that they’re watching, which becomes part of the problem, right?

And people say it’s not a problem, but it’s always going to be a problem because we’re all human. And so where you’re going to need policies is where you’re going to need somebody that is at least somewhat removed from the entities that have to be monitored. Now the entities on the other side would say, we don’t need any of that.

That’s going to get in the way of innovation. I hear them. And overstepping, that can be true. But right now in this space, I do think we don’t have enough. I think without some clarity, you know, it’s interesting on the blockchain side. Everybody agrees that right now we need to have clarity, because if we don’t, then the government just tries to enforce things on its own, which is also not good. On the AI side, I think people are like, well, you know, let’s see what happens, in terms of people that are founders in the industry. But there’s going to need to be some type of policy involved at some point.

And I think right now the best approach, if we’re going to have a watchdog industry, is that founders and others ought to create what we think are the most important points that need to be incorporated into a policy, right? I think people often do these things just to say, well, we don’t need policy because we have this, and that to me is just disingenuous, right? I think the better approach is, we know policy is needed.

We think if you’re going to make sure a policy is done right, that doesn’t kill innovation, these are the factors you need to consider. And then there’ll be some back and forth there with the industry. But taking that approach gets us ahead of the game versus when we have an incident (and we’re going to have an incident around AI and things like that, it’s going to happen, right?). I guarantee it. We’re going to have some things, some national security, all those things are going to center around something with cyber, something with digital, and probably something with AI. Before those things happen, how are we thinking about preventing the fire versus trying to put a fire out? It’s much different, much more difficult to put out a fire than it is to try to prevent it.

So I would like us to be in this stage of, okay, from an industry perspective, what are the things we want to see incorporated? What are some things we think don’t need to be incorporated? I think you have that and start having those conversations, versus saying we don’t need policy or regulation, because we absolutely do.

[00:55:13] Helen Todd: And I hear this all the time from the tech community and founders, kind of what you said, that policy stifles innovation. It’s also like this American exceptionalism of, like, why so many tech companies come out of the U.S. and why we’re such a global leader.

And that if we do anything, it will mess up this magic formula that we found that makes these, like, trillion dollar companies. Like, what’s the most pointed way, or an example, that shows that policy doesn’t stifle innovation? Because I do hear this come up over and over. Even our European friends sometimes are like, we wish we had less regulation.

You know, some of those come up every once in a while when they look to the US as a leader in a lot of these companies.

[00:56:02] Rob Richardson: Well, let’s think about what got us here. How do we build…How did we build such a massive empire in terms of creating the infrastructure that we have for the internet? How did we create so many multinational corporations?

Did we do that in an era of zero regulation? No, in fact, a lot of that came out of the period after the Great Depression, during FDR, right? I think it’s also important to get our facts and history straight, right? Because I think America has done some exceptional things, but timing also matters too, right?

So during World War II, we had all of Europe in the middle of a war and a battle that really set them behind. You had Japan as a part of that too. And then you had, you know, China had made a strategic decision to not really innovate. They’ve, of course, since changed course. So, what I would say is that we during that time had a lot of regulation.

We had, you know, strong unions. We had progressive taxes. Actually it was probably too progressive for even our ability to consume it right now. It was actually a 90 percent marginal tax rate. But during that time, that’s how we created the infrastructure for the Internet.

We created, at that time, the largest middle class in the history of the entire world, still to this point. All during this time, we created a great infrastructure that connected us. And so part of what created innovation was the ability for people to do business, but also part of it was the ability for us to be able to connect to each other: the infrastructure, the Internet. We built that with heavy government investment.

And people ignore that stuff. That is a fact, right? So what a government can do is help create the infrastructure and climate, and the innovators then take that and create. But everything that an innovator creates, most of it is from an infrastructure where there’s an actual stable government.

So I say all that to say — yes, we built trillion dollar companies and all that stuff too recently. But what has gone down is the quality of workers, the amount of pay that workers have. If you look at the big tech three now, they have significantly less jobs, like by hundreds of thousands than GM, Ford and Chrysler when they were considered the big three at that time, hundreds of thousands of less jobs that are created than at Kodak.

So we’ve created these things, but the level of income inequality that we’ve created has been exacerbated too. AI is going to take all that and take that to the moon. So we have to say, is that going to be a good thing? The answer is, I think it’s horrible for business because then you’ll have a climate where people will be responding negatively to innovation.

And then we can get something that could take us all the way back. Because what people fail to understand is that without a stable government, innovation fails. Otherwise, Afghanistan would be killing innovation because they have no government. Done right, a stable government promotes innovation, not the other way around.

Innovation does not promote a stable government. If so, I want people to give me one example of a place where there’s a lack of a stable government where people are just killing innovation. I’ll wait.

[00:59:03] Helen Todd: Very well said. I’m going to repeat that. And I mean, I’ve said many times on the show that I’m pro regulation and hopefully our listeners or watchers, if you’re not yet, you’re pro-regulation now.