Ep29. A.I. Music Videos & Movies: Move Over Celebrities! A.I.-Generated Characters & Creators Have Arrived with A.I. Artist & Full Stack Creator Ben Nash

On this episode of Creativity Squared, we delve into A.I. filmmaking with Full Stack Creator Ben Nash, a multifaceted creator whose expertise spans digital products, websites, fabricated signage, art, music, and video content. Ben worked primarily in web design and development until last year, when his professional narrative took a more creative turn toward music video production with the help of artificial intelligence.

In our conversation, Ben talks about his collaborations with musicians on A.I. music videos, how he sees creators of A.I.-generated characters emerging as the next celebrities, and his thoughts on the future of immersive video. We discuss his creative process, the extensive toolbox of platforms and apps that he fuses together to bring his A.I. art and music videos to life, and the democratization of creativity.

Meet Ben Nash

Ben is a Full Stack Creator based in Cincinnati, OH, who marries design, code, and artistry to craft digital products, websites, fabricated signage, art, music, and video content. Born in Ohio, raised in North Carolina, and inspired by the innovative spirits of San Francisco and Austin, Ben credits his diverse background with fueling his creative endeavors.

An alumnus of NCSU’s School of Design, Ben started out in Industrial Design. His career trajectory took him from the multidisciplinary design firm Bolt Group in Charlotte, NC, to freelance opportunities on the West Coast, eventually leading to a passion for web design and development in the early 2000s. Ben’s career includes design roles with clients like Frog and VMware.

Ben was nominated for a Webby award for his work on a financial educational website. Ben is also an inventor — he holds four patents, including a utility patent for a novel cup holder designed for a major beverage company.

Ben is an expert frontend developer, UX designer, and an accomplished artist using A.I. tools, embodying his mantras: “Form Follows Function, Add Style” and “Thinking Big and Iterating Daily.”

Beyond his development work, Ben contributes financially to open-source web development tools and enjoys participating in Cincinnati’s burgeoning A.I. scene. He’s also an organizer in the A.I. art scene online, hosting community discussions on X and other platforms.

Create, create, create

“About this time last year, I did my first animated video using A.I. art assets, and I was blown away. The first one probably took me seven, eight hours to do 30 seconds worth or a minute. And I really loved it. I thought man, this has got some legs. I could take this somewhere.”

Ben Nash

Inspired by a desire to move beyond static images and what he playfully dubs “slideshow animations,” Ben embarked on a 30-day challenge to explore animated video. What began as a personal challenge evolved into a prolific run of daily content creation. Initially committed to sharing at least one 30-second video each day, Ben found himself hooked on the creative process, and the 30-day challenge turned into a year-long commitment. Now, he uploads up to 60 minutes of content a week to his YouTube and X channels, and considers himself an influencer in the space, with a growing online community.

“That’s one of my mantras — you create as much as you can because it’ll help not only you personally, but to publish it, will help you professionally.”

Ben Nash

His philosophy revolves around the belief that consistent content creation contributes not only to personal growth but also to professional recognition. Drawing from his firsthand experience, he emphasizes the organic growth that occurs as you share more of your creations.

“It’s about establishing a reputation through the continuous generation of valuable content.”

Ben Nash

Throughout the conversation, Ben stresses how simple it is to get started: just dive in. He encourages others to embark on their own artistic exploration. Reflecting on his own 30-day challenge and the success and motivation it has given him, he offers this advice:

“Do one thing every day, create something, and publish it. It doesn’t have to be perfect or lengthy; it can be a short snippet. That’s how you learn — so create, create, create.”

Ben Nash

A.I. Music Artist Inspirations

In the evolving world of generative A.I. art and video animation, we discussed other artists who, alongside Ben, are shaping the industry as it develops. Ben mentioned two that are worth keeping on our radars.

The first, Dave Villalva, spent the majority of his adult life in corporate America until A.I. beckoned him away from his traditional office job. Despite a career focused on travel and sales, Dave made the leap to dedicate himself to A.I. full-time and is, according to Ben, “really pushing the forefront of storytelling, and his artwork is amazing.” He’s making a splash in the space by hosting X Spaces throughout the week, interviewing other filmmakers and A.I. artists, and providing resources to members of the community. Ben says he gets inspired by the sheer volume of content Dave creates (he’s produced 20,000 four-second videos) and by the techniques he discovers daily and shares openly with other creators.

Another noteworthy figure in this creative realm is a close friend of Ben’s, Chet Bliss, who’s renowned for his volume of work in A.I. image generation and is now venturing into the world of video. A dedicated user of Midjourney, Chet is also exploring Stable Diffusion and has created 180,000 images in the past year. Taking on Ben’s challenge to produce one video a day, he is a prime example of Ben’s mantra that growth and exposure are achievable through consistent creating and publishing.

Ben also highlighted Sway Molina, an ex-actor and one of the organizers of the Terminator 2 parody remake, who is now using A.I. tools to be one of the first to create elaborate and visually consistent A.I.-generated video series. Sway is also unique in creating different versions of himself in his artwork.

Terminator 2 Parody Remake

Ben is one of 50 creatives who are currently getting ready to release what may become the longest A.I.-generated film ever produced, a two-hour parody remake of the iconic Terminator 2 movie.

After their selection from a pool of dozens of A.I. filmmakers, each artist undertook the challenge of producing 2-3 segments from the original film within a tight 10-day timeframe.

“The kickoff meeting to this project was one of the most magical moments I’ve ever had online.”

Ben Nash

While some predicted we would see full-length feature films of this kind by now, this Terminator 2 project will likely be one of the first “super long” videos to come out, Ben explained. From here, the hope is that it paves the way for similar projects.

Reflecting on the creative process, Ben shared the intricacies of his work and the time and energy put into this project, particularly his own task of replicating a 42-second, one-take scene from the original movie. Instead of using a single long JPG image that extends in either direction as the scene background, he challenged himself with a much more involved technique: building the background from a continuous set of videos that scroll seamlessly from left to right. Ben jokes about how, despite the productivity promises of A.I., he probably spent more time creating his scene than the crew spent on the original movie. Ben’s rule of thumb is one hour of work for one minute of content, but he set different standards for himself for this project.

“I believe speed is one of my goals. But for this two-and-a-half-minute clip for the Terminator movie, I spent over 40 hours on it. It pushed me beyond my limits and in different tools, in areas that I’ve not ever used before.”

Ben Nash

For his second scene, Ben employed a tactic known as “rack focusing,” which he borrowed, like many other techniques, from traditional filmmaking. Rack focusing is when the focus shifts from the foreground to the background, or vice versa, without any camera movement. His use of the technique came out so well that it made this scene worthy of being featured in the trailer for the remake.

“Definitely a historic and pioneering project for this field. And I’m just so happy and honored to be a part of it.”

Ben Nash

Saying Goodbye to Traditional Visual Music as We Know It?

One of Ben’s primary passions is collaborating with bands and musicians on their music videos. He thinks A.I. presents a huge opportunity for musicians to make their own music videos and take advantage of the leverage that comes with viral videos and content on platforms such as YouTube and X. He believes that those who resist growing and developing with A.I. will regret not learning about the new tools at their disposal early on.

“Traditional musicians who aren’t publishing themselves are going to be left behind with other people getting into music today.”

Ben Nash

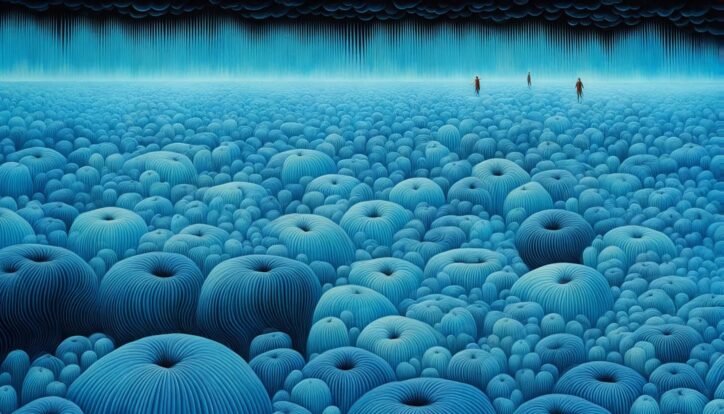

He uses his recent work with Brazilian musician DevNull as an example. Their collaboration pushed Ben to create 12 music videos using one of his favorite A.I. video tools, Neural Frames. Ben says that exposure gained from the music videos has helped grow his career in the music industry. And beyond the benefits of exposure, Ben says that the videos help communicate more meaning to the audience, especially for instrumental tracks.

“Without lyrics, it’s often very hard to figure out what the song is about. But these songs are so emotional, every time I watch my own videos that I created for him, I honestly tear up because the music itself is so beautiful.”

Ben Nash

Scratching the Surface of The Process Behind the Videos

Ben has an extensive tech stack, using about six to ten different tools to complete a project, and is always experimenting with new tools. He discusses a few of the many tools he uses to make his magic, one of his favorites being Neural Frames, which is an A.I. animation generator. What sets it apart from most other A.I. video generators is that you can continuously insert prompts throughout the timeline of a track.

With Neural Frames, users can drill down and display the constituent parts of a track and then fine-tune the video animations to visually react to those different sounds, however dramatically or subtly they want. It really gives the user the full ability and creative control to tell their story throughout the video.
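
The audio-reactive idea described above can be illustrated with a short sketch. This is not Neural Frames' implementation or API, which the episode does not describe in detail; it only assumes that a separated stem (say, the drums) is available as a list of mono samples. The function names `rms_envelope` and `modulate`, the sample rate, the frame rate, and the `base` and `depth` values are all hypothetical choices for illustration.

```python
import math

def rms_envelope(samples, sample_rate, fps):
    """Return one RMS loudness value per video frame for a mono signal."""
    hop = sample_rate // fps  # number of audio samples covered by one video frame
    envelope = []
    for start in range(0, len(samples) - hop + 1, hop):
        window = samples[start:start + hop]
        envelope.append(math.sqrt(sum(s * s for s in window) / hop))
    return envelope

def modulate(envelope, base, depth):
    """Map each frame's loudness to a parameter value between base and base + depth."""
    peak = max(envelope, default=0.0)
    if peak == 0.0:
        # Silent (or empty) input: every frame keeps the base value.
        return [base for _ in envelope]
    return [base + depth * (value / peak) for value in envelope]

# Hypothetical stand-in for a drum stem: one second of silence, then one second of tone.
SAMPLE_RATE = 8000
FPS = 4
silence = [0.0] * SAMPLE_RATE
tone = [math.sin(2 * math.pi * 110 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
drum_stem = silence + tone

# One animation-strength value per video frame, driven by the stem's loudness.
strengths = modulate(rms_envelope(drum_stem, SAMPLE_RATE, FPS), base=0.2, depth=0.6)
```

In this sketch, frames that fall in the silent second stay at the base value, while frames in the loud second rise toward `base + depth`. A small `depth` gives the subtle reaction mentioned above, and a large one gives the dramatic version.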

“I pay attention to every second, I’m constantly adjusting. For one of those three-minute videos, for example, takes about eight hours of time. That’s how much effort I put into it.”

Ben Nash

Using his recent video “Al’s in the Mix from Mac Lives” as an example, Ben explains more of the high-level creative process to get to the finished product. He’ll use ChatGPT as a resource to generate the initial lyrics in some projects, then paste those lyrics into Soma to generate audio. Out of several dozen pieces of generated audio, he’ll compile the best ones in Adobe’s audio editor and splice them together into one track. Ben generates the video elements with Moon Valley and then modulates them with Neural Frames. After hours of rewrites and tweaking, Ben puts it all together with captions and text animations in CapCut.

When it comes to prompts, Ben describes his as ambiguous and abstract, yet detailed. He shares one from a video he made with DevNull: “nostalgia layered composition, circular abstraction, detailed characters.” Although the words may sound like a crypto wallet passphrase, Ben says the verbiage is key to the output.

“When you say ‘in the style, graceful balance,’ that really does mean something to these language models, and it does pull out a specific style. So it’s the abstract elements you can still control quite a bit of; it’s not just throwing something at the wall and seeing what comes back.”

Ben Nash

As A.I. video technology evolves, Ben says he expects to see more consolidation among critical tools.

What’s on the Horizon with A.I. Music Videos

Ben says he is hopeful for the day when multiple tools are seamlessly integrated into one comprehensive platform. While he acknowledges that there might not be a singular “perfect” tool for creating A.I. videos, he anticipates improved user interfaces that are faster and more user-friendly.

As far as what else is to come, Ben notes the current and upcoming effects these A.I. tools will have on traditional filmmaking. We’re seeing A.I. technology features in traditional video tools like Adobe Premiere that can detect your speech, immediately transcribe it, and then enable you to edit video by rearranging transcribed text instead of the actual video. Ben says this is a big progression for artists.

“I think storytelling and creating, what we think of as traditional film and television shows, is going to see a major breakthrough in the next year.”

Ben Nash

Ben also expects a significant shift in featured characters in video and film as text-to-video democratizes video production. He predicts an explosion of diverse characters and storylines that might not make it onto screens otherwise.

For the last prediction, Ben talked about his big hopes for Apple’s upcoming tools and what they’ll do for the industry, especially their Apple Vision Pro AR goggles.

“It’s just going to be a super game changer. There’s always been the dream of this type of technology, VR and AR. And I think Apple’s going to finally pull it off. I’m trying new tools every day and trying to stay on the forefront of that. And if I can create content for Apple Vision Pro, and I’m assuming I’ll be one of the first doing so. So hopefully, you’re going to be seeing my name in that space next year. It’s coming soon. I love it.”

Ben Nash

He says that he can’t wait to dive into what he expects to be the first viable AR/VR ecosystem.

Links Mentioned in this Podcast

- Follow Ben on X

- Subscribe to Ben’s YouTube channel

- Learn more about Neural Frames

- “Al’s in the Mix from Mac Lives” by Ben Nash video

- Follow Chet Bliss on X

- Connect with Sway Molina on X

- Follow Dave Villalva on X

- Check out Soma

- Adobe’s audio editor

- Learn more about Moon Valley

- Check out CapCut

- Adobe Premiere

- Apple’s Vision Pro AR goggles

- The T2 Remake Parody

Continue the Conversation

Thank you, Ben, for being our guest on Creativity Squared.

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency who understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 150 arts organizations, projects, and independent artists.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

[00:00:00] Ben Nash: I thought, man, all these musicians really need to start learning how to make their own videos for their music. So I put out an open call on X this summer, and I said that because two aspects of this. One was, this is an opportunity for all these musicians to create video. And then two, if they don’t… AI visual artists like myself who dabble in music are now dabbling in music creation and there’s all kinds of AI music generators out there that are getting better and better all the time so that traditional musicians who aren’t publishing themselves are going to be left behind with other people getting into music today, creating just as good quality tomorrow.

So I put out this open call. I said, listen, do it or reach out to me. And ever since then, people have been reaching out to me.

[00:00:46] Helen Todd: Ben Nash is a visionary full stack creator based in Cincinnati, Ohio, who marries design, code, and artistic flair to craft digital products, websites, fabricated signage, and AI art.

With an adventurous backpacking spirit and a degree in industrial design honed in innovative hubs like San Francisco and Austin, Ben’s diverse background fuels his creative work. As a Webby Award nominee, and with four patents to his name, Ben’s inventive genius is patent approved. He’s an expert front end developer and UX designer by day, and an artist by night, whose mantras include form follows function, add style, and thinking big and iterating daily.

As an accomplished AI artist, Ben contributes financially to open source web tools and hosts intellectual conversations at the intersection of technology and art online, and most notably on Twitter Spaces. Ben and I met through Cincinnati’s burgeoning AI scene at the CINCI AI Meetup for Humans that I co-host with Kendra Ramirez at the UC Digital Futures building, fueling his next creative and career trajectory.

I was impressed with Ben’s prolific work and AI art challenges that he gives himself. He’s also part of the upcoming AI collaboration to recreate Terminator 2 as a parody. He’s one of 50 artists who want to push the limits and showcase what is possible using the latest AI tools, which you’ll learn more about in our conversation.

In today’s episode, discover how Ben collaborates with musicians on AI music videos, how he sees AI generated characters emerging as the next celebrities, and his thoughts on the future of immersive video with Apple Vision Pro. We dive more into his Dev/Null project, which pushed Ben to create 12 music videos using one of his favorite AI video tools, Neural Frames.

Our conversation includes other AI tools that he uses and is experimenting with, plus Ben’s creative process and the democratization of creativity. Enjoy.

Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox, on YouTube, and on your preferred podcast platform. Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space.

The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Ben, welcome to Creativity Squared. It is so great to have you on the show.

[00:03:52] Ben Nash: Thanks. Thanks, Helen. Thanks for having me. Appreciate it.

[00:03:54] Helen Todd: Well, I heard your name a lot. Kendra Ramirez, as soon as I told her about my show, she’s like, you have to meet Ben. He’s amazing. But for those who are meeting you for the first time, can you tell us who you are and your origin story?

[00:04:07] Ben Nash: Yeah, for sure. My name is Ben Nash, originally born in Ohio, not too far from Cincinnati, where we met, but grew up mostly in North Carolina, went to NC State School of Design, where I got my degree in industrial design, did that for a few years, but also studied computer science at school and did my first HTML web page back in 1993, if you can believe it.

So most of my career has been in web design and development, for most of the two thousands, where I lived out in California, both Northern California and Southern California, in L.A. and San Francisco, mostly, but also a few years in Lake Tahoe. And then besides web development and web design, I’ve had some career in fabrication, manufacturing.

I had a sign company when I lived in Texas for seven years, where I still used digital tools such as CNC routers, plasma cutters and laser cutters to make, you know, again, digital designs turned into reality. And then recently, you know, with the AI, I definitely combined all those skills into what seemed to be the perfect, you know, perfect avenue for all of my passions combined, you know, my use of digital tools to get back into more of an artistic realm.

So I started calling myself an AI artist last year when I got into Midjourney and other tools. And these days I’m doing a lot with video and music and consider myself now an influencer in that space. I’ve grown quite a bit online, especially Twitter, and working on my YouTube and... yeah, that’s basically who I am today.

[00:05:50] Helen Todd: Awesome. Well, it’s so great to have you on the show. And we met in person, in real life, at the Cincy AI meetup for humans, which was so wonderful just to see you, but also there’s such an emerging, burgeoning community here in Cincinnati. And one of the things that you shared is that you gave yourself a 30 day challenge.

And was that kind of the thing that kicked you off on this path?

[00:06:15] Ben Nash: Yeah, for sure. You know, again, I got into Midjourney last summer, I think, is when it was really, and I wanted to do more than just static images. And so back then I called them slides, slideshow animations, where you’re just showing one image, another, and adding music to the background. But yeah, it was about this time last year, end of October, that I did my first video, animated video, using AI art assets.

And I was blown away. The first one probably took me seven, eight hours to do 30 seconds worth or a minute, and I really loved it. And I thought, man, this has got some legs. I could take this somewhere. I did study a bit of animation back in college back in the nineties and did a lot with After Effects back then.

So I had a little bit of a background with that, but did not touch that for almost two decades. So, I was really excited to get back into that realm. And so, yeah, again, that first, those first few took quite a bit of work and I thought this is, this, I have to get good at this.

So, I gave myself a 30 day challenge where every day I would publish at least once, one video, you know, as minimum of 30 seconds was my goal. Closer to a minute is what I wanted. And man, I have not stopped. I’ve gotten up to maybe sometimes 60 minutes a week, I’m publishing…which is crazy. And that’s published video, 60 minutes worth. You know, some weeks aren’t as prolific, but some have been more.

And so that 30 days is now turned into a year and I haven’t stopped. I’m going to keep going.

[00:07:51] Helen Todd: Well, congratulations. Even with these AI tools, how prolific you are is extremely impressive. And within the AI art that you delve into, it seems like the real passion lies in AI music videos.

So can you tell us a little bit more about your interest and how you got into AI music videos specifically?

[00:08:17] Ben Nash: Yeah, I mean, the one, you know, secret is it’s actually easier than telling a story sometimes. I mean, music videos can and should have a story, to be honest.

But, you know, with AI art to begin with not being as, you know, especially last year, not being real film quality, a lot of the results can be pretty artistic looking. So, music videos seem to, you know, lend themselves well towards that, where it can just be a bunch of artistic looking abstract images thrown together. So that’s the secret there. Like, you know, they’re actually easier than telling, you know, a five minute story sometimes.

Yeah. The other aspect of this is, you know, I grew up in a musical family. My mother taught piano and I learned for most of my youth how to, and then, you know, tried to learn guitar for the past 30 years and failed, always. So that was another aspect of this: I also want to make music myself and make my own music videos, whether it be from rock to rap to anything.

I, you know, I definitely want to be, my generation, I’m a Gen X person, so I would love to see myself in an MTV style video. So, I want to be a rock star. So I’m aiming towards that, but at the same time, yeah, I love working with other musicians, you know, especially people who have, you know, have a lot, are better than me at making music.

So I’ve now made a name for myself in that space where even this morning, right before this call, I got another band reach out to me from Sweden. I seem to get a lot from that part of the world reaching out. So I’m working on about five different videos right now for different bands, all that reached out to me in the past few weeks.

So it’s yeah, I love working with musicians, making their videos come to life.

[00:10:04] Helen Todd: Oh, and one that is really fascinating, I watched it last night ahead of the interview and it was just really mind blowing. And I know for some of our listeners, especially just the audio, that you can’t see it, but we’ll embed all these music videos onto the dedicated episode blog post and link to Ben’s YouTube channel.

So you can see this. But it’s Dev/Null, so tell us how you came to work with them and then about the project itself.

[00:10:34] Ben Nash: Yeah. So, just to clarify too, there are two musicians in the world that go by the name Dev/Null. Again, I think one of them is also in Sweden, but the other one is in Brazil, and it’s the Brazilian person I’m working with. And this summer, you know, again, as someone who dabbles in music but making music videos, I realized there’s a huge opportunity here for me, for musicians to be making their own videos.

And just like any creative person, musicians seem to record a lot, edit a lot, maybe not finish a project, have hard drives full of music sitting there, unpublished, you know, unreachable. And so I realized too, for their sake, you know, to push their music out, platforms like YouTube and X are definitely going to get more reach than something potentially like creating a deal with a label and getting on Spotify.

So I thought, man, all these musicians really need to start learning how to make their own videos for the music. So I put out an open call on X this summer. And I said that because two aspects of this. One was, this is an opportunity for all these musicians to create video. And then two, if they don’t... AI visual artists like myself, who dabble in music, are now dabbling in music creation.

And there’s all kinds of AI music generators out there that are getting better and better all the time, so that traditional musicians who aren’t publishing themselves are going to be left behind with other people getting into music today, creating just as good quality tomorrow.

So I put out this open call. I said, listen, do it or reach out to me. And ever since then people have been reaching out to me. So, this guy in Brazil, Dev/Null, reached out and said, hey, I fit your profile exactly. I have dozens and dozens of tracks, a couple of albums worth, that are just sitting here. I’m passionate about them, but I, you know, I don’t know what to do.

So he shared a private website he made for me to, for us to collaborate on. And his album has about 21 tracks and I fell in love with his music. It’s mostly electronic music dance music, but also goes into different realms of jazz and even some classical parts. So I love the album and just fell in love with working with him too.

So, creative people can be across the spectrum of like, here... I recognize your talent, take it over, do whatever you want, or they can be completely control freaks and want to direct the whole process. But Dev/Null in Brazil was really open. He liked the work of mine that he saw. He said, just go for it.

So with him, with several of the tracks, I asked him more about each song. You know, is there a personal story behind them? Some of them he was really passionate about and gave a personal story behind it. And that really helped direct where the story would go. But some of them he said, no, this one’s up to you completely.

I’d love to see your take on it, which was nice to have. And then the whole album has an overarching story about personal growth, a finding-themselves-in-life story. So I did about 12 videos within two weeks for him and that album. Through those videos, that helped expose him and me to more opportunities.

So it was a really good project. And in particular with those videos, I used a software package, another AI art tool, called Neural Frames. Neural Frames was just released a few months before that, if not weeks before that, and I’ve become one of the most prolific and most expert users of that platform.

And I talked to that developer almost weekly on Zoom calls to talk about new features and bugs and such... So it’s, again, I’m getting deep with this process and really enjoy it.

[00:14:23] Helen Todd: Yeah, and for our viewers who want to geek out about the more technical aspects of Ben’s work, I did watch the interview with the founder of Neural Frames, and you guys go really deep into the making of the series, which was really fascinating to watch.

But one of the things I thought was interesting in that interview was just kind of your creative process, especially with this band... or this gentleman who didn’t have lyrics to go with it. So can you kind of walk us through, especially cause you’re so fast and do so much, like how you approach all of these projects from a creative aspect?

[00:15:02] Ben Nash: Well, again, with him, I wanted to know more what these songs mean to him. He did have some, some titles that definitely caught my attention for each track. And I said, oh, there’s gotta be a story behind here. And yeah, correct. Without lyrics it’s often very hard to figure out what the song is about, but these songs are so emotional.

I think I mentioned to you this morning that every time I watch my own videos that I created for him, I honestly tear up because the music itself is so beautiful. And I think in most of those tracks, I captured it visually. So the application, Neural Frames, what sets it apart from most, any other AI video generator, is you can continuously insert prompts throughout the timeline of a track, and you upload an image to begin with.

And now you can upload images throughout, but you also upload the music track, and then the software will separate that music track out into its parts and pieces, such as, like, the drums and the voice and other, you know, bassline and such, and I can use controls within the application to visually react to those different sounds.

So it creates for a very audio reactive video, and you can get dramatic with it or subtle. And every second or every frame of those videos, there’s probably five or six different controls that are reacting to different aspects of the music. And so with that, I can somewhat tell a story throughout the video.

And again, the overarching theme of his album was about growth through life. So the one character or characters that I made sure were in all the videos are these little tiny black figurines, just a silhouette of people as they travel throughout these visual landscapes. So it’s trying to show them go through growth challenges, again, in an abstract way, to try to fit that overall theme of the album.

And then, yeah, there’s so many subtle aspects that it would take forever to go through each song and visually point out this is what’s going on here, but I do pay attention to every second and it’s not a set it and forget it routine. I’m constantly adjusting throughout. One of those three minute videos, for example, takes about eight hours of time if I put that much effort into it.

[00:17:25] Helen Todd: And one of the things that also stood out to me, well, I guess, one, the creator of this tool was so impressed with what you were able to do using Neural Frames. So that was really good to see. And how you described your own artwork, you would talk so much about, like, loving the texture of it.

And when I heard that, in my mind, it was like your fabricator background was actually coming out and coming through your AI art. And so I was, like, curious how much, because I’ve said this before on the show, like, subject matter expertise often influences the AI art, and everyone’s individualistic style also influences it.

But I was like really curious how you see like the tactile and haptic aspects of your background really coming through in the music videos and the videos that you’re creating.

[00:18:15] Ben Nash: I’m glad you brought that up, Helen. I haven’t really put that together myself, but yeah, absolutely. You know, my degree is in industrial design, and that covers aspects from studying art, to drawing, to the psychology of design and emotional responses to products, to engineering, to manufacturing. And one of the aspects that, even in school, often gets overlooked is color and texture.

And that is such an important aspect of design in general. And to me, what’s new, that I only recently discovered, again, something that maybe was overlooked in college, is the other part of these videos: the audio aspect. Again, whether it’s a musical track or if I’m doing a short story with, you know, people talking, music and audio is, I like to say, the unsung hero of video.

It’s so important. You know, a great example is movie trailers. If you watch a movie trailer with the volume off, it’s not even one-tenth as impactful. It’s all those, you know, swooshes and booms and bangs that make the trailer so emotionally fun. But then, you know, taking a musician who knows how to add texture to his music, and then trying to visualize that is, you know, just such an opportunity, I guess would be the way to put it.

And one additional element to Neural Frames and specifically to this Dev/Null album is I was using Neural Frames again for a few months or many weeks before I met Dev/Null and right when I did meet him, Neural Frames added a whole new language model to Neural Frames, one that was, at least this summer, an open source one called Stable Diffusion XL and Stable Diffusion XL is incredibly detailed, especially the texture. So yeah, I, in my prompts, half the prompts are describing the texture of the scene. And I mean, you know, grid like neural wires, covering rough fabric surfaces.

I think fabric was in a lot of those prompts. So yeah, SDXL is very responsive to my prompting.

[00:20:37] Helen Todd: That was one thing I was going to ask: if you could share an example of one of your prompts, just because your aesthetic is so specific and really impressive, and I’m sure everyone’s curious what, you know, everyone else’s prompts are.

[00:20:53] Ben Nash: Yeah, for sure. Give me a little bit. Let’s keep talking and I’ll pull up an actual Neural Frames video here and see what some of those prompts are.

[00:21:02] Helen Todd: And how, and in one of your interviews that I watched, you said that you’re always trying to move your style forward. How would you describe your style if you could now or where you aspire for it to be?

[00:21:15] Ben Nash: Well, for most of my 2000s online, I was very prolific about politics and all that, and very combative. And so I’m trying now a whole new approach to social media and giving uplifting, positive messages. So that’s a part of it too. I still dabble in stirring the pot quite a bit.

Especially now, I’m staying away from politics as much as possible, but even just in the AI art community, I do like to get passionate about certain things, especially when I see people not try as hard as they should, because this is the opportunity of a lifetime.

So yeah, with my style and approach to my content, again, I do try to be inspiring in a good way. Sometimes, again, there will be some negative aspects to it. I recently did a video using Neural Frames about terrorism in the world, so that had some R-rated language in it. But yeah, I do try to keep continuously moving forward, not only with tools, but with my content.

I’m definitely trying to do more storytelling and traditional, you know, film in that regard, and then actually bringing storytelling over into the music video realm instead of just these abstract scenes like a slideshow. But I did find one of the Dev/Null videos, and I’m looking at some of the prompts here.

And I’ll go ahead and read this. So this one starts out: A music poster with abstract shapes and textured elements in the style of graceful balance, high dynamic range, “duckcore” (now, I don’t remember where I got the term “duckcore”, but I’ll explain that in a sec), detailed character design, industrial influence, circular shapes…

So that’s just one of the many prompts that goes into that video. And then, for example, the next prompt is about half the same, but I add in Nostalgia, Layered Composition, Circular Abstraction, Detailed Characters. You know, very ambiguous terms sometimes, but when you do say “in the style of graceful balance,” that really does mean something to these language models, and it does pull out a specific style. And then, you know, with circular shapes, obviously, now it’s going to throw in circles. So you can still control quite a bit of the abstract elements.

It’s not just throwing something at the wall and seeing what comes back. Here’s some more in that same video. Black and orange stylized artwork of electronic dance music in the style of techno organic fusion, again, circular shapes, sepia tone, dance, colorful, eye catching compositions, and again, graceful balance seems to be a theme with this video.

So those are, you know, very abstract terms that actually mean stuff. You don’t have to describe, you know, a hip-hop artist walking down the sidewalk, which I did last night. You can get really detailed with just, you know, words that mean things, about texture and shapes and colors.

[00:24:22] Helen Todd: For all of our listeners who are, you know, imagining what the output of these prompts is, I guarantee that when you go and see the actual videos, your mind will be blown, because whatever you’re thinking, the output is just so impressive. So again, we’ll be sure to link and embed all these in the dedicated episode show notes. So thank you for sharing your prompts. And you said that you were going to get back to the, what was it, the duck thing? How you came to that.

[00:24:50] Ben Nash: Yeah. So a lot of times, even in the videos, I’ll jump back. All my projects, you know, typically take six to tools to get to the finish line. So when trying to target a specific style or texture or look, I’ll use Midjourney. Even if I’m not using it for images, I’ll use Midjourney’s describe feature.

So Midjourney describe, if you don’t know what it is, is where you can paste an image into Midjourney and it’ll describe it back to you in text. And so often I’ll get weird words that I’ve never heard of before, like “duckcore”. And I don’t even remember what that means, but, you know, that’s where a lot of these terms come from.

It’s using, you know, tools like Midjourney to describe images and see what that image is described as.

[00:25:42] Helen Todd: Good to know about “duckcore.” We can all go down an internet rabbit hole. Well, the other thing that kind of stood out is your tech stack, and how you’re always testing new tools and how you cross-pollinate a bunch of tools.

Can you walk us through your creative process, from your tech stack to your approach to your projects? And some of your favorites? I know you’ve mentioned Neural Frames a bunch, but, you know, what you lean towards, for Midjourney, whether it’s that text tool or whatnot?

[00:26:17] Ben Nash: Yeah, I’ll talk about a completely new project that I did yesterday. Actually, over the past two days, and I published it last night.

It was a rap video, and this one used quite a few tools to get to the final form. First of all, I used ChatGPT to generate the lyrics. And I asked ChatGPT to generate the lyrics in the style of one of my favorite rap artists, Mac Miller.

Mac Miller had a distinct style in his voice, but also in his own lyrics. So I was blown away at how accurately ChatGPT mimicked his style. I didn’t even have to change the lyrics it gave me. So I took those lyrics, and then in this case, I used an audio tool called Suno.

Suno is a Discord-only application right now, where you can paste in a couple of lyrics, not the whole song, and it’ll generate music to those lyrics. And you can also prompt Suno for a specific style. So in this case I prompted Suno for a Mac Miller song. I think I said slow/funk/hip-hop was where I was going with that one.

And I got amazing results out of that. Now with Suno, it’ll only generate about, actually, I’m not sure, but let’s just say 20 seconds at a time. So it would not generate a complete song, and for each generation, it gives you two different options. And each one is not always perfect, or both of them can be bad. So I generated several dozen different parts for this potential song, pasting in about four lines of lyrics at a time.

And then I collected the ones that worked together. I edited those together in Adobe’s audio editor, what is it, Audition, and spliced those together as one continuous track. And this had, you know, three verses, it had two or three versions of the chorus, it had a bridge, it had the outro, and an intro as well.

So, traditional song composition there for that. And so now I have my lyrics, my rapper, the voice, and the music to go along with it. But we’re still just talking about audio. And then with this particular video, I wanted to animate text on the screen and then also have, you know, visuals behind it.

So for this video, I used a new AI text-to-video generator called Moonvalley, and Moonvalley is another one where, you know, you can just input a prompt. So I took the lyrics from the song, one line at a time, and put them into Moonvalley, and rewrote each line completely for each prompt, because they weren’t perfect as-is from the song.

And this one kind of did have a story to it visually. So someone wakes up in the morning, walks down the street, and then goes throughout their day. So I was trying to maintain that visual part of the video. And so from there I generated short animations; two, two and a half seconds, I think, is what comes out of Moonvalley.

And I did it in the style of anime, and so I have these cartoon two-and-a-half-second clips coming out of Moonvalley that, you know, are just video, no audio. And then I edited them all together in a video editor called CapCut. And from there, in CapCut, I put all the videos on top of the audio, and then I needed to animate text on top.

And to animate text on top, CapCut actually has a really good AI tool that will listen to your music and generate captions, but it doesn’t always work perfectly, especially with layered music. So I tried a few different approaches to that. I first had it, you know, try to generate the captions from the song, and it only got maybe about 60 to 70 percent, and about 90 percent of that was accurate.

Again, when I generated the voice and music, they were generated together with Suno. So I wanted to get a more accurate caption generator. So I used another tool. I just signed up for it yesterday and already forgot the name, but it’s an online tool that can separate out the voice from the music.

And so I used that tool, an AI tool that does that, and I got a voice track separated out from the music and brought that back into CapCut. And there I got about 95 percent of the text captions extracted, and that was 95 percent accurate. So now I have a timeline with the video, the music, and captions.

And the captions, though, are usually four or five words long. In this particular video, I wanted to animate one word at a time. And that took a majority of the time with this video. Everything I’ve talked about so far was about two hours of work. And then splitting up those captions into individual words was about three to four hours after that.

That was a lot longer than I wanted to put into it. But the results were amazing, because instead of just showing captions at the bottom of the video, these are animated in circular fashion and swooshes, and they really add a whole character element to the story. You can see that video on my YouTube and X channels.

It’s called AI In The Kix. So yeah, that’s a typical process, but not always typically those tools.

[00:31:56] Helen Todd: Oh, thank you for sharing. I haven’t seen that one, but I’m going to watch it right after we get off. And again, we’ll share the links and embed it in the dedicated episode blog post. And what’s fascinating to me about the text is, I know the AI tools have a really hard time with generating text. So it’s really fascinating that you found one that does it right, because I feel like most of them are still working out the kinks on how to deal with fonts and text within the image generation.

[00:32:25] Ben Nash: Yeah, I think in the example you’re talking about would be more if you wanted to generate an image with a sign on a building and the sign reads, you know, something accurately.

In this case, I was strictly going after, you know, please extract the raw text that I could then copy and paste and animate separately. But yeah, I guess that’s actually pretty similar. Yeah, I mean, it was literally the tool that is used for making closed captions. So that’s what I mean by extracting the text from the audio and placing it in the timeline with the audio.

So it’s yeah. It would make more sense.

[00:33:07] Helen Todd: Gotcha. Gotcha. My mind was somewhere else, but yes, that makes a lot more sense. Well, you’ve worked on some other really interesting projects, and one that you mentioned at our SNC AI meetup for humans is this Terminator 2 parody. So I was wondering if you can share with our listeners and viewers what this project is.

[00:33:29] Ben Nash: Yeah, for sure. I love this project. I’m blown away that it even happened. It’s still not public. I can’t wait. Actually, today we’re releasing our trailer for the whole project. So pay attention. It is amazing. It’s so funny and so good. And I can’t believe I’m a part of this project. So the project is several AI artists, video artists in particular. Two, Sway Molina and Nem Perez, organized this project, and it’s a parody remake of Terminator 2, the movie. It’s supposed to be a long, you know, close to two-hour-long remake of the film as a parody, and 50 different artists came together to do this project. The initial timeline was three to four weeks, and that was really, I think, only the production window.

Not everybody finished their production in that timeline, but most people did. And there were still a few slots available to finish out, and then miscellaneous parts like the trailers and different social media teasers. But each artist was to produce a two-to-three-minute segment from the original movie in a parody fashion and use any style that artist sees fit.

So the whole movie will jump from scene to scene with different styles. It’s modeled after a parody movie that came out about 10 years ago, RoboCop 2, or RoboCop, the parody remake, and that was with live action figures and probably some special effects, but this is now an AI version.

And the meeting, the kickoff meeting to this project was one of the most magical moments I’ve ever had online. It was a Zoom call with about 45 people. We all got to see each other’s faces for the first time and talk, and these are people that had signed up for the project, and not everybody that wanted to be a part of it was accepted.

It was definitely a selection process. And then from there, we actually had our own internal selection process for how we chose the scenes that we wanted to do, because there were a few scenes that many people wanted to do. And I was really happy I got my first choice of scene. And again, after that kickoff meeting, we had about a 10-day window to do our two-to-three-minute piece. And, you know, with these music videos, the Neural Frames examples, as I said, Neural Frames is very time-intensive for creating a video. Again, a three-minute Neural Frames music video will generally take me a whole day, about eight hours.

But when I’m having fun making videos, I can be as quick as one minute in one hour. You know, those are fun ones where I’m the client. I don’t have to be so critical of myself, and I just want to have fun with it. So I generally aim for one minute of video per hour; that’s my ultimate goal. Speed is one of my goals.

But for this two-and-a-half-minute clip for the Terminator movie, I spent over 40 hours on it. It pushed me beyond my limits and into different tools and areas that I’ve never used before. So it was definitely a challenge, and I had a lot of fun making it. I threw in some comedy, some inadvertent comedy. I will say this: the voice acting I used, you know, again using AI text-to-voice, was so bad, it’s so funny. So there is that element to it; a lot of this is not meant to be a perfect one-to-one replication of the film.

So, like I said, the trailer that one of the artists put together from all of our clips should be coming out today. And it’s hilarious. So it’s definitely a historic and pioneering project for this field. And I’m just so happy and honored to be a part of it. It’s a lot of fun as well.

[00:37:09] Helen Todd: Yeah. Well, congratulations on being selected among the artists to participate. You mentioned that it really pushed you. Could you expand on what some of the biggest challenges were, or what you spent the most time on, to really get the result that you were looking for with the scene that you worked on?

[00:37:30] Ben Nash: Sure. My scene in particular is really three different scenes, and the scene is where a staff member at the secret military base that holds the Terminator arm and hand in stock is studying it and trying to understand where this technology came from. The opening scene to this section is a continuous 40-second one-take in the real movie, where the camera follows these characters through a large room, setting the scene, having people talk, and the camera turns around these people.

And I’ve never done anything close to that in any realm, except for potentially what’s called a Midjourney pan. A Midjourney pan is where you take an image from Midjourney and keep panning in one direction, generating more and more of the scene, so you can get a super long image.

And then to use that image in the video, you can simply animate this long image across the screen. So I thought, oh, that’s the closest I can get to making such a scene. But instead of using a long static image of this background, like a long JPEG scrolling from left to right, I then turned that long image into a continuous set of videos that were seamless and scrolling from left to right.

So the background is a continuous stretch of videos scrolling from left to right, replicating the same scene in this secret base. And then my style for my video was more of a cartoon look.

So I just placed my two cartoon characters, talking to each other, on top of that scrolling video background. So it’s definitely not as... well, I was going to say not as sophisticated as the actual movie, but honestly, I might have put more work into it than the movie did. Doing a continuous scene in film is incredibly hard, but man, the approach I took was also really hard.

So that was the first scene in my section, and I’m really happy with the results there. I threw in some hidden jokes as well, some Easter eggs, in that opening scene. A little bit more of a modern take on it. And then the next scene in my section goes back into traditional filmmaking.

I learned a new term and a new trick for me, and that’s called a rack focus, R-A-C-K. And a rack focus is a shot in film where the camera will be focused on something, let’s say in the distance, and then it zooms and focuses on something in the foreground, or vice versa. It could be focused on the foreground and then change focus to the background.

And so it shifts the view of what the person is seeing without changing, you know, the camera or a cut. So that’s called a rack focus, a completely new term for me a month ago, and I nailed it. I love what it came up with. The rack focus you’ll see is when a scientist looks at the robot hand and it focuses from him to the hand and back to him.

And it looks really good. And that particular rack focus I did made the trailer that comes out today. So I was happy to see them recognize that. So yeah, those were ways that this pushed me. And now I’m learning all about traditional filmmaking and all its tricks and tools, because, you know, I do talk about it often, but I don’t know the vocabulary yet.

I’m still a beginner in this realm. So I’m definitely probably cringeworthy to experts out there. You know, well, of course the rack focus is called the rack focus. I didn’t know what it was a month ago, but I’m learning. And actually, I’m participating in a lot of online spaces, especially on X, with other AI artists who did come from a traditional filmmaking background, where they’re teaching us all these types of tricks and terms.

So yeah, that was what I learned from that project.

[00:41:27] Helen Todd: It’s such a good teaser for us to watch the teaser and the full film when it comes out. And, you know, one thing that comes through, especially when we met and in how you describe all your projects, is how passionate you are as an AI artist about experimenting and pushing yourself through these tools.

And I was just wondering if you could tell us in your own words, like what, what brings you so much joy in using and creating AI art?

[00:41:53] Ben Nash: Good question. I think just as an artist I just want to show people out there. Look what I did. Look what I did. I want attention, you know, like, show me what, you know, show me you like it.

That’s all I want to hear. If you don’t like it, don’t tell me. So I think, you know, there’s a bit of an ego there, but I also am just bursting at the seams. I’m a creator at heart, and this has just allowed me to be a lot more prolific. I can, you know, go a lot faster. Fast is always going to go online, even if I’m compromising quality sometimes, so I want to pump out as much as I can every day.

It’s an experiment as well. So the more I can pump out and share with the world and get, you know, people’s happy, nice responses, the more I’ll keep doing it and get more focused. Again, that’s kind of how I started with AI music videos. You know, I didn’t plan that a year ago, and now that seems to be one of my specialties.

So I’ll keep experimenting and keep trying different tools and different elements. Again, I’m trying to get more into storytelling. Yeah, I just love creating. It’s a passion. I guess it starts back with my family. My, my dad was an architect and I grew up with him as a woodworker as well. So grew up with that.

And again, my mom was a musician and taught piano, but she’s also a traditional artist and paints a lot. And both of them made sculpture. They were hippies. We grew up in the woods. So it was a really interesting, creative background. So that’s probably where my drive comes from.

[00:43:28] Helen Todd: I love that.

And you know, you’re kind of at the forefront of all these AI video animation tools, testing new ones right as they come out. From, I guess, a trend or prediction standpoint, where do you see the next phase of what AI artists like yourself will be able to create, or what the tools are going to enable? Or maybe you just have a wish list of what else you want to see from these tools and in the space as well.

[00:43:59] Ben Nash: Good question.

So one of the aspects, you know, that process I described earlier with the Mac Miller video I did, it used several tools to get the final result, even, you know, one I had signed up for just the day before. So I imagine a future where a lot of these tools are all integrated into one tool.

I don’t think there’ll ever be the perfect tool for making videos, but I imagine that, you know, the user interfaces are going to get better and faster and easier. One of the text-to-audio tools I use is called Coqui, C-O-Q-U-I, and Coqui.ai’s interface is amazing for generating different scenes and parts of scenes, and it seems to be really geared toward people who have generated audio for traditional film in the past.

So, a lot of the traditional filmmaking tools, their paradigms and their traditional ways of going about things, are now making their way into AI tools, which I really appreciate. Again, I haven’t known that realm so well in the past. And then vice versa, too. We’re seeing AI technology get put into traditional video tools like Adobe Premiere. You know, Adobe had their Adobe Max conference last week, where they announced all kinds of new tools. Especially in Premiere: now, in the new version, it can detect the words in your audio and generate captions, and then you can edit video by selecting, copying, and pasting the text captions instead of the actual video in the timeline.

I find that an amazing, powerful tool and hope to see a lot more of those integrations. So we’re seeing it happen in both directions: AI technology going into traditional tools, and then a lot of traditional tools’ setups reaching into the AI tools that have come out. Yeah, I think with storytelling and, you know, creating what we think of as traditional film and television shows, we’re going to see a major breakthrough in the next year. At this point, even though we’re all trying to do stories, it seems like a majority of what people are making in this genre are just trailers for potential films and movies.

Which, again, is because trailers can be so powerful, especially with audio. So those are really impactful at this point. But a lot of us in my circles are really trying to build characters, you know, that might become part of a larger picture, a larger story. We’re also starting to see people come up with their own intellectual properties.

So, new characters that didn’t exist in the past. For example, we see a lot of people recreate, you know, Star Wars and use traditional actors that exist in real life, you know, like Arnold Schwarzenegger, for example. But we’re starting to also see people create their own characters.

There’s a guy in Nigeria who created a character called... I forgot her name, sorry, Princess something. But you know, his character is huge, and he gets hundreds of thousands of views on every video he pumps out. And, you know, it’s like a Disney character, and it’s getting many more views and more reach than a lot of Disney characters today.

That’s where I see a lot of people going this year. I no longer see the actor as a superstar. I see now the creator as a superstar, creating these characters. So they don’t have to compete. They can work together. In fact, Sway Molina, one of the organizers of the Terminator 2 remake project, was an actor who’s now using all these AI tools to create different versions of himself.

In fact, he loves to place himself in his artwork, and he’s creating, you know, extra characters that he interacts with and different storylines. And he’s actually one of the first to actually create stories and have multiple videos in a series. So that’s where I see this happening soon.

It was kind of predicted by now, we would see full length feature films. You know, just a few months ago, people were screaming that, but I actually, I think our Terminator 2 project will probably be one of the first, you know, super long videos to come out. So. Yeah. Hopefully we’ll see this grow and blossom.

[00:48:17] Helen Todd: Oh, we’ll definitely have to get you back on the show after it comes out. And one thing that you have kind of alluded to in this conversation, also in bringing up the Nigerian AI artist, is just how these tools are democratizing creativity and access, and how we’re going to get so many more characters and stories outside of the traditional Disneys of the world, and I find that just really exciting.

So I was curious if there are any other AI artists out there that you want to give shout-outs to, or who are inspiring you with some other really interesting projects as well.

[00:48:54] Ben Nash: For sure, and I do remember, it’s Princess Jane, the character that our friend in Nigeria came up with. One of my favorite AI artists, who’s very influential and helpful in this space, is Dave Villalva. Dave Villalva does have a background in storytelling, but he spent most of his adult life, the past 20 to 25 years, in, he says, Corporate America. And finally, through AI, he decided this summer to leave his traditional office job, where he did a lot of traveling and sales. I mean, it sounds like nothing like an AI artist. And now that he’s working on AI art, he’s doing lots of X spaces throughout the week, interviewing other filmmakers and other AI artists, and he’s really focusing on the art of storytelling.

And he had someone on recently that gave us all a bunch of reference materials, a lot of books, you know, even like the vocabulary books of filmmaking and such. So he’s really pushing the forefront of storytelling, and his artwork is amazing. I think he said that in the past two months he’s produced 2,000 4-second videos with Runway Gen-2, which is one of the premier AI tools to create video.

And so he’s creating so much so fast, and he’s discovered a lot of techniques. He’s sharing those techniques daily. So yeah, Dave Villalva is one for sure. And I mentioned Sway Molina, one of the organizers of the Terminator 2 project. He’s an inspiration to us all as well.

And then my good friend Chet Bliss is starting to get into video as well. He’s super prolific in images, and he’s really a heavy Midjourney user. He’s also now dabbling with Stable Diffusion, and he’s approaching 180,000 images that he’s created in the past year. And now he took on my challenge of “do one video a day and you’ll grow fast.”

So he’s doing that now. I’m happy to see Chet do that.

[00:50:53] Helen Todd: I love that X, formerly known as Twitter, is such a hub for all this activity. And we actually asked some of your fans for, you know, questions to ask you. So I wanted to make sure to include that, and this one came from Jack Aikee.

So he asked two questions, and I’ll put forth both of them for you. He said your views on the metaverse 2.0, the Apple Vision Pro, and 2024 for AI would be interesting and engaging. And, this is a lot in one question, but you can pick and choose what you want to answer. And: your video and AI content rule, make more, build a big account.

So answer that however you would like to dive in.

[00:51:43] Ben Nash: So, yeah, I was formerly an iPhone user who then transitioned to Android, and now I’m back to iPhone. And the reason I just switched back to iPhone was because, this summer, when Apple announced their Apple Vision Pro AR goggles, set for next year, I realized, wow, there’s no one competing in this space that has this type of ecosystem.

Of course, I’ve been a Mac user, and I’m happy to have my headphones actually work between my Mac and my phone. But that’s what I mean about the ecosystem. When these goggles come out next year, it’s just going to be a super game changer. There’s always been, you know, the dream of this type of technology, VR and AR.

And I think Apple’s going to finally pull it off. We’re actually also seeing quite a bit of success with Facebook’s Quest 3, just in the past few months. There seem to be more and more videos, at least in the AI art space, of people creating, you know, truly amazing, mind-blowing, interactive experiences.

I think one of the big key types of content that Apple seems to be focusing on next year, and that’s going to just explode, is the 180-degree view type of image, not a full 360. I think a lot of people are going to consume this, just looking forward and looking left and right.

And, you know, it’s going to be an expensive product, the first generation especially, at $3,000, I think, but I’m definitely saving my pennies and going to get one, because I’m going to create content for it. That’s what I want to do. It’s going to be a totally new way to create content. And, you know, again, I’m trying new tools every day and trying to stay on the forefront of that.

And if I can create content for the Apple Vision Pro, I’m assuming I’ll be one of the first doing so. So hopefully you’re going to be seeing my name in that space next year. It’s coming soon. I love it. And to that second question, I think about creating content as much as possible. That’s one of my mantras.

You create as much as you can because it’ll help you not only personally; if you publish it, it’ll help you professionally too. The more and more you publish, the bigger and bigger your audience will get. And I truly believe that. And it’s been through my own experience. I’ve witnessed that happen.

And it’s just, that’s how you grow big these days. It’s not about sending lead gen emails. It’s about creating content and creating a reputation for yourself as someone who does that.

[00:54:05] Helen Todd: Oh, I know we’re getting close to the end of our interview. Although I know that we’re just really scratching the surface for this topic and all the amazing stuff that you’ve done.

But if you want our viewers and listeners to walk away with one thing or remember one thing from our conversation, what is that one thing that you want them to walk away with?

[00:54:24] Ben Nash: I think it’s that last bit about creating content. Don’t be afraid. Keep diving in. I have people ask me all the time, you know, how do I get started?

Just start. You’ll learn so much in the first days, but also give yourself that 30-day challenge of doing one thing every day. You know, create something and publish it every day. It doesn’t have to be perfect. It doesn’t have to be long. It can be short and just a snippet. You know, that’s how you learn.

Half of my posts that I think will go big or viral don’t. And the ones that I think are just weak and short and easy are the ones that usually get the most attention. So there’s no exact, you know, algorithm for content, but you’ll learn what people respond to for sure. So create.

[00:55:08] Helen Todd: I love that.

Yes. Keep creating. And how can people find you? And if there are any other projects you want to make sure to promote, the stage is yours.

[00:55:19] Ben Nash: Thank you, Helen. So on X, my user handle is Ben Nash, B E N N A S H, and it’s the same user handle on almost every social media platform. So, YouTube.com/BenNash. Facebook and Instagram @BenNash.

I’m not that prolific there. Most of my posts are on X. Also, bennash.com is my website, though that’s kind of geared towards my previous life. I’ll be updating that very soon with a heavy focus on AI art and music video. And so feel free to reach out to me on any of those channels, and I’d love to work with any other creative people.

[00:55:55] Helen Todd: I know when we met up, you mentioned that these AI tools have just been such a life changer and game changer in the trajectory of your career and where you want to go. So I really appreciate you sharing your story, and hopefully it will inspire some other people who might be, you know, hesitant to dip their toes into it because it’s, I mean, the speed at which this stuff is coming out and what has happened in just a year for you.

And I’m so excited to see how your story evolves and unfolds, and all the other amazing things that you’re going to be creating with these tools and Apple Vision Pro. So thank you for sharing some of your journey with us here today, Ben.

[00:56:37] Ben Nash: Oh, thank you so much for having me. I appreciate this opportunity to share myself.

And I’m also very happy to see your podcast and listen to it. It’s also an inspiration to this space. So thanks for doing that as well. Thank you.

[00:56:50] Helen Todd: The AI community has to stick together here. So, we’re a very welcoming community. So thanks again. Thanks.

Thank you for spending some time with us today. We’re just getting started and would love your support. Subscribe to Creativity Squared on your preferred podcast platform and leave a review. It really helps and I’d love to hear your feedback. What topics are you thinking about and want to dive into more?

I invite you to visit creativitysquared.com to let me know. And while you’re there, be sure to sign up for our free weekly newsletter so you can easily stay on top of all the latest news at the intersection of AI and creativity. Because it’s so important to support artists, 10 percent of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized nonprofit that supports over 100 arts organizations.

Become a premium newsletter subscriber or leave a tip on the website to support this project and ArtsWave. And premium newsletter subscribers will receive NFTs of episode cover art and more extras to say thank you for helping bring my dream to life. And a big thank you to everyone who’s offered their time, energy, and encouragement and support so far.

I really appreciate it from the bottom of my heart. This show is produced and made possible by the team at Play Audio Agency. Until next week, keep creating.