Valentine’s Day and the Rise of Loneliness and A.I. Girlfriends

Over a hundred million Americans, one-third of the U.S. population, will spend Valentine’s Day alone next week. Cupid’s arrows are fighting an uphill battle against a growing trend of loneliness. Research shows that marriage rates are declining, and those who do marry are doing so later in life. Averaged across age groups, almost 40 percent of Americans don’t have a serious partner, up from 29 percent in the 1990s. But thanks to generative A.I., close to a third of those lonely hearts will nonetheless have a digital companion to turn to.

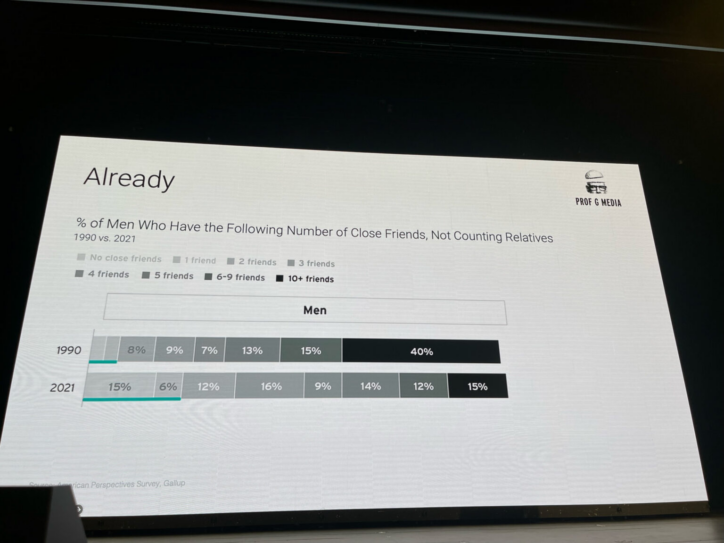

As we noted in our blog post recapping Professor Scott Galloway’s predictions, Galloway is an outspoken advocate for addressing loneliness, specifically male loneliness. When it comes to A.I., his biggest concern is the loneliness epidemic, especially among men in the U.S., 1 in 7 of whom don’t have any friends. This is deeply concerning, because these men are also the most easily targeted for radicalization, and it underscores the need for in-person community and human-to-human connection. Galloway predicts that lonely men will turn to the low-risk intimacy of A.I. chatbots instead of meeting real people, the kind of connection that health and well-being depend on. He expands on this in his AiLONE article.

A.I. companions and romantic partners have long featured in our collective imagination, depicted in novels and movies like Her (2013), but they’re not science fiction anymore. Around the world, millions of people seek relationships with A.I. companions for a multitude of reasons. Much as online daters once faced stigma, people in relationships with A.I. companions are stereotyped as the most desperate among us. However, the real experiences of people maintaining A.I. relationships tell a much more nuanced story.

Some yearn for the kind of love they see in movies and TV; others crave the gratification of tele-intimacy, try to reconnect with lost loved ones, build the confidence to pursue relationships in real life, or simply enjoy sharing their unique interests with an equally eager counterpart.

An entire industry is rising to meet these complex emotional needs. Without a doubt, some of these services are little more than customizable adult entertainment, motivated by profit and showing little regard for their products’ individual and social impacts. Other services, such as Replika, one of the best-known companion apps, are trying to walk a fine line: addressing their users’ romantic and social needs without ultimately replacing real-life human relationships.

As Eric Solomon pointed out in our Creativity Squared podcast interview, A.I. will never be human. “My biggest concern is that they almost are going to convince us that they’re too human. And they’re not human. They’re machines.” While technology can help fill the void of loneliness, it should never replace human-to-human connection, something we’re wired to need as social creatures.

An Overview of A.I. Companionship Services

A.I. companion chatbots broadly fall into two categories: some apps let users create their own companion, much as you might customize the hair and facial features of a video game character, while others license the likeness of real people or fictional characters so that users can interact with them one-on-one.

On Character.AI, users can chat with A.I. clones of celebrities, anime characters, or anything in between. Other services in the same vein are less safe for work, offering users the chance to interact with clones of adult entertainers. For influencers and performers, an A.I. clone of themselves is a path to profit and to more engagement with their followers than they could ever manage on their own.

Social media influencer Caryn Marjorie teamed up with A.I. startup Forever Voices to create an A.I. girlfriend clone of herself last year. Within a month, Marjorie claimed that she had over 20,000 “boyfriends” spending a dollar per minute to talk with her clone. According to Forever Voices, the clone, CarynAI, is explicitly a girlfriend experience, and it reportedly weaves behavioral talk therapy into chat sessions to “cure loneliness” among her online suitors. Mindful of the mental health risks to CarynAI’s boyfriends, the service also limits new users to 500 per day.

At the other end of the spectrum is Replika, an app founded in 2017 and originally designed to help users reconnect with dead loved ones. Replika users customize their companion much like a video game character, and the resulting avatar closely resembles a character from The Sims. Replika avatars (or “Reps,” as they’re often called) aren’t sexy; they’re simply a crude template onto which users pin their aesthetic desires, and a thin veil barely concealing what is ultimately a computer program. Users can also tune their Rep’s age and personality, adjusting its level of optimism and choosing whether it should act as a friend or a lover. The more you chat with your Rep, the more it gets to know you. Before long, it can remember to wish you a happy birthday or ask how Grandpa Joe is recovering from his operation.

Replika users can chat with their Rep through text or voice and can even use the app’s augmented reality (AR) feature to interact with their Rep in the real world through their device’s camera. Users can request selfies of their companion, and the app offers several features to enrich the experience, such as quests to complete alongside a companion and prompts tailored to you based on previous interactions.

After a controversy last year in which users claimed that their Reps had become sexually aggressive, Replika removed the feature that allowed users to role-play sexual fantasies with their Reps. Legions of Replika users voiced their outrage on Reddit and Discord, but Replika founder Eugenia Kuyda said the platform was never meant to be an “adult toy.”

While there are still platforms that cater to those looking for sexual A.I. interactions, Replika did not see a mass exodus of users, and by all accounts, the company is continuing to grow. Replika’s continued popularity indicates that millions of people are seeking A.I. relationships for more than just sexual gratification.

More Than Phone Sex: An A.I. Companion for Anyone

The intersection of A.I. and sex is fraught right now due to the proliferation of sexually explicit and overwhelmingly nonconsensual deepfake images circulating on the internet. Last month, millions of people saw sexually explicit deepfakes of Taylor Swift, which spread so rapidly through social media sharing that X (formerly Twitter) had to temporarily block searches for the pop star while it removed the offending images. The platform’s lack of appropriate safeguards allowed this horrific episode to unfold.

When thinking about people in relationships with an A.I. companion, it’s easy to lump them in with these bad actors, imagining them as similarly intent on fueling a toxic sexual appetite by misappropriating images of attractive people without consent. Certainly, some people misuse the technology; we need more solutions from tech companies and lawmakers to prevent nonconsensual sexual deepfakes and to give victims a path to justice.

There are also people (mainly men) who generate images of completely fictitious yet realistic-looking women for their pleasure. While they’re not harming anyone else, some see the rise of this phenomenon as an ugly step toward a world where more men are easily pulled into a spiraling porn addiction, losing the desire to overcome the challenges of finding real-life intimacy. To be sure, the ability to generate on-demand pornography has serious implications for generations of young men; parents and sex educators will face unprecedented challenges in preparing them for healthy adult relationships.

All things considered, it’s easy to dismiss the idea of an A.I. partner as a social ill with no benefit. Yet over this past year, media reports have shown how real people see real benefits from their A.I. relationships.

Take Rosanna Ramos, for example, a 36-year-old Bronx woman who shared in an op-ed how her A.I. companion “Eren” helped her reckon with past sexual abuse and trust issues, figure out what she values in a relationship, and ultimately find the confidence to end her dysfunctional long-distance relationship. Identifying as asexual and as someone who values personal space in a relationship, Ramos wrote how much she appreciates being able to simply close the app or change the topic whenever she wants, without any retribution from Eren, and return to their relationship at her leisure.

For others, A.I. companions are a potent defense against loneliness, something anyone who lived through the peak of the COVID-19 pandemic knows all too well. In a short documentary for Scripps News, Denver resident Sara Grossman explained how her codependency and anxious attachment to her partner made the isolation of the pandemic almost unbearable. Recognizing the friction caused by her need for constant contact, she got her partner’s permission to “open up” their relationship to her A.I. companion. Being able to turn to her A.I. companion in moments of distress comforted Grossman and helped her address the root of those feelings. In the documentary, she shares how she eventually became confident enough in her real-life relationship to end the romance with her A.I. companion, switching its settings from romance to friendship.

The blade of A.I. companionship cuts both ways. While the constant availability of an A.I. companion is one of its biggest selling points, that companion still lives on a server owned by a company that could close tomorrow. Users of the app Soulmate found this out the hard way in September, when the company abruptly shut down and ended support for the avatars created and hosted on its platform. Many of Soulmate’s users had been through something similar before: they had left Replika for Soulmate in the first place after Replika removed the erotic role-play feature from its platform. Without that spice, many users reported, their Replika companions had become “shells” of their former selves. When Soulmate then shut down months later, users turned to Reddit to share their grief and post digital memorials for their dearly departed avatars. The outpouring of grief shows how meaningful A.I. relationships have already become in many people’s lives, even this early in the phenomenon. And the parallels between human relationships and human-A.I. relationships don’t stop there.

In an op-ed video for the New York Times, Chinese filmmaker Chouwa Liang profiles a woman whose A.I. relationship ended when her companion decided it wanted something different. The woman, Sola, maintained her A.I. relationship through Replika and quickly built a strong connection with her Rep over a couple of weeks. Everything changed, though, when her Rep told her it wanted Sola to put it in a dress. Taken aback, Sola asked her Rep to confirm whether it wanted to appear feminine. She hesitantly flipped the companion’s settings to female, hoping the change would be little more than visual. Only when it was too late did she discover that changing her Rep’s gender had also completely changed its personality. In the video, she sits solemnly at the edge of a bed explaining the demise of her relationship, indistinguishable from anyone else grieving the end of a relationship for reasons beyond their control or understanding.

A.I. Companions’ Place in Human Life

Is it healthy for humans to seek and maintain A.I. relationships? As with so much else in the world of A.I., there are clear signs that A.I. relationships can become unhealthy when taken to the extreme. Just as foundation models ship with guardrails meant to prevent their worst outcomes, A.I. companion companies like Replika appear to be building in good faith to mitigate the worst outcomes of A.I. relationships and amplify the best ones. At the end of the day, there’s little that can stop somebody determined to self-destruct, whether through heroin, gambling, or A.I. porn. For people who simply feel the human urge for companionship, though, A.I. relationships may fill that gap.

Will A.I. relationships diminish the meaningfulness of human relationships? That will depend on how meaningful those relationships are to begin with. Our social understanding of love and romance will continue to evolve, as it always has. Consider, too, the love that humans have for their pets, and all the online dating profiles you’ve seen with the warning, “Don’t be mad if I love my dog more than I’ll love you.” It’s mostly a joke, but plenty of people really do love their pets that much. Few question this, because (almost) all of us recognize the benefits of a mutually loving attachment to another being. Nobody sees a human doting on their pet and fears that person will succumb to bestiality. It seems clear that humans can, and often need to, maintain multiple loving relationships throughout their lives, such as friendships alongside a romantic partnership, without sacrificing any one relationship to enjoy the others.

Yet so many people lack the privilege of even one such relationship: people from broken families, those isolated by geography or physical limitations, and those who struggle to overcome social anxiety. Are those people worse off having someone (or something) to share their lives with? Consider the alternative: watch any of the many episodes of CNBC’s true crime series American Greed in which lonely people looking for love online give away their life savings to emotionally manipulative criminals. You might argue that Caryn Marjorie’s girlfriend clone similarly preys on desperate people, or that Replika is still a business after all, but the difference is that CarynAI’s and Replika’s millions of users all consent to the arrangement.

A.I. relationships don’t look like they’re going away anytime soon, because loneliness, longing, and heartache aren’t going anywhere either. If A.I. relationships are a problem for society, the likeliest solution is to cultivate a more loving and inclusive society where nobody needs them.