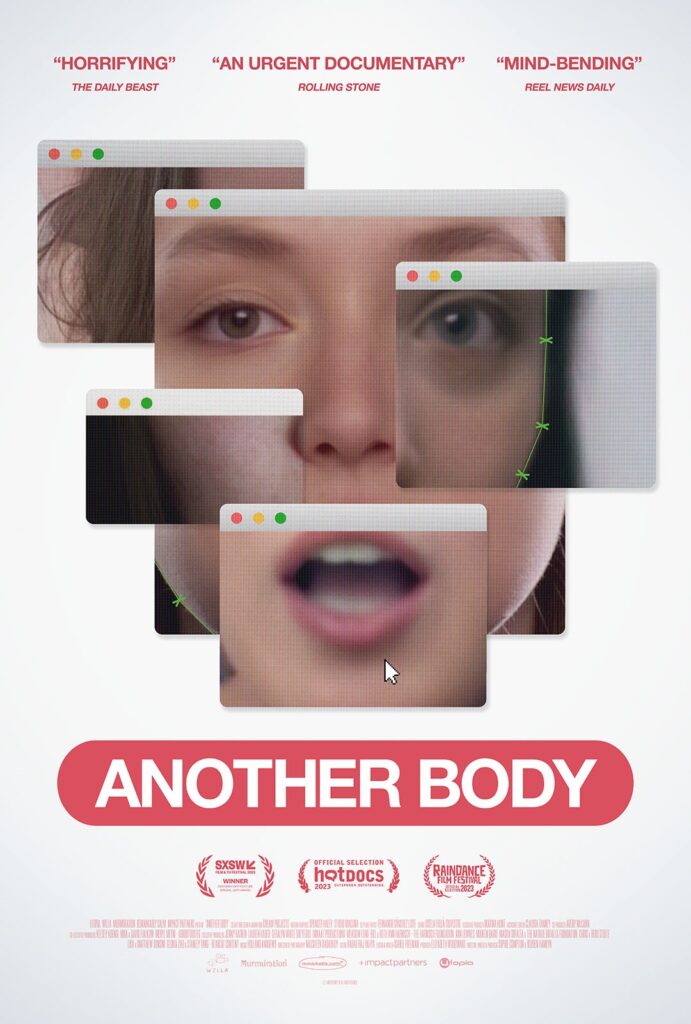

“Another Body” & the Disturbing Rise of Deepfakes

The recent documentary “Another Body” provides a disturbing look into the real-world impacts of deepfake technology — specifically, how it is being used to create nonconsensual fake pornography.

Directed, written, and produced by Sophie Compton and Reuben Hamlyn, the film, which had its world premiere at South by Southwest this year, chronicles a young woman named Taylor who discovers that someone has used A.I. technology and photos from her social media profiles to create realistic fake pornography featuring her face.

Taylor describes the psychological devastation of finding herself violated through these videos. She discusses feeling unsafe, knowing the deepfakes could help strangers track her down in real life. And despite filing police reports, she learns there are few laws that actually ban this kind of nonconsensual objectification.

What’s more, as the film shows, a friend of Taylor’s from college has suffered the same fate. Then, in a dramatic twist (spoiler alert!), the filmmakers reveal that Taylor and the other subjects of the film have themselves been deepfaked, consensually and with the same technology that was used against them maliciously, both to protect their identities while they share their stories and to showcase what the technology can do in the hands of good and bad actors alike.

As Compton explained:

“It was really important to us that everything we anonymized was just like it happened and we only changed the details that were identifying. We wanted to reflect the exact truth and the spirit of the story.”

Through Taylor’s story, “Another Body” exposes deepfakes as the latest evolution of technology being used to harass and subjugate women. This dark and deeply troubling reality is undoubtedly among the most harmful issues posed by deepfakes.

But it is not the only one. As A.I. continues advancing rapidly, deepfakes have the potential to undermine truth and trust in all realms of society.

Let’s explore the topic in more depth.

What are deepfakes, and how do they work?

Deepfakes use powerful A.I. techniques to manipulate or generate video, audio, and imagery that digitally impersonates people’s faces, voices, and movements. Many are made without the depicted person’s permission and for nefarious purposes, though, as “Another Body” itself shows, the same techniques can also be used with consent.

While photo and video manipulation has existed for decades, deepfakes take it to an unprecedented level, generating strikingly convincing forged footage that can sometimes even hold up against professional scrutiny.

This is possible due to recent breakthroughs in deep learning algorithms.

The “deep” in deepfakes refers to deep learning. This advanced form of machine learning uses neural networks loosely inspired by the structure of the human brain. By processing massive datasets, deep learning algorithms can analyze patterns and “learn” complex tasks like computer vision and speech recognition.

Specifically, networks analyze images and/or videos of a target person to extract key information about their facial expressions. Using this data, the algorithm can reconstruct their face, mimic their facial expressions, and match mouth shapes.

This enables realistic face swapping.

So if Person A is the one being deepfaked and Person B appears in the source video, the algorithm can convincingly depict Person A’s face doing whatever Person B is doing.
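To make the face-swap idea more concrete, here is a toy Python sketch of one commonly described design: a single encoder shared by both people, plus a separate decoder for each person. In real systems all three are neural networks trained on many images. Here, as a deliberate simplification, each “image” is just four numbers (two for identity, two for expression), the encoder is hand-coded to keep only the expression numbers, and each decoder adds back one fixed identity. The names `shared_encoder`, `Decoder`, and `face_swap`, and all of the numbers, are hypothetical illustrations, not code from any real deepfake tool.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]

# Toy "image" layout (an assumption for illustration):
# [identity_1, identity_2, expression_1, expression_2]
# The first two numbers stand in for who the person is (face shape, skin tone),
# the last two for what they are doing (mouth shape, head tilt).
IDENTITY_DIMS = 2


def shared_encoder(frame: Vector) -> Vector:
    """Stand-in for the shared encoder: keep expression/pose, drop identity.

    A real encoder learns a compressed representation from data; this toy
    version simply slices off the identity numbers by hand.
    """
    return frame[IDENTITY_DIMS:]


@dataclass
class Decoder:
    """Stand-in for a per-person decoder that can only render one face."""

    identity: Vector  # hypothetical fixed appearance of one person

    def __call__(self, latent: Vector) -> Vector:
        # Rebuild a full "image": this person's identity plus the encoded expression.
        return self.identity + latent


def face_swap(source_frame: Vector, target_decoder: Decoder) -> Vector:
    """Encode Person B's frame, then decode it with Person A's decoder."""
    return target_decoder(shared_encoder(source_frame))


decoder_a = Decoder(identity=[1.0, 0.2])  # Person A: the person being deepfaked
frame_b = [0.3, 0.9, 0.7, -0.1]           # Person B: their own identity, plus an expression

print(face_swap(frame_b, decoder_a))  # [1.0, 0.2, 0.7, -0.1]
```

The shared encoder is what makes the swap possible in this design: because both decoders learn to read the same kind of code, Person A’s decoder can interpret a code produced from Person B’s frame and render it with Person A’s face.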

The results can appear remarkably life-like.

The term “deepfake” itself dates to 2017, when a Reddit user calling themselves “deepfakes” shared pornography in which celebrities’ faces had been swapped into adult videos using artificial intelligence.

Reddit quickly banned the community for hosting nonconsensual pornography, but the momentum was already unstoppable. Around 2018, consumer apps brought deepfake creation to the masses. As algorithms improved, users could produce fabricated videos, voices, and images from home PCs in minutes.

In parallel, developers built deepfake tools for gaming, film production, virtual assistants, and training content. Meanwhile, political usage emerged globally in parody videos and propaganda.

Experts estimate that at least 500,000 video and voice deepfakes have circulated on social media this year alone. As output quality continues to advance rapidly, many deepfakes now pass the “naked eye test,” making them all but indistinguishable from reality without forensic analysis.

The scale of this volatile technology presents an unprecedented risk for safety, geopolitics, and truth itself if left unchecked.

Potential Threats of Deepfakes

Beyond personal violations like those exposed in “Another Body,” deepfakes threaten wide-ranging harms:

Disinformation Wars

Manipulated videos and imagery could convincingly depict political leaders declaring election tampering, demanding violence from supporters, using slurs, or any number of dangerous scenarios. Deepfakes could help sway tightly contested races or erode trust in government institutions over time.

For example, in 2020 a Nancy Pelosi video that was manipulated with traditional editing techniques to slow down her speech and make her appear inebriated quickly went viral on social media and impacted the news cycle.

In another real-world example, Andy Parsons, head of Adobe’s Content Authenticity Initiative, discussed in our interview how Trump’s Access Hollywood tape might have been discredited through accusations of fakery if the technology had existed then.

Financial Crime

A.I. voice cloning services already enable fraudsters to imitate executives and scam companies. As the tech advances, doctored footage may fool more victims, or even let fraudsters convincingly pose as someone else on camera to commit identity theft. Forged media could also manipulate stock prices or cryptocurrency values.

Escalating Global Conflicts

Deepfakes distributed as citizen journalism could show fake atrocities committed by one nation in order to provoke reprisal attacks from its adversaries. Even fakes that are later debunked could worsen tensions, a risk that grows more dangerous when those tensions involve nuclear-armed states.

Undermining Truth Itself

Widespread synthetic media poses threats to truth and reality that we’re only beginning to fathom. Experts warn it may fuel widespread cynicism and doubt in all video evidence. People could start questioning whether major events even occurred or rejecting inconvenient truths as “deepfakes.”

In our interview with Gerfried Stocker, artistic director and co-CEO of Ars Electronica, he discussed this threat in the context of manufactured realities.

During the ongoing war between Israel and Hamas, misinformation and disinformation are playing a role in stoking emotions and attempting to sow division online. While most of this content has not involved deepfakes so far, some very realistic fake imagery has appeared.

The existence of deepfake technology also creates a more insidious problem: it muddies the waters so that observers doubt even real photos and videos.

The potential for deepfakes to further disrupt this and other future conflicts and global events is deeply concerning.

Additionally, with 2024 elections approaching in the U.S. and 39 other countries, officials are extremely worried about the havoc deepfakes could wreak.

Bad actors could leverage deepfakes to erode public trust, turn groups against each other, and undermine credibility in the results. And with political polarization and diminishing trust in institutions already high, this misinformation could tip society over the edge.

Fighting Back Against Deepfakes

So what can be done to stop deepfakes from spreading chaos, psychological abuse, and disinformation? Progress toward legal solutions seems slow amid debates around free speech and exactly what constitutes unacceptable manipulated media. Some countries have begun introducing restrictions, but global coordination remains poor.

On the technology front, researchers are locked in an “arms race” between improving deepfake generation and improving deepfake detection. So far, though, humans seem to be better than algorithms at spotting fakes.

The Content Authenticity Initiative is a community of media and tech companies, NGOs, academics, and others working to promote the adoption of an open industry standard for content authenticity and provenance. It recently introduced a Content Credentials “icon of transparency,” a mark intended to give creators, marketers, and consumers around the world a signal of trustworthy digital content.

Legislative solutions are also being introduced, both state by state and in the federal government. If passed, bills such as Representative Yvette Clarke’s DEEPFAKES Accountability Act would seek to protect individuals nationwide from deepfakes by criminalizing such materials and providing prosecutors, regulators, and particularly victims with resources.

While there is no national legislation specifically addressing deepfakes at this time, and aspects of Clarke’s bill in particular, and of government regulation of A.I. in general, remain under debate, tech companies and elected officials are generally aligned in their concerns about the dangers of leaving deepfakes unchecked.

Social context also aids in detecting deepfaked media.

If a public figure appears to be doing something wildly out of character or illogical, skepticism is warranted. Relying on trusted authorities and fact-checkers can also help confirm or debunk dubious media.

Ultimately, we all must approach online content with sharpened critical thinking skills. Assume everything is suspect, check sources, and dig deeper before reacting or sharing.

Our individual actions online will decide whether truth drowns or stays afloat.

Here are some tips for identifying possible deepfakes yourself:

- Look around mouth/teeth – strange textures may indicate manipulated imagery

- Check lighting/shadows for inconsistencies

- See if reflections in eyes/glasses seem accurate

- Notice any jittery motion, especially in face/head area

- Watch background details for artifacts or blurriness

- Listen for odd cadence/tone/quality in audio

And most importantly — verify using outside trusted sources before believing!

Staying Safe Online in 2023

The internet is a treacherous place these days. Between risks of fraud by voice deepfakes, nonconsensual porn videos featuring our faces, and mass manipulation through misinformation and disinformation, pitfalls abound.

Yet, there are still some precautions you can take to minimize your risk exposure.

Start by limiting the personal information you share publicly online or with strangers. Be sparing about revealing locations, family details, ID documents, or other sensitive materials. Lock down your social media privacy settings and remove old posts that may no longer represent you well. Next, set up multifactor authentication wherever possible to protect your accounts from unauthorized access, and use randomly generated passwords, unique to each site and stored in a reputable password manager.
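A password manager will generate these for you, but for readers curious what “randomly generated” means in practice, here is a minimal Python sketch using the standard library’s `secrets` module, which is designed for security-sensitive randomness (unlike the general-purpose `random` module). The 20-character default, the 12-character minimum, and the example site names are illustrative assumptions, not an official recommendation.

```python
import secrets
import string

# Character pool: upper- and lowercase letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a password whose characters come from a cryptographically secure source."""
    if length < 12:  # assumed floor for this sketch, not a formal standard
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


# One distinct password per site (site names are hypothetical examples).
for site in ["email", "bank", "social"]:
    print(site, generate_password())
```

Because each call draws fresh random characters, every site gets its own password, so a leak from one service does not expose the others.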

When encountering dubious claims, photos, or videos online, remember to verify using outside authoritative sources before circulating or reacting. Seek out high-quality journalism to stay accurately informed rather than hyperpartisan outrage bait. Fact-checking sites can assess the credibility of viral stories.

If confronted with deepfake pornography or identity theft, immediately document the evidence and file reports. Sadly, local authorities often lack resources for high-tech crimes, but they may help remove content under certain laws or refer cases to investigators. Reach out to supportive communities dealing with online exploitation as well.

Finally, in the latest Creativity Squared interview, Maya Daver-Massion, head of online safety at PUBLIC, a London-based digital transformation partner, mentioned organizations like the National Center for Missing and Exploited Children, which offers resources for parents and age-appropriate learning materials that teach kids about staying safe online.

She also discussed the Internet Watch Foundation, another organization on the front lines, which helps companies purge child sexual abuse material (CSAM) from their servers and assists law enforcement in prosecuting those who traffic in CSAM. U.K.-based Parent Zone has also developed a tool with Google called “Be Internet Legends” for 7- to 11-year-olds, which gamifies the online safety learning experience.

Meanwhile, platforms are working to label and remove harmful synthetic content, government agencies are striving to improve deepfake detection, and legal experts are drafting laws against harmful generative media.

Some consumer apps even empower users to watermark and certify their videos.

As “Another Body” demonstrates, the threats are daunting. However, through ethical engineering practices, projects like the Content Authenticity Initiative, updated policies, legal consequences, and our own human critical thinking abilities (we do still have those, even in 2023!), there remains hope for a digital landscape that favors truth over deceit and offers a safe online environment for all.