Ep7. Adobe on A.I. & Trust: Understand the Urgency for Media Transparency & Ethical Generative A.I. with Andy Parsons from Adobe’s Content Authenticity Initiative

Building trust online and establishing authenticity, transparency, and ethical A.I. is a big task. And it’s one that Andy Parsons is tackling head-on.

Andy Parsons is the Senior Director of Adobe’s Content Authenticity Initiative (CAI), which is creating the open technologies for a future of verifiably authentic content of all kinds. Helen, your Creativity Squared host, is a proud CAI member!

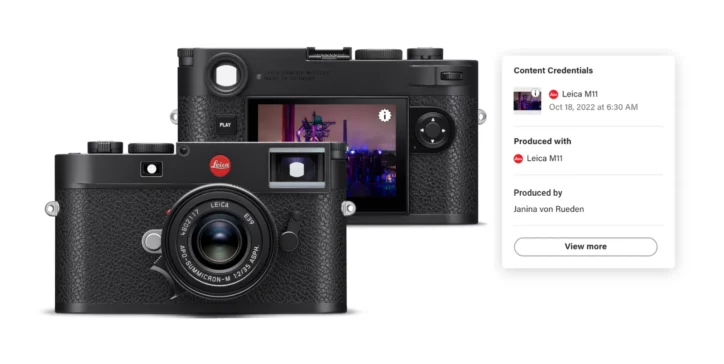

With collaborators across hardware (including brands like Leica Camera!), software, publishing, and social platforms, the CAI is empowering communicators, journalists, and artists with secure provenance. For consumers, this important work restores trust and transparency to the media we experience.

Throughout his career, Andy has worked to empower creative professionals with innovative technologies. Prior to joining Adobe, Andy founded Workframe (acquired in 2019), the pioneering visual project management platform for commercial architecture. Andy previously served as CTO at McKinsey Academy, McKinsey’s groundbreaking educational platform and he co-founded Happify, the world’s leading mobile platform for digital therapeutics and behavioral health.

As a keynote speaker, podcast guest, and invited expert, Andy has addressed audiences at SXSW, NAB, RightsCon, CVPR, DMLA, CEPIC, Infotagion Podcast, and FLOSS Weekly. He has been quoted in many publications including WIRED and Axios.

Andy lays out CAI’s mission to combat disinformation through verifiably authentic content. By combining standards, technology, and open source collaboration, Adobe is paving the way for a more authentic digital experience and training its own generative A.I. tools in an ethical manner.

Keep reading for some of the highlights from our conversation. Andy also drops some tantalizing teases about Adobe features in the pipeline!

The Urgent Need for Content Authenticity

At SXSW 2023, Andy spoke on a panel about the ethics of deep fakes and whether they are always bad. He recognizes that people are bombarded with content that may be faked for creative or negative purposes. Through a combination of standards, technology, and open source, Adobe’s CAI aims to bring a level of trust so people can verify and understand what they see online. In a world full of powerful fakes, and with elections around the world and in the U.S. coming up in 2024, it’s crucial to define reality and ensure transparency.

“Holding a grasp on what is actually real and what is trustworthy is what the world needs, has always needed, but I would say is particularly challenged in these recent days.”

Andy Parsons

Andy believes in the need to be careful, pay attention, and put measures in place to reduce the impact of fake media. Disinformation is nothing new, but the ease with which it can be built and disseminated through social media and A.I. is new.

The more power that is put in creative hands, the more potential damage you put in bad actors’ hands. At Adobe, they want to empower good people to do the right thing. He believes we need to get to a place where people can click on a piece of content and know where it came from. Media without evidence of origins should be questioned. He feels the urgency to get this work done and have widespread adoption so people have digital literacy and are not fooled by fake content.

What Is The Content Authenticity Initiative (CAI)

The Content Authenticity Initiative was announced by Adobe in 2019 and is a community of media and technology companies, NGOs, creatives, educators, and others working to promote the adoption of industry standards for authenticity and provenance. It’s a group working together to fight disinformation through attribution. As of the episode recording, there were over 1,300 members including The New York Times, BBC, The Associated Press, Getty Images, Gannett, and Microsoft.

“Just like you sign a digital document or PDF, we think that media should be signed in the same way so that we can understand its origins.”

Andy Parsons

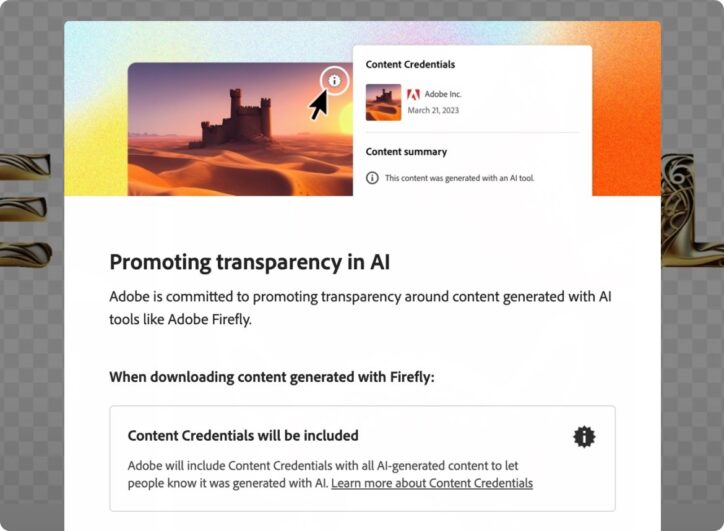

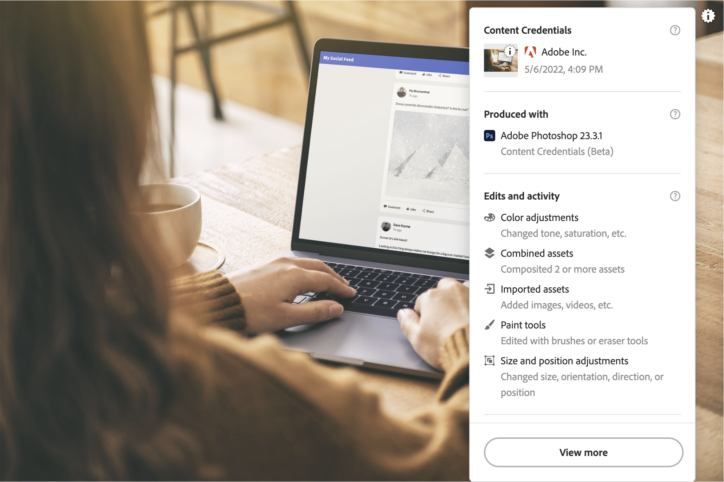

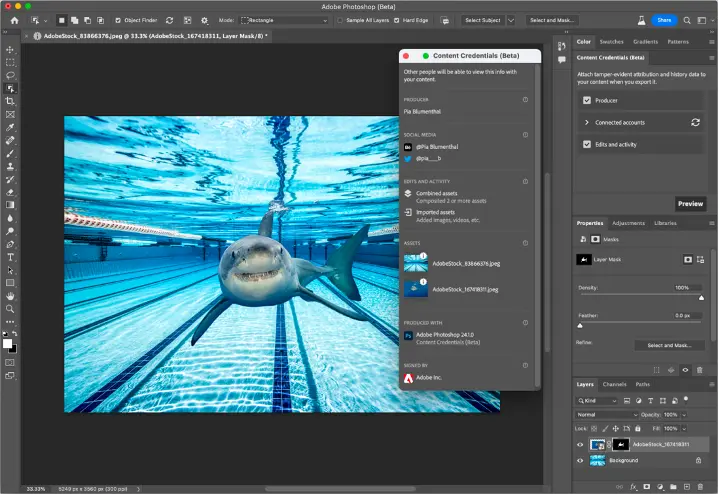

It’s about believing in the idea of provenance, which is understanding where things came from, what they are, who made them, and whether it was made by a person or artificial intelligence. How does it work? It’s effectively a way to capture a new kind of metadata that is cryptographically verifiable. For example, if something is taken on a camera, or goes through Adobe Photoshop, that technology captures critical information that is verifiably factual about how it was made. It allows for identity verification and A.I. transparency. Furthermore, Adobe built in opt-in features to their products to disclose if something was done by A.I. as they believe in transparency. It’s Adobe’s intent to empower creators with this tech and to mitigate against deep fakes by advocating for the use of Content Credentials and bringing transparency to content.
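
To make the idea of cryptographically verifiable metadata concrete, here is a minimal sketch in Python. It is not Adobe’s or the C2PA’s implementation: real Content Credentials rely on certificate-based digital signatures, while this example uses an HMAC from the standard library as a stand-in so it runs without extra packages. The names `make_manifest`, `verify_manifest`, and `DEMO_KEY` are hypothetical and exist only for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real signer would hold a private key tied to a
# certificate; HMAC is only a standard-library stand-in for that signature.
DEMO_KEY = b"demo-signing-key"


def _encode(payload: dict) -> bytes:
    """Serialize the payload canonically so signer and verifier see the same bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def make_manifest(media: bytes, claims: dict, key: bytes = DEMO_KEY) -> dict:
    """Bind provenance claims to the media bytes and sign the result."""
    payload = {
        "content_hash": hashlib.sha256(media).hexdigest(),
        "claims": claims,
    }
    signature = hmac.new(key, _encode(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_manifest(media: bytes, manifest: dict, key: bytes = DEMO_KEY) -> bool:
    """Return True only if neither the claims nor the media changed after signing."""
    payload = manifest["payload"]
    expected = hmac.new(key, _encode(payload), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claims were edited after signing
    return payload["content_hash"] == hashlib.sha256(media).hexdigest()
```

In this sketch, calling `make_manifest(photo_bytes, {"tool": "camera", "ai_generated": False})` produces a record that `verify_manifest` accepts for the original bytes and rejects if either the image or the claims are altered afterward.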

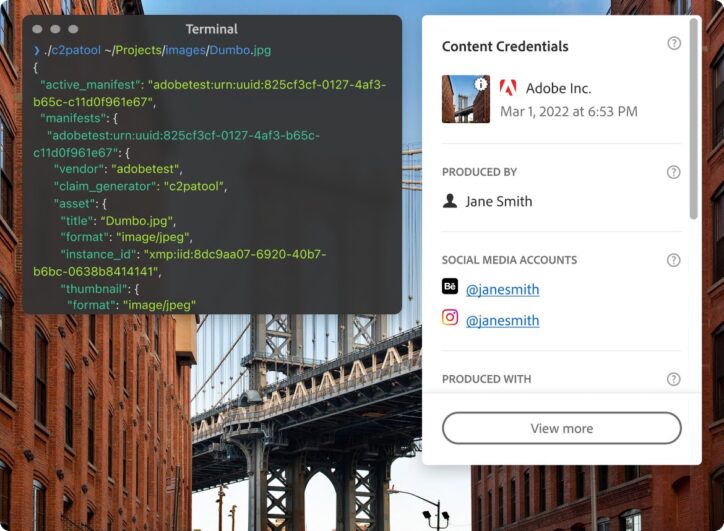

Coalition for Content Provenance and Authenticity (C2PA)

The underlying standard and openness of all this is not an Adobe technology. That’s where the Coalition for Content Provenance and Authenticity (C2PA) comes in. The C2PA is a Joint Development Foundation project that unifies the CAI and Project Origin to collectively build an end-to-end open technical standard to provide publishers, creators, and consumers with opt-in, flexible ways to understand the authenticity and provenance of different types of media.

This non-profit body exists to write the technical standards and specifications. The work is public and it is absolutely free. Things are being built open-source to make them widely available and there’s no intellectual property or patent encumbrances. Andy encourages people to get involved! Adobe wants people to contribute and be in touch with their team so more voices are heard and we can make forward progress addressing the issues surrounding artificial intelligence.

“That’s a matter of public record effectively. So no matter where that image or video goes, you can always get back to the provenance data.”

Andy Parsons

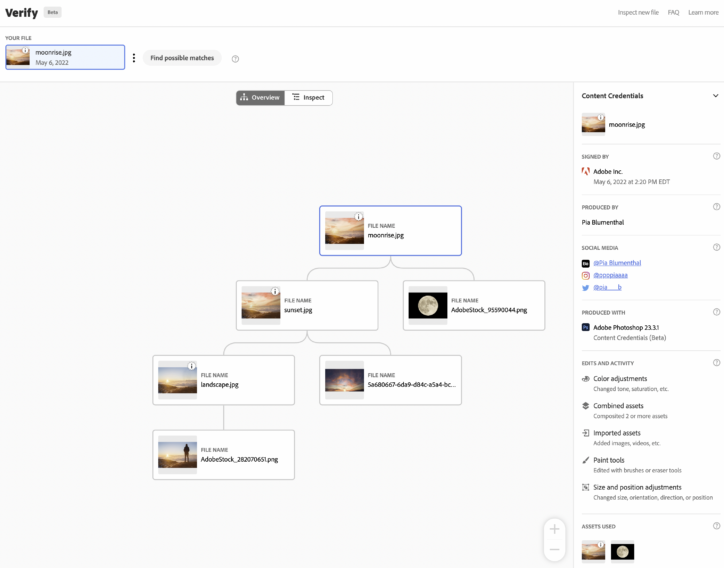

On the technology side, Adobe has implemented their version of fingerprinting. For instance, you can take the pixels of an image, the frames of video, or the waveforms of an audio file and actually take a fingerprint like a human fingerprint. This can help uniquely identify the piece of media and know who it belongs to and where it came from, even if the metadata is stripped or lost. People can choose where they want their content stored whether it’s Adobe Cloud or a decentralized blockchain. Even if there is a mashup of artists or even a screenshot of a photo, the origin story is always there.
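
The episode does not describe how Adobe’s fingerprinting works internally, so the following Python sketch uses a generic technique, an average hash, purely to illustrate why a fingerprint computed from pixels can survive changes that strip metadata. The function names and the tiny 4x4 grayscale grid are assumptions for the example; a practical version would first downscale a real image to a small grid.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count differing bits; a small distance suggests the same underlying image."""
    return bin(a ^ b).count("1")


# A tiny grayscale "image": dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# A uniformly brightened copy, standing in for a re-encode or screenshot.
brightened = [[value + 30 for value in row] for row in original]
# A different picture: bright on the left, dark on the right.
other = [list(reversed(row)) for row in original]
```

Here `average_hash(original)` and `average_hash(brightened)` match because the hash depends on each pixel’s brightness relative to the mean rather than on exact values, while `other` differs in every bit. A lookup service could use such a fingerprint as a key to find stored provenance data even when the file’s embedded metadata is gone.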

Adobe Firefly and Ethical Uses of Generative A.I.

Adobe wants to use generative A.I. to empower creators. Firefly is Adobe’s creative generative A.I. engine that is currently in beta. While other companies may have moved faster getting into the space, Adobe wanted to do it ethically while respecting artists’ rights and copyright ownership. They didn’t want to just move fast and break things. Adobe took their time to find the right ethical balance of providing something powerful to Creative Cloud users.

“It’s also ethical in the sense that Firefly is trained ethically. We do think we’re leading the way and doing this in the right way. We might be slower than other companies, but once you get in Photoshop and on the Firefly website, I think you’d see this reflected in the output.”

Andy Parsons

Adobe’s Firefly approach focuses on training models using licensed Adobe Stock images (in addition to openly licensed and public domain images), not just crawling the internet for any piece of work. The models were trained in accordance with their Stock Contributor license agreement (and here’s the Stock FAQ).

It’s Adobe’s intent to build generative A.I. in a way that enables customers to monetize their talents, much like they’ve done with Stock and Behance. And they are developing a compensation model for Stock contributors and will share details in the coming weeks!

Andy mentions that generative A.I. should serve as a creative co-pilot, amplifying human potential rather than replacing individuals.

While Adobe Firefly is currently in beta, it is unavailable for commercial use. The watermark is in place to remind beta users of this. Adobe will compensate creators once Firefly is out of beta, and the watermark will also be removed.

“It feels good to use it because you know that you’re not impeding anyone else’s creativity or encroaching on their rights as artists. And that’s the feedback we’ve gotten loud and clear from the market…All of the images and generated material that comes from Firefly is imbued with content authenticity from the get-go. It’s built-in.”

Andy Parsons

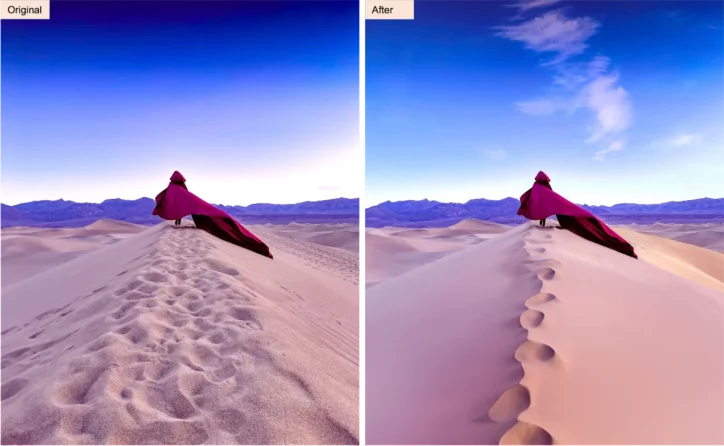

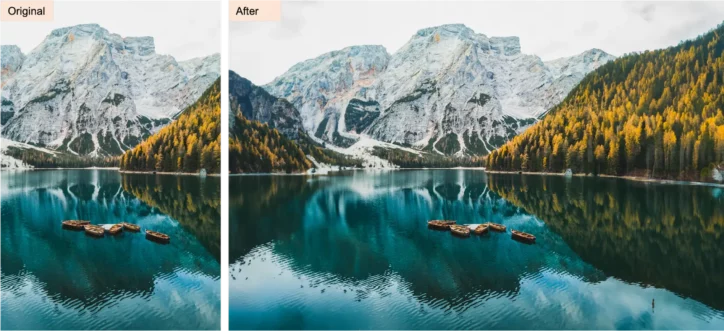

Andy talks about Generative Fill in Photoshop, a recently introduced feature that will allow people to amplify their creativity. Generative Fill is a “revolutionary and magical new suite of A.I.-powered capabilities grounded in your innate creativity, enabling you to add, extend, or remove content from your images non-destructively using simple text prompts. You can achieve realistic results that will surprise, delight, and astound you in seconds.”

Beyond the regular power of Photoshop, there’s this extra tool that will help generate new ideas and material for the art you’re producing. While the tools still need to mature, people can feel good about using them because they know they’re not impeding anyone else’s creativity or encroaching on their rights as artists. Plus, all content generated through Firefly is embedded with content authenticity from the beginning.

Andy highly recommends that our community check out all the amazing content that is being created through Adobe’s tools. A quick Instagram search of #AdobeFirefly shows what people are up to along with Adobe’s official Instagram account. Be inspired, experiment, and explore how you may use generative A.I. in your own work. And on a positive note, Andy does believe more and more people will use generative A.I. ethically and produce really amazing things.

Creativity For All

Adobe’s core principle of “creativity for all” underscores their commitment to providing tools and resources for individuals to express themselves. The democratization of creativity has long been part of Adobe’s DNA, enabling users to pursue their artistic endeavors. This culture has affected the development of Firefly and other Adobe tools so individuals can confidently express themselves in a transparent and ethical manner.

Adobe has developed a layered approach to facilitate the adoption of content credentials. The vision is that anybody who wants to implement or use content credentials should be able to do that, whether they’re in the Adobe ecosystem or not.

It starts with open technical standards, which provide specifications and guidelines for implementation. The next layer involves open source tools, including software development kits (SDKs) for mobile platforms like iOS and Android, as well as low-level libraries for back-end services. These resources make it easier for developers to integrate content credentials into their applications. The third layer focuses on user interface components, offering pre-built open source tools that can be easily incorporated into websites or applications.

It’s truly about collaboration and working together. For instance, Adobe partnered with industry-leading camera manufacturers, Leica and Nikon, to implement provenance technology into Leica’s iconic M11 Rangefinder and Nikon’s mirrorless Z 9. Both partnerships help advance the CAI’s efforts of empowering photographers everywhere to attach provenance to their images at the point of capture, creating a chain of authenticity from camera to cloud. It was the first time that this secure-capture technology was put into the hands of the creative community.

“We’re real people, we’re real human beings there, you can talk to us. But most importantly, it’s not just the Adobe team, it’s like hundreds of individuals who are also working to do this.”

Andy Parsons

Andy encourages developers to engage with their community through platforms like Discord, where they can exchange ideas, seek assistance, and share their experiences. The open source ecosystem surrounding content credentials fosters collaboration and enables individuals and businesses to explore various use cases beyond what Adobe initially envisioned. Collectively we can take the right step forward.

Rethinking Media Literacy

How will you know if a piece of content has the proper attributions and origin?

Adobe’s vision includes a groundbreaking feature that aims to enhance transparency and context in digital media. By simply hovering over an image or video, users will be greeted with a distinctive trademark icon, indicating the presence of additional information. This icon, representing the C2PA standard, will not pass judgment on the truthfulness of the content but will provide users with access to relevant details and context. Clicking on the icon will reveal a convenient overlay, displaying critical information such as the image or video’s signatory. The technology is not saying this piece of content is right or wrong. It’s simply providing people with additional context so they can make informed decisions. People can decide for themselves if a piece of content is trustworthy, but they need to have the information to do so. By making the icon easily recognizable and widely adopted, Adobe aims to give it the same level of importance and recognition that the lock symbol has in cybersecurity. However, the next step is educating people.

“We need to rethink media literacy.”

Andy Parsons

Andy is passionate about media literacy, and rightfully so. It’s a critical investment we need to make. Recently, Adobe released the first chapter of their approach to media literacy education in schools. Developed in collaboration with external partners and experts from NYU, they have created three curricula catering to middle school, high school, and higher education levels. The aim is to revamp media literacy by encouraging critical thinking, skepticism, and interrogation of media. While traditional methods of analysis are still important, the landscape has changed with the advancement of synthetic media tools. Adobe emphasizes the need to understand the capabilities of these tools and the potential for manipulation. They provide free access to their curriculum on Adobe Education Exchange (EdEx), where educators can download PDFs and explore the resources. The curriculum focuses on teaching students alternative ways to scrutinize media, including using tools like Google reverse image search and verifying context before sharing information. Addressing the urgency of the matter, Adobe urges educators to adopt this curriculum and emphasizes the importance of integrating media literacy education into classrooms.

But we also have to invest in understanding media literacy as adults. Andy is actually optimistic that some of these techniques can be effective in the hands of journalists and forward-thinking adults even within the next year when it comes to media consumption and looking ahead at the 2024 elections.

A.I. Future Predictions and Getting Involved

In terms of timeframes, Andy acknowledges the difficulty in predicting the exact timeline for broad adoption of certain technologies and concepts like media credentials and the metaverse. However, Andy expresses confidence in seeing exponential uptake, interesting use cases, and widespread adoption within the next year or two, and certainly within three years.

Andy believes that cryptographic provenance and verification will become a standard feature for media and virtual assets, ensuring transparency and trust. While he can’t pinpoint when this will happen, Andy has hope and anticipates significant progress within the next two years, envisioning a future where conversations revolve around the next wave of adoption rather than introductory discussions.

“If you believe in some of the things we’ve talked about today, there is absolutely a way to get involved and help in some small way.”

Andy Parsons

Andy reiterates and encourages our community to actively participate in the mission of content authenticity and join the CAI. Andy acknowledges that people often wait for things to happen passively but emphasizes that in this case, there is an opportunity to contribute and make a difference. Everyone can find a way to get involved! Visit our additional links mentioned in this podcast below to connect with Andy, join CAI, and converse on Discord.

Links Mentioned in this Podcast

- Follow Andy on Twitter

- Connect with Andy on LinkedIn

- Content Authenticity Initiative (CAI) Website

- Adobe Discord

- CAI Open-Source

- CAI Twitter

- CAI LinkedIn

- Coalition for Content Provenance and Authenticity (C2PA)

- Karen X Firefly Demo

- Leica M11

- Adobe Firefly

- Adobe Supercharges Photoshop with Firefly Generative A.I.

- Adobe Partners With Leica and Nikon To Implement Content Authenticity Technology Into Cameras

Continue the Conversation

Thank you, Andy, for being our guest on Creativity Squared.

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency who understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 100 arts organizations.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

Andy Parsons: So if you can question anything credibly, then nothing is true. Right? It’s absolutely the case that Donald Trump could have said, interesting. I never said that on that bus. I don’t know what that recording is, but I didn’t say it. And if there’s reasonable doubt and no way to prove or understand or contextualize something, then literally nothing is true.

I hate to, you know, say it in such dramatic terms. And that’s exactly what’s underpinning the urgency that we all feel to get this foundational element of objective fact into all of our media. And if it’s not there, then you’re on your own. And maybe you question it as we said earlier, but if it is there, you have some information that you need to decide whether it’s trustworthy.

Helen Todd: On today’s episode, we have Andy Parsons, who’s the Senior Director of Adobe’s Content Authenticity Initiative, also known as the CAI, which is creating the open technologies for a future of verifiably authentic content of all kinds.

With collaborators across hardware, software, publishing, and social platforms, the CAI is empowering communicators, journalists, and artists with secure provenance. For consumers, this important work restores trust and transparency to the media we experience.

Throughout his career, Andy has worked to empower creative professionals with innovative technologies. Prior to joining Adobe, Andy founded Workframe, the pioneering visual project management platform for commercial architecture, and that was acquired in 2019.

Andy also previously served as CTO at McKinsey Academy, which is McKinsey’s groundbreaking educational platform, and he also co-founded Happify, the world’s leading mobile platform for digital therapeutics and behavioral health. As a keynote speaker, podcast guest and invited expert, Andy has addressed audiences across mediums and at conferences, including where I met him at South by Southwest.

Today you’ll learn why there’s an urgency to restore trust online, how Adobe is leading the industry in ethical generative AI, CAI’s approach to establishing an open industry standard for content authenticity and provenance, the need for media literacy, and some tantalizing teases about Adobe features in the pipeline.

Enjoy.

Theme: But have you ever thought, what if this is all just a dream?

Helen Todd: Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox on YouTube and on your preferred podcast platform. Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space.

The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Welcome Andy to Creativity Squared.

Andy Parsons: Thanks, Helen. It’s a pleasure to be here.

Helen Todd: So I first met Andy this year at South by Southwest, where he spoke on the panel, The Ethics of Deep Fakes: Are They Always Bad? And it was such a great panel and we’re gonna dive into some of the topics discussed here, but in one of your other interviews that I was listening to ahead of this, you mentioned, related to the Content Authenticity Initiative that you’re Senior Director of, that the world needs this.

So let’s start here. Why?

Andy Parsons: Oh, such a good question. How much time do we have? No, I’ll keep it brief. So let me first define what we think of as content authenticity. You know, we’re living in an age where even week by week, we see more powerful photorealistic, video-realistic, audio fakes used for both creative purposes, but also, you know, potentially negative, damaging purposes.

And we think on the Content Authenticity Initiative at Adobe, that through standards and technology and open source, granting people a fundamental right to understand what it is that they’re looking at or experiencing is just something that is missing on the internet.

Some people say we’re restoring trust. I think we’re bringing a level of trust and it’s really a simple idea. We’ll get into the details, but it is that if you’re looking at something or experiencing something online and you wanna know more about it, you wanna know where it came from. In some cases, who made it, who’s taken responsibility for it, whether AI was used, you should be able to know that by simply tapping something or clicking something, or listening to something.

And, you know, in case it’s not obvious why the world needs this now with, you know, worldwide elections and the 2024 US election coming up, it’s that without it, we live in a very fragile, fragmented world of truth or lack thereof, where anybody can say anything.

And if you can’t, if anything can be faked and anything can be a photograph that actually wasn’t made by a camera and doesn’t reflect reality, then how do we even define reality? So, you know, holding a grasp on what is actually real, what is trustworthy is what the world needs, has always needed, but I would say is particularly challenged in these recent days.

Helen Todd: The timeliness of this conversation couldn’t be even more perfect cuz we’re recording on Friday, May 26th and this past Monday, we saw the fake AI photo of the Pentagon blast that circulated. It was totally fake and then hit the stock market too, which might be the first AI-generated image to impact the stock market.

So I think that just punctuates the point of the need for this.

Andy Parsons: Absolutely. Yeah. And I don’t know if it’s the first, it’s certainly the first that got the level of attention that this got and the market dipped for five minutes. And we know markets are largely automated. You know, there’s probably a place for content authenticity and provenance, this idea of knowing where things came from in these automated trading systems. That might be a topic for another day.

But, yeah, it’s a good example and a timely example. And yet another reminder for those of us focused on the space that the impact to human beings is real and we need to be careful of it and pay attention and put measures in place to reduce the impact of fake media.

You know, disinformation is nothing new, but the degree to which disinformation is easily built and made and disseminated is pretty new with social media and generative AI.

Helen Todd: Yeah, and something that you had said in another one of your interviews, or I think it was a CAI presentation, that our objective reality is very much in question now, so as we, some would argue that this has already happened. I might say it’s happening in the next few weeks or months.

So I was so glad I was like, that I heard this and that I got to interview you today, so that you can expand on that just as kind of a time capsule of like where we’re sitting in this moment in time.

Andy Parsons: Yeah. So Helen, you and I met at South by Southwest. I’m sure I made those remarks before that and certainly after. I think that was right. You know, I might even say days like seeing this develop in the next few days, certainly weeks, months. As we all expected, the photorealism of things like Midjourney and other tools, and even Adobe’s own Firefly, which I think was not released, when we first encountered each other.

These things are very easy to use, very powerful for creativity. But the more power you put in creatives’ hands, the more potential damage you put in bad actors’ hands. So, you know, we’re really about empowering good people and good actors to do the right thing, not to catch deep fakers.

But over time we expect that everybody will come to expect that. If you, if you want that extra context and you wanna click on something and know what it is and where it came from, like I said, you should be able to have that.

And media that doesn’t have it, that has no evidence of its origins is something you should call into question because, you know, you’ll find that Adobe Firefly, Stability AI, all of the leading generators of media that are, that’s purely synthetic or, you know, using latent diffusion and whatever the next technology is gonna be, are adopting this. Stability has adopted it. Firefly, of course, has it built in at the core.

And we need this now more than ever. And the more we see, you know, we, this is not the, the Pentagon photo is not the last, as you’ve noted, it was kind of poorly done. But even the notion of poorly done, if you go back to 2019 and the Nancy Pelosi so-called cheap fake, which was, you know, used no artificial intelligence or sophisticated techniques whatsoever, and yet was effective, right?

It got shared. The President of the United States shared it. You needed only to look at a few seconds of that video and say, huh, something’s not quite right. But we didn’t have the ability to say, what is this actually? Like, was this edited? Is this from a video camera? Was this taken on location? Was it manipulated?

So, the notion of what’s like barely passable as a fake and believable has escalated in terms of its believability. So you have to look pretty closely at that Pentagon photo. And if you’re scrolling by tiny thumbnails in your social media feed, it’s not so obvious that this is fake, right? And if it fits your worldview and you want to share it, who knows what the potential damage or impact is to hundreds or thousands of downstream followers of your followers, of their followers.

So I do think that, you know, notion of days or weeks or months, underscoring the urgency that we all feel to have a foundational understanding of objective reality is, like you said, has never been more critical and we need to accelerate.

You know, I’m very proud of the work we’ve done with our partners and in our standards body inside and outside of Adobe. But there’s a lot more to be done. There are a lot more partners that we need to see adopting this, and I feel that urgency every day. I’m sure you do as well.

Helen Todd: Yep. Well, I feel like this is a great segue to actually introduce the Content Authenticity Initiative to those who may not know it, and kind of your approach, to the standards, and of course dovetail into the tools that Adobe is enabling.

So if you don’t mind, giving everyone an introduction to how you’re approaching this, these urgent topics that we’re all needing right now.

Andy Parsons: Sure. And I know Helen, Content Authenticity Initiative is a mouthful. I stumbled over it for the first year myself. You can say CAI, which isn’t much easier.

But, yeah, the CAI is an initiative that was started by Adobe in 2019, announced at 2019’s Adobe MAX, in partnership with the New York Times and Twitter at the time. It’s since grown to a partnership of over a thousand members. I think we’re approaching 1300 as of this recording.

And these are all folks who are interested in the topic of content authenticity. I’ll get into our strict technical definition of what that is in a moment, believe in the idea of provenance, which is understanding again, where things came from, what they are, who made them, whether it’s real or made from a generative AI technology or platform.

And a good chunk of those, in the hundreds, are actually actively implementing using open source code. So how does it all work? It’s effectively a way to capture a new kind of metadata that is cryptographically verifiable. And all that means is, if something is taken on a camera or goes through Adobe Photoshop, the technology captures critical information that is verifiably factual about how it was made.

So, was Generative Fill used in Adobe Photoshop? This is a new feature that launched a few days ago. Was a person removed from a photograph? Was a police car added to a photograph? Was this certifiably known to have come from a camera? What were the GPS coordinates of that camera?

Now it’s natural to say, wow, that’s a lot of information we’re capturing. I haven’t even talked about identity, which can also be captured and it’s very important in some use cases. People say, oh, this is already sounding like surveillance, right? Do I want all my media to have all this information about where I was and what I did?

The answer of course is no. This is all optional. So if you’re the Associated Press, one of the many CAI members in the media ecosystem, you require folks who work for the Associated Press and folks who submit images as people who work for the Associated Press and are in remote regions to turn on content provenance so that the AP can make sure they’re adhering to their own high standards for information integrity.

But that doesn’t mean that downstream consumers need to know the GPS coordinates of the photographer and in fact, that might put that photographer in harm’s way. But even if you start the provenance trail at the point of delivery, simple ideas, like if something purports to have come from the BBC and you wanna trust the BBC, you do trust the BBC’s worldview and sourcing methods, just provably knowing that this actually came from the BBC is really important. We don’t have that now.

No matter where that image goes or no matter where that video goes, knowing that it traces back to the BBC is something we need.

Similar with timestamps, you know, we’ve seen lots of, sort of low entry, simple types of disinformation where an image or a video is simply mis-contextualized like an image of a wildfire that’s actually 20 years old is put out there as if it happened yesterday in the middle of New York City, right?

Knowing when something was made, captured, that it was captured, not generated or made by some other means, and where it came from, even at that last hop between the publisher and you. These are things that we can have. The technology’s very straightforward.

And then content authenticity can do a lot more. It can actually capture verifiable identity. So if you’re an artist and you’re Banksy, without revealing your identity, there’s support for pseudonymous entities saying, yeah, this is actually Banksy. This is where you’re getting the idea of actual content provenance. The word is in fact borrowed from the art world, where, just like you wouldn’t buy a Picasso painting unless you had a pretty good sense that it was what it purported to be, you know, we think we have the ability and the right to know what all of our media is.

And in cases where it makes sense, like creative use cases where an artist wants to get credit for their work, identity can be attached and should be attached. So we think of content authenticity, we think of authentic content and authentic storytelling as existing on a spectrum where in some cases you might wanna share identity, but not details about how the thing was made.

We think another end of the spectrum you might wanna share no details about who you are or where you are, but, you know, things that your downstream readers might want to know and not identity in that case. And then there’s a lot of use cases in the middle of that, right?

We think at Adobe that AI transparency is one of those. So, a requirement or a moral obligation or an ethical obligation to disclose that something was made entirely by AI and not by a human is something that we put in by default in Adobe Firefly and our creative cloud tools that make use of it. We just think it’s important to be transparent and disclose that.

And the other, you know, question here is why not? Why not do that? Why not give people this right? On social media and platforms and news organizations and tools and cameras? It’s optional, and it’s critically important.

So, you know, the technical details involve off-the-shelf cryptography and kind of a new method for signing things. But effectively, just like you digitally sign a document, a PDF, your lease or your deed to your house, we think that media should be signed in the same way so that we can understand its origins.
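To make the signing idea above concrete, here is a minimal Python sketch of tamper-evident media. It is an illustration under stated assumptions, not how content credentials are implemented: it uses an HMAC (a shared-secret tag) from the standard library as a simple stand-in, whereas the C2PA standard relies on public-key signatures. The key and the function names `sign_media` and `verify_media` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration only. A real signer would hold a private
# key tied to a certificate rather than a shared secret like this one.
SIGNING_KEY = b"demo-key-not-for-real-use"


def sign_media(media_bytes: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex tag that binds the key holder to these exact bytes."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, media_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


original = b"pretend these are the bytes of a photo"
tag = sign_media(original)

print(verify_media(original, tag))         # True: file is untouched
print(verify_media(original + b"!", tag))  # False: file changed after signing
```

The point of the sketch is only that any change to the bytes after signing makes verification fail, which is what lets a viewer trust that a file is what its signer said it was.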

Helen Todd: Yeah thank you for explaining that. And one of the really interesting aspects of it that I found through one of the demo videos, which I’ll make sure to include in the episode’s dedicated show notes and blog posts, is even if there’s a screenshot of an image, it still can be verifiable.

So can you share how that works? Cuz I know that’s a concern among a lot of artists as well, and in their work being, you know, ripped off the internet and used for other purposes.

Andy Parsons: Yeah, thank you for asking and I’ll, I’ll take the opportunity. I was remiss in not mentioning the underlying standard and openness of all this. So this is not an Adobe technology. My team at Adobe works on it. We’re full-time focused on it.

But it’s really based on an open standard that we help develop in an organization that’s a nonprofit outside of Adobe called the C2PA. In case it was hard enough to say content authenticity initiative, the C2PA is the Coalition for Content Provenance and Authenticity.

And that’s a nonprofit we assembled under the auspices and governance of the Linux Foundation, which is a well-heeled organization in the world of open standards and open source. And that body exists to write the technical standard and the specification. We’re now in our third version of that. It’s available, it’s free, there’s no intellectual property, no patent encumbrances, it’s absolutely free. The work is done in public, and I urge your listeners if they’re interested in the deep technical details to take a look at C2PA.org.

On top of that, Adobe, through the CAI has built open source implementations in various degrees to give to the world. Again, no, no IP, no licensing requirements. It’s all free. We encourage people to contribute back to it and be in touch with our team, for, for all of those purposes.

And one of the things in the standard that we have implemented at Adobe in our version is something called fingerprinting. And that says, you know, look, at the end of the day, this digital signature is data. It can be surgically removed or stripped out from an image or a video. It’s totally possible to do that. At the end of the day, these are just digital bytes and they’re on a disk or on a website somewhere, and it’s possible to lose this data.

However, we’ve come up with a way through what we call soft bindings in the standard to say, using only the pixels of an image or the frames of video or the waveforms in the audio file we can actually take a fingerprint, just like a human fingerprint and say, we can uniquely identify this piece of media and look it up in a cloud somewhere, or even on a blockchain. There are a bunch of CAI members who are doing this on decentralized blockchain technologies. And then you can recover that metadata.

So you can kind of decide whether you wanna store this in the cloud or on a blockchain, on a case by case basis. If you are that journalist in the field, you might not want to trust Adobe’s cloud or anyone else’s cloud. If you are an artist who wants attribution, even if your image is screenshotted or you know, co-opted in some other way, you might opt to store this stuff in the cloud.

And in fact, in Photoshop where you can see this feature called content credentials in all its glory, you can decide, you can click a checkbox and say, I wanna put this in my file so it goes wherever the file goes and I wanna store it in the cloud. So it’s recoverable no matter what happens. And those bits of provenance data, you know, you’re not storing the image, you’re just storing the cryptographic chunk of data.

It’s like 1K worth of information that’s a matter of public record effectively. So no matter where that image or video goes, you can always get back to the provenance data.
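The fingerprint-and-lookup idea described above can be sketched in a few lines of Python. This is a rough illustration only: it uses a simple "average hash" over a tiny grayscale grid, which is a swapped-in textbook technique and not the fingerprinting algorithm Adobe or the C2PA standard uses. The in-memory `store` dictionary stands in for a cloud lookup service, and all names and sample data are hypothetical.

```python
from typing import Dict, List, Optional

Image = List[List[int]]  # grayscale pixels, 0-255, row-major


def average_hash(pixels: Image) -> int:
    """One bit per pixel: 1 if the pixel is brighter than the image mean.

    A real system would first downscale the image to a small fixed size.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def recover_provenance(
    pixels: Image, store: Dict[int, dict], max_distance: int = 2
) -> Optional[dict]:
    """Find the closest stored fingerprint and return its provenance record."""
    fp = average_hash(pixels)
    best = min(store, key=lambda known: hamming(fp, known), default=None)
    if best is not None and hamming(fp, best) <= max_distance:
        return store[best]
    return None


original = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A "screenshot": same picture, slightly brighter, with no embedded metadata.
screenshot = [[min(255, p + 7) for p in row] for row in original]
unrelated = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]

# Hypothetical cloud store: fingerprint -> provenance record.
store = {average_hash(original): {"creator": "example artist"}}

print(recover_provenance(screenshot, store))  # {'creator': 'example artist'}
print(recover_provenance(unrelated, store))   # None
```

Because the fingerprint is computed from the pixels alone, the screenshot still maps back to the stored record even though nothing was embedded in it, while an unrelated image finds no match.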

And one other thing I’ll, I’ll mention that’s kind of novel and interesting in this particular approach is, if Helen, you have an image and I decide to use it in my Photoshop creation or my Premiere Pro production, you’ll get credit for it.

And the provenance data that came in with that image, we call it an ingredient, will travel along on export from Photoshop or Premiere or any other tool that supports this. And that way we build up kind of a genealogy saying, hey, this is a mashup of 20 different artists. All those artists should also get credit for their work.

And similarly, if I bring in a montage of an Associated Press photo, a Reuters photo or other sources, we should know what those are as well. And if they’re all enabled to use content provenance, whether somebody takes a screenshot or not, we can recover all of that information where you need it.
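The "ingredient" genealogy described above is essentially a tree: each exported asset carries the records of the assets that went into it. A minimal Python sketch follows, with the caveat that the `Credential` class, its fields, and the sample file names are hypothetical simplifications and not the actual C2PA data model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Credential:
    """Hypothetical, simplified stand-in for one asset's provenance record."""
    title: str
    creator: str
    ingredients: List["Credential"] = field(default_factory=list)


def all_credits(cred: Credential) -> List[str]:
    """Walk the ingredient tree depth-first and list each creator once."""
    seen: List[str] = []

    def visit(node: Credential) -> None:
        if node.creator not in seen:
            seen.append(node.creator)
        for child in node.ingredients:
            visit(child)

    visit(cred)
    return seen


# Sample data for illustration only.
helen_photo = Credential("skyline.jpg", "Helen Todd")
wire_photo = Credential("wire.jpg", "Associated Press")
collage = Credential("collage.psd", "Andy Parsons", [helen_photo, wire_photo])
export = Credential("final.jpg", "Andy Parsons", [collage])

print(all_credits(export))
# ['Andy Parsons', 'Helen Todd', 'Associated Press']
```

Each export wraps the previous records rather than replacing them, so credit for every contributing source survives however many tools the work passes through.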

Helen Todd: Yeah, that’s really cool. And I think one interesting thing about this also is kind of the split between the media landscape and the creators, and the different application use cases and how one might navigate using these tools over the other. And so how are you thinking about the difference between, and you’ve kind of already touched on it a little bit, the use cases for creators wanting these digital signatures versus, you know, a journalist and how and when they might want to use these?

Andy Parsons: Yeah, like I said, it’s all opt-in. Except in the case where Adobe’s made a choice that says if you use generative AI through Firefly, we’re going to require that that be documented. That’s, that’s what we think is the correct, ethical thing to do for all the reasons we’ve already discussed. Regarding capturing identity details about your camera, et cetera, that’s gonna be on a case by case basis.

So if you want to, declare a copyright or declare ownership or say, you know, I’m a big enterprise and I wanna make sure that all my users are indemnified from anything they do with this genAI tool so we’re gonna stamp it with our identity on the way out.

Or if you’re the BBC saying, our customers just need to know that we have signed off on this, all those use cases are possible and enabled, and you’ll see some of them coming to fruition live in the next few months.

And then there’s the independent photojournalist, right? Who can decide to enable this on a camera. We have two cameras that will be in market soon that were demoed at Adobe MAX last year, one from Leica and one from Nikon, and there are others that we’ll be announcing soon.

In those cases, that’s a feature you enable on your camera, you set it up just like you might your IPTC data for those who know what that is, you turn it on, you shoot. And we can know that those images came from this camera model.

Again, not to surveil you or know your IP address or anything else, but signing on the device, whether you’re online or offline, might be critically important for a journalist.

And, you know, I’m not a journalist or a photojournalist, but I certainly work with many at Adobe. One of my colleagues ran AP Photo for many years and has spent his life, you know, dedicated to information integrity and truth telling.

And I’ve heard from, from this gentleman and his colleagues that that base level of understanding and verifiability is really important and we’re on the verge of losing it because of genAI. So those are, those are all the use cases on the spectrum. There are others that we could delve, delve into, and there’s a lot of nuance here, right?

Which is why we leave it in the hands of creators to decide how they wanna make use of this. But simply enabling them to have access to it has caused a broad, kind of wave of enthusiasm in our community of over a thousand members. And we think there’s gonna be more in the coming years. And frankly, the missing piece is seeing this show up on social media and platforms, which is what we’re also working on.

We have lots of engaged, energizing conversations with all the typical players you would expect, and I’m optimistic because they are all interested. You know, whether we need legislation to get this over the line and see verifiable objective facts about our media, that’s not for me to decide, but seeing people adopt this on their own because they feel the urgency that I do is really rewarding, and we’re seeing more and more of it every day.

Helen Todd: And I know we have a lot of Leica photographer listeners just because I’m a big part of the Leica community and helped launch them on social media for five and a half years, we were there.

Andy Parsons: Oh, wonderful.

Helen Todd: Yeah. So

Andy Parsons: So the range,

Helen Todd: A lot of Leica love.

Andy Parsons: Yeah. That, that’s great. So the camera we showed at MAX was a prototype M11 and, I won’t, I won’t scoop any news, but, you know that that camera of course will find its way into market soon. We’re very excited for that.

Helen Todd: Yeah, I’ll be sure to link that announcement in the show notes as well.

Andy Parsons: Okay

Helen Todd: You mentioned legislation and it came up in one of your other conversations about the deep fakes enforcement task force legislation that you’re kind of behind. I was wondering if you could tell us more about that, especially in light of this Pentagon image that came and kind of where, what the intention is and where that’s at right now.

Andy Parsons: Yeah, so just a minor correction. We’re not behind it. We’ve contributed to it. And lots of technology companies, you know, have input and liaise with legislators and often Adobe is called on to testify and speak with legislators and their staffers.

And we’ve been involved in some of that. You know, the details of the Deep Fake Task Force Act are to basically spin up government agencies to study the problem, and recommend solutions for all government agencies and potentially the legislators to down the road legislate platforms and others.

I don’t know where that stands right now actually. And I’d be speaking out of turn if I, you know, gave you my assessment of government progress and congressional ins and outs. It’s just not my area of expertise. But I do think we need to look to Europe, to look at the AI Act and something that’s moving a bit faster through the legislative channels that really concerns itself with active steps to legislate.

And again, not necessarily my or, certainly not Adobe’s point of view on whether we need legislation. My point with all that is, if there is legislation to be brought to bear, we want it to include this notion of disclosure and we feel very strongly that there should be one way to disclose cryptographically what something is.

There shouldn’t be 15 ways. Because that’s effectively, you know, zero ways. There shouldn’t be competing standards, there should be one standard. You know, I’ll tell you from my point of view, I think the C2PA standard we spoke about a minute ago, is that one standard.

But if something better comes along, if somebody says no, there’s a fundamental improvement here that can be brought to bear, we’d be glad to embrace it.

Right now I think the C2PA is that, it’s far ahead. It has adoption, it has traction. And if and when legislators look for a particular methodology that’s open and free and not owned by any particular company or individual, as a way to imbue media with provenance and context, we think they should reach for the C2PA. So all of our relationships with government officials and conversations are about that.

You know, I have a personal opinion on whether we need legislation. I think legislation can be effective when you have a potential threat facing humanity and democracy. But again, it’s not my area of expertise to decide or understand how and when that should be brought to bear.

But if it is, we think we have something very important to offer.

Helen Todd: Yeah, I think that’s great. And, you know, this is outside of my expertise too, but I’m very much for international standards that everyone embraces, in general.

Andy Parsons: Yeah.

Helen Todd: Across a lot of things. And including algorithm transparency, which is a whole nother, a whole nother thing.

Andy Parsons: Absolutely.

Helen Todd: I know Adobe has actually come up multiple times in different, episodes on our show of being kind of the leading standard of ethical approach to generative AI. And you mentioned Firefly, but I was wondering if you could actually explain to our listeners who may not know what Firefly is and then some of the ways that you’re kind of leading the industry in your approach to generative AI

Andy Parsons: Yeah, sure. And there’s a lot to read about this. I’ll, I’ll just sort of gloss over the surface. We could share some links in the show notes. So, you know, it’s, it’s very clear that Adobe wasn’t the first to the starting line with genAI right? There’s a lot of activity, open source. There are companies like Stability and Midjourney who are moving very quickly, improving quality very quickly.

And, you know, in that universe of companies outside of Adobe, it’s also clear that some of them, you know, move fast and break things, so to speak. The old kind of Facebook adage, and there are many ways that things get broken. One of them is respecting artist rights and copyright and ownership, and deciding when fair use applies and when it doesn’t apply.

At Adobe, we took our time, you know, to find the right ethical balance of providing something really powerful to Creative Cloud users, but also respecting artists rights because those are our constituents and our audience and the folks that we care most about.

We had this feeling that the idea of a creative copilot that helps you do extraordinary things and sort of 10x’s your work is where genAI should be focused, not on wholesale replacing people. There’s a new kind of creativity.

Helen, I think you might, you and I might have even spoken about this. When photography was invented, there was, you know, a tremendous upheaval in the artistic community of painters and artists of all kinds saying, well, this is it. Like this is gonna put us all outta business and what is art even anymore?

And I think it’s safe to say hundreds of years later, we’ve basically survived that and figured out new ways to be creative. So these things, you know, raise the creative potential of humans. And I feel very strongly about that, as does Adobe.

So, you know, Firefly, basically amplifies that in every possible way, starting with training on licensed Adobe stock images, right? Not crawling the public internet and taking information from people that may be usable for this purpose or not. We know that the copyright office and copyright law worldwide has a lot of catching up to do.

I was just at the CEPIC conference in France a couple of weeks ago where this was a hot topic of discussion and, you know, across the world, Europe, UK, the US, global south, you know, everyone feels like there’s a lot we need to learn about copyright and largely existing copyright law doesn’t apply.

We need to figure that out. But while legislators and copyright experts figure that out, we wanna be very fair and ethical about what data is used to train our models. And that’s, that’s where Adobe started this process. Second, there are tons of innovations that basically focus Firefly on that creative amplification potential.

You know, you can use it, you can try it. The feature that just launched in Photoshop, generative fill is really there for people who want to amplify their creative intent and creative potential in images and art and Photoshop. So in the context of Photoshop with all the, you know, beautiful tools and power that you’ve come to experience and enjoy, now you have this extra thing that’s gonna be pervasive where you need it to generate new ideas, and add generative material to the art that you’re producing in Photoshop.

It’s also ethical in the sense that it uses Firefly. Firefly is trained ethically, and we do think we’re leading the way and doing this in the right way. We might be slower than other companies, but what you get in Photoshop and other tools and on the Firefly website, firefly.adobe.com, which is fully open now, I think you can see this reflected in the output.

It’s really good at some things. It has some maturity on other things, but it feels good to use it because you know that you’re not impeding anyone else’s creativity or encroaching on their rights as artists. And that’s the feedback we’ve gotten loud and clear from the market. And I left out the most important part, of course, which is all of the images and generated material that comes from Firefly is imbued with content authenticity from the get-go. It’s built in.

Helen Todd: Yeah, I think it’s great and I applaud Adobe, for really leading the industry and what ethical, generative AI can look like. And one of the things when we met in Austin, or one of the tips that you also put on stage is to tell creators to go ahead and start using these tools, cuz it’s already, I know, I think the, the generative fill is in beta, but I know the content credentials is already available.

So I don’t know if you wanna expand on that at all.

Andy Parsons: Yeah, so when we were in Austin, it was fully launched in Photoshop, it’s been there for about a year. Just like every Photoshop feature and Adobe feature, it gets incremental updates. I think it’s become easier to use even since, since March. So we’re improving it incrementally, adding things to it.

It’s also launched in Lightroom as a tech preview. And that’ll be available to everyone who turns on tech preview and available in a generally available version in the coming months. It’ll be available in Adobe Camera Raw, so anywhere in that kind of photographic Photoshop creator workflow, you’ll have this available to you.

As I mentioned in the Firefly web app, which is kind of a playground for all the amazing things that Firefly can do, including text effects where you can type some words and say, hey, you know, I want this to look like a furry Abyssinian cat. That’s a weird example, but I have two of those just to the right of me on camera here.

And you know, the letters will actually take on the persona of a furry Abyssinian cat: textures, materials. That’s all using the Firefly generative engine and everything that you get out of Firefly Web. And as Firefly makes its way into applications, we’ll also have this AI transparency ethical standard that we have at Adobe, implemented as content credentials.

So yeah, effectively in those tools and additional tools in the Creative Cloud, you’ll be able to turn on content credentials, which says, you know, when I export or take something out of this tool, maybe bring it into another tool, import from my camera, we’re gonna stamp this cryptographic metadata that’s certifiable to say this was made by Andy Parsons, or this was made in Photoshop, version X, Y, Z, using Firefly generative engine version one.

And also I can connect my social media account, so you know, away from the journalism side, more towards the creator side. You can connect Web3 accounts. If you’re making NFTs or you have Web3 credentialing as part of your workflow, you can connect those in your browser using something called connectedaccounts.adobe.com, and that information can also be cryptographically sealed into the files that you export.

And there are various other settings and things. We’re moving towards a world where there’s one place to do this. To set this up, kinda set it and forget it in the Creative Cloud. And then all the applications that you use every day, whether you move between them or focus on Photoshop or Premiere, will inherit those values.

So they’ll be very easy to change, very easy to understand what you’re doing and give creators full agency in whether they capture their identity or not and what kind of identity bolstering they decide to use, whether it’s connecting your Twitter account, your Instagram account, or Behance account. So there’s a lot of power in this kind of simple UI that I encourage your listeners to check out in Photoshop and Lightroom.

Helen Todd: Yeah, that’s great. And I saw Karen X, who’s a big creator, do a demo of the generative fill tool, and it’s very, very cool. So I’ll be sure to include that in the episode show notes.

But since we do like to explore the, the really cool things that these AI tools, open up to, are there any other really interesting projects that you’ve come across from creators who’ve, kind of done the deep dive in and embracing these tools?

Andy Parsons: Yeah, I mean, at the core of Adobe’s kind of approach to creativity is, we don’t have all the answers. You know, the company, people I work with every day are, you know, deep Photoshop experts and photographers, and they do use the stuff in their personal projects. But, every time a feature is released, we learn a ton about, you know, sort of meta creativity.

It’s like, here’s this tool, and then people get creative in how to use the tools in ways that we didn’t even think of. So, yeah, there’s a Discord channel, there’s an Instagram channel. Like I urge people to take a look at what Photoshop creators are doing themselves and the ones that Adobe is promoting.

There’s just myriad amazing things that people are doing with text effects and Firefly and, you know, clever prompt engineering, if you will, to get things out of Firefly, bring them into Photoshop, make beautiful finished images, for all sorts of purposes.

And then I think there’s a whole nother wave, coming from Adobe and other companies who are pushing the boundaries of what genAI is capable of, whether it’s OpenAI or some of the other companies that are doing it.

Shutterstock is doing some interesting things. And you know I can’t give you details but inside the walls of Adobe research, there’s some remarkable stuff happening that would blow your mind and will, you know, will at some point. I’m absolutely sure that at Adobe MAX this year, during sneaks, which is kind of when some of that research gets to bring its head above water and show off what’s possible, there’s gonna be some remarkable things, on the topic of genAI.

But, you know, at the end of the day, importantly, it’s all done ethically as we talked about, and it’s all kind of, in this cocoon of helping creators do more, you know, not to replace them, not to put people out of work, but to elevate the level of creativity and productive output that they can have.

And not surprisingly, some of the things I’ll, I’ll hint at are around video. You know, video, object-based editing rather than pixel-based editing. Just some remarkable things that when you see it, you’re like, oh, this of course, like this should exist and I wanna use this. So, I get excited just thinking about the creative potential of these things.

And Adobe has this, you know, massive, beautiful platform to deploy these ideas and tools that people are already familiar with. So incrementally, I think you’ll see more and more people using them, ethically and in good ways, and producing amazing things.

But I would be foolish to tell you that I know all the amazing things that Adobe creators and others will do with these tools because every day, I’m not a creator myself, but with the power of Firefly, I’m kind of, you know, getting there and experimenting with it and it’s a lot of fun. But in the hands of a professional, it’s just incredible.

Helen Todd: Such a tease, Andy, such a tease of what’s coming. We might have to get you on, after the announcements roll out again.

Andy Parsons: Absolutely, sure.

Helen Todd: Yeah. And it seems like, all these tools, you mentioned you’re not a creator, but it’s kind of just democratizing creativity too, where you might not be able to paint offline. But now with these tools, it kind of digitally gives you some of these, you know, creator superpowers in a way. Do you think of Adobe as democratizing creativity at all?

Andy Parsons: I mean, you know, our mantra that’s, you know, written on the walls of Adobe and all of our social media accounts and we, we live it every day is creativity for all. You know, creativity for a Photoshop artist or a retouch artist or, you know, an award-winning photographer is different than creativity for me.

But if I wanna make something, yeah. That, that democratization is nothing new, I don’t think Firefly is the first tool that introduces it. It’s been part of our DNA for, for decades. You know, truly creativity for all means, that if you wanna make something, you should have the tools and facility to do it.

It doesn’t mean that I’m going to become an award-winning photographer, but if I wanna express something, I know that I can rely on Adobe tools to, to do it. And again, critically, do it in a transparent, ethical way. And if I wanna get credit for my work, we have this relatively new facility and content credentials to make sure that, that I can.

Helen Todd: You had mentioned at one point open source, and I know open source is really important to the work that you all are doing. So I was hoping that you could kind of expand on why open source is important and how you all think about it at Adobe as well.

Andy Parsons: Yeah, thank you for asking that. So, you know, for anything to be broadly adopted again, nothing about content credentials is an Adobe proprietary technology. We’re leading the way in terms of UX, user experience. And the way people interact with content credentials, but it’s all available to everybody.

The vision is that anybody who wants to implement or use content credentials should be able to do that, whether they’re in the Adobe ecosystem or not. And frankly, we need content credentials and authenticity in ecosystems where Adobe doesn’t play like, you know, certain corners of the news ecosystem or the broadcast ecosystem.

So we built this in sort of layers. You can think of this as sort of a set of layers building up to those user experiences in all sorts of products. It starts with standards. So an open technical standard that explains how this works, why it works, where there are weaknesses, in terms of potential threats and harms and attacks, what the countermeasures are that are built into the spec.

But that is a hundred page specification for those who are used to reading technical specifications like in the ISO, in W3C, in the Linux Foundation, that’s great, we need that, it’s effectively the tablets, that say, you know, in carving this will always be forward compatible.

This has gone through scrutiny from security professionals worldwide, from lots of companies in academia, and it’s ready to be used. But, you know, ready to be used in a specification, which is a lot of words on paper and equations and things like that is pretty far from getting it into your product, especially if you’re a VC-funded startup or an entrepreneur or an individual.

So the next critical layer is open source. Open source enables you to do a lot of things. I mentioned that there’s a variety of tools that we’ve published, and not only Adobe is active in open source, there are other open source tools as well.

We think our tools are the preferred tools for developers to use because you know, not surprisingly, this is the exact same code literally that’s running in Photoshop and Lightroom and Firefly. So, you know, we’re using it. We’re not just putting it out there. It’s like in the beating heart of everything we do.

And there are a bunch of things you can do with it. If you’re building a mobile app, there’s an iOS SDK, you can bring it into your iOS app and certainly, soon the same for Android and say, these are the things I want to capture.

I wanna do it in a C2PA compatible way. So anybody who subscribes to the C2PA standard, whether they’re using Adobe open source or not, can read my content credentials and augment them in whatever way they see fit.

The second piece is very low level libraries for services. So the kind of things that, Stability AI is doing and that Firefly does in the backend is when you generate pixels or when you create something or when something arrives in your content management system, these backend services and libraries can make it very easy for you to sign and add content credentials.

And then perhaps the most important third aspect of open source is user interface components. So you don’t have to design, if you wanna add content credentials to your website and have it do all the cool things that Behance, for example, does with content credentials, you don’t have to build that from scratch.

You can grab some open source tools. Drop them into your website code and for free, you get the same kind of user interface that we show on our various tools. And if you wanna tweak that or style it or make it more of a native experience to your tools, you can do that as well. And we have a vibrant Discord community to help people do that.

We have documentation we’re always working to improve, and we have lots of examples of people doing this in the wild and they’re sharing back their source code. So it’s really this vibrant ecosystem that kind of feeds on itself.

And I would urge folks interested in implementing or understanding what developers are doing to join our Discord community, join the CAI itself, join the Discord community, and get involved because you can have, you know, very candid conversations about what’s working, what’s not working.

We’re all in that Discord channel. We’re real people, we’re real human beings. We’re there, you can talk to us. But most importantly, it’s not just the Adobe team. It’s like hundreds of individuals who are also working to do this. And just like we talked about with Firefly and creativity, people are doing things with this open source that I myself didn’t imagine they would do, from body cam imagery for security situations to entire businesses that are built on the idea of provenance.

It’s like, here’s a workflow system for people who care about provenance with enterprise customers and individual customers. So, if nothing else, you can get a lot of inspiration about how this stuff can be used. We’ve only talked about a few use cases, but there are many, many more and they’re all powered by open source.

Helen Todd: That’s amazing. And you had mentioned, the hope, for the social media platform adoption for the content credentials. I think you’ve also mentioned that a one really great application use is also browser adoption, to where you really wanna see that built into browsers. I was curious if you could expand on that and if any browsers have already adopted it into that experience at all.

Andy Parsons: Yeah, so this is, this is a long journey, right? We’re not gonna flip a switch and have everybody adopt content credentials tomorrow. And there’s precedent for this on the internet, right? There was a time when you know, you or your parents or me and my family members might have typed our social security number or credit card number or financial information into a browser that didn’t have the lock icon.

Cause there was a time when there was no lock icon, there was no standard technology for securing the channel between you and the person or company receiving your sensitive information. But now we would never dream of doing that, right? In fact, browsers have figured out how to put a big red X in the browser saying unsafe, go away, turn around now, some not even allowing you to proceed, even if you know what you’re doing.

So we think content credentials is on the same path. But it won’t get into browsers and operating systems immediately. So we will be releasing a browser plugin for Chrome. So if you want to know that provenance data exists in the media you’re looking at in your browser, it will light up and do interesting things.

But that, you know, to your point, eventually that certainly does belong in the browsers themselves. So if you look at the set of partners like Microsoft and others, you’ll see that, you know, there are browser makers on that list. We’re in conversation with them. There will be news coming up and, you know, I can’t give you a timeframe, but certainly it’s our objective to have this adopted by browsers, even mobile operating systems, from the camera to the fundamental way that they render webpages and application interfaces.

So it’s a, it’s a marathon, so to speak. We’re well on our way there, but part of the urgency I mentioned earlier, to get more partners and more activity, is to make sure that there are the right motivations to do it. Which means we need people to ask for it. We need creators and photographers and journalists to say this matters, for their employers, for media companies to say we wanna see this, and most critically, that we’re all unified in the idea that we wanna push that transparency all the way to consumers.

So that each of us, rather than governments or platforms or others making trust decisions for us, where it’s appropriate, can make up our own mind about what’s trustworthy. And that requires ubiquity. And we achieve ubiquity by having many, many more important tech companies, browser manufacturers, and makers of operating systems adopt this.

Helen Todd: And how are you thinking about, you know, it’s very clear on new content and adding credentials, but you know, there’s so much content that already exists online. Is there a way to add content credentials en masse to libraries of images that people already own and that maybe are already online? Just curious, that question came to mind as we were talking.

Andy Parsons: Yeah, definitely. So we have conversations with folks like the Library of Congress who, you know, understand that it’s not super impactful to say, starting from this moment in 2023, everything will have content credentials because, you know, folks like Getty Images and the Library of Congress, archival companies, and government agencies have historically critical, impactful, significant images and videos that can benefit from authenticity.

So I’ll give you a very tangible example on Adobe Stock. When you go to Adobe Stock now, any asset you download will have content credentials in it. Now, we didn’t like wipe out all of Adobe Stock and start from scratch in order to enable that.

It’s that last mile provenance I mentioned earlier. I think I gave the BBC example, which says simply, let’s sort of retrofit all of those assets, either on demand or in one fell swoop, with content credentials. We’re not gonna say, you know, we know who the photographer was necessarily. We’re simply saying, Hey, if you trust Adobe Stock and its sourcing methods, we’re gonna tell you that this was delivered by Adobe Stock and that those sourcing methods and information integrity practices were followed.

So that’s a start, right? We’re not gonna make up, we’re not gonna rewrite history. We’re gonna say, from this moment forward, these images and these videos will have provenance. And that’s, that’s how we think about historical archives. And, you know, we have good conversations going with others, some of whom I’ve mentioned that agree with that approach.

And going forward, things will come into Adobe Stock, for example, with provenance. It will be respected and carried forward, and then you’ll get a richer provenance history for images that require it. But at the end, you know, if you trust the person who’s delivering that image to you, and you know they are who they say they are, we’ve already taken a massive step forward in terms of trustworthiness of media that we just don’t have widely deployed today.

Helen Todd: And I know it’s easy to see visually on some of the examples, but how would a consumer know if there’s a content credential within an image if they’re looking at it online?

Andy Parsons: Yeah, so our vision, and some of the stuff that we’ll share in the links, is you mouse over an image or a video. You see a little content credentials icon that’s gonna be a little trademarked icon that Adobe’s owned.

That’s the kind of property of the C2PA and the standard. Anybody can use it. And it will indicate not that this is true or false, right? We’re not putting a green check mark on images saying, Hey, this picture of a tank in Times Square is real. We’re saying there’s more information here. There’s context here, and if you want to access it, understand what this is, which you have a right to do, just click on it.

And then we display a little overlay that shows you some of the critical information, like who signed this image or video. And then there’s a little learn more link, which will take you to our verify site, verify.contentauthenticity.org, which will also be open sourced. And that’s where you can see all of the details.

If there’s a genealogical history, if things were composited together, you’ll be able to see all the different things, before and after thumbnails. You know, being able to see what came into Photoshop and what came out of Photoshop. That’ll be all available to you.

That’s more of a forensics view that, you know, we think is probably less interesting to consumers, but certainly interesting to fact checkers and others who need these kinds of tools, to do fact checking at scale. And then for video, we’re working on a provenance video player. Some of the partners in the, in the ecosystem are also building their own, and it’ll show you things like imagine, you know, sometimes I use the analogy of Amazon X-Ray for, for everything.

If you’re familiar with Amazon X-Ray, you know, you can pause a movie or a show you’re watching and it will give you context about what’s on screen, who the actors are, maybe even contextual news about what you’re seeing. And we think that should be everywhere and should be available to everybody.

That shouldn’t be locked in a single ecosystem or player. So these provenance video players basically do that. It’s like, huh, you know, is this an unedited video of Joe Biden or Nancy Pelosi? Let me, lemme find out. What is this, actually? There’s a very interesting controversy around a film made about Anthony Bourdain a couple of years ago where his voice was deepfaked, very convincingly, to read a letter that he actually couldn’t have read, because it would’ve had to happen after his death.

You know, it was very controversial because the creators didn’t disclose that that was fake. The controversy was about whether they needed to, because in the pursuit of creative agency, maybe you don’t have to, but it’s a documentary. It’s imagining that he said those things, but he didn’t actually say them.

So, again, without making judgment about what’s the right thing to do, simply giving people transparency to know that that particular segment has a synthetic voice in it, I think is, is really powerful.

So, and the only way it’s gonna be powerful and reach full potential is if it’s very obvious that that icon exists. It shows up when it needs to, and people recognize it in the same way that you recognize the lock icon in your browser to mean something important.

Helen Todd: Yeah. Thank you for explaining that. I wish we could just fast forward to where everyone already has this adopted, especially going into

Andy Parsons: Me too

Helen Todd: 2024. Yeah, but I think this also is kind of a nice way to segue into media literacy.

I know that that’s something that you care very much about and wanted to expand on on the show today. So how do you or Adobe think about media literacy, especially at this moment in time?

Andy Parsons: Yeah, so just a couple days ago we released our first kind of chapter of the way we think media literacy should be addressed in schools.

We have three curricula that we’ve developed in concert with some external partners from NYU and folks who really understand the academic ecosystem and, you know, efficient methods for teaching kids of all ages. We have a middle school curriculum, a high school curriculum, and an upper education curriculum.

And, you know, we need to rethink media literacy. And this is reified in some of the shows we’ve produced and we hope others will teach in classrooms and online. And I’ll, we’ll share a link for your listeners to take a look at this. It’s free, it’s on Adobe EdEx. You can download the curricula, the PDFs, and get a sense of this for yourself.

But the basic idea is, you know, some of the things we used to do are still important, like being critical of your media, being skeptical and interrogating your media. The methods to do that have changed significantly though, even from a few months ago, when you could say, this looks like a portrait. You know, this maybe was made by thispersondoesnotexist.com, and I can know that if I scrutinize the ears, right, because that tool was really bad at ears. It would create an earring on one side and not on the other side.

The eyes were always like perfectly aligned, and we know human faces aren’t symmetrical, but guess what? The people creating those tools, either for good or bad purposes, have figured that out and fixed those problems. You know, months ago you couldn’t create a hand in some of these tools without six or seven fingers, which was a dead giveaway. Well, again, guess what, those problems have been solved, we’re past that.

So we need to think about the things that can be created and manipulated synthetically and with these kinds of tools as perfect. So we need to just understand that if they’re not quite perfect yet, they will be perfect soon. Therefore, there’s nothing you can do with your human eyeballs or your ears. These tools are more sophisticated than our senses.

You know, there’s a terrible scam that’s been going around and widely publicized where, you know, you can take someone’s voice, you know, make them say or do things they never said or did, and ask for ransom money when they’re actually perfectly safe. This is just a terrible, evil scam perpetrated on people.

Understanding what those things are is going to be very important. And media literacy curricula have to understand that there are other ways, one of them being provenance and content credentials, to know what something is. But this is not a sales pitch for content credentials.

The curriculum that we’re developing teaches people new ways to interrogate their media and understand what something is, including other ways to get context, whether it’s using Google reverse image search or things that you might wanna do just before you share this with your hundreds or thousands of followers, or, you know, make a tweet that says, I can’t believe this happened.

Like, let’s try to be sure that this actually happened. The place to get this into schools and the time to do it is, is now. So we’d like to see it adopted by public school educators. We’re starting that process now, but the materials exist. You know, the best way to understand how we think about what should be taught is to look at those materials, look at summaries.

But it really is, as always, understanding that we have to understand what we’re looking at and experiencing and question it, and then providing some innovative ways to actually do that questioning because that’s the moving target. We’ll continue to update those materials and hope that others follow suit.

Helen Todd: And I would argue that adults probably should go through some of this too, seeing the media manipulation in our country over the past couple years as well.

Andy Parsons: Yeah, and this is evidence of like the critical investment we all have to make now, you know, if we’re teaching middle school kids to eventually teach their kids how to do this, that’s an important investment. But we also have to invest in understanding it ourselves as adults and, you know, teaching our parents and others so that this is actually effective.

And, you know, rather than lamenting the kind of the imminence of the 2024 election, I’m very optimistic that some of these techniques can be effective in the hands of journalists and sort of forward-thinking adults and media consumers in the timeframe that matters, even within the next year or so.

Helen Todd: Yeah. I think it was an episode on Hard Fork, or it might have been Ezra Klein as a guest who said, you know, if the Access Hollywood tape would’ve come out now versus when it did in 2016, that it could have been easily said, oh, that was just AI generated and not actually real.

So it just kind of punctuates the point of the verification and the content credentials so much so that we do know what’s real and what’s not real in that regard.