The Content Authenticity Initiative Symposium 2023 Takeaways Part 1

Restoring Transparency and Trust Across Our Digital Ecosystem in the Age of Artificial Intelligence

On December 7, 2023, I was honored to join the 200 attendees at the Content Authenticity Initiative (CAI) Symposium, held at Stanford University in collaboration with Adobe, CAI, and Stanford Data Science. As a proud CAI member, I found it an eye-opening and inspiring day, spent meeting and learning from leading A.I. developers, technologists, human rights experts, and academics who discussed the implications of A.I.-driven misinformation and the need to drive collective action, including the adoption of the C2PA Content Credentials global standard.

In 2023, GenAI captured the world’s imagination, and as A.I. becomes more powerful and prevalent, there’s an urgency to bring trust to digital content. The need for digital provenance and clear, simple, verifiable “nutrition labels” to combat misinformation is more important than ever.

2024 is going to be a PIVOTAL year for democracy, with over 40 national elections taking place globally. This means that more than half the world’s population (over four BILLION people) will have the opportunity to vote. 2024 will see more people vote than any previous year, and we won’t see another election year like this for a quarter of a century. Of course, one of the most consequential elections for the planet will be the one that takes place in the United States, as its impact will reverberate globally.

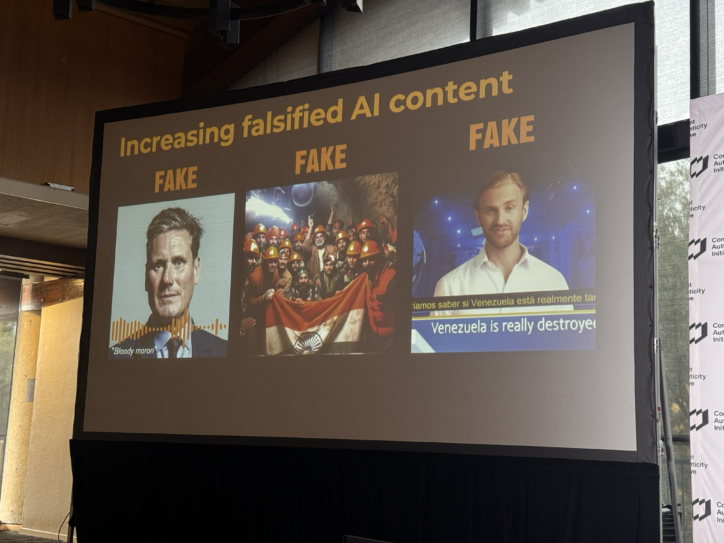

The urgency to bring trust and transparency to the content we consume online has never been more pressing, especially in an era of GenAI enabling the proliferation of mis- and disinformation at an unprecedented scale.

In today’s blog post, we’re highlighting the presentation “Authenticity and Defending Human Rights: Getting It Right” by Sam Gregory, Executive Director of the global human rights organization WITNESS.

“🔔 + Deepfakes hype is turning to reality globally as we head into election-palooza 2024

➡ Now must get expanding authenticity infrastructure right – as goes niche to mainstream with legislation + tech.” –Sam Gregory

A heartfelt thanks to Andy Parsons, Madeleine Burr, and Coleen Jose at Adobe and the CAI for the invitation to attend.

Stay tuned for more recaps, and consider joining me and over 2,000 other CAI members today: https://contentauthenticity.org/membership.

Authenticity and Defending Human Rights: Getting It Right

Sam Gregory, WITNESS

Sam Gregory is the Executive Director of WITNESS, an international nonprofit organization that helps people use video and technology to protect and defend human rights.

Sam is an award-winning human rights advocate, technologist, and innovative leader with over 25 years of experience at the intersections of video, technology, civic participation, and media practices. As Executive Director, Sam leads the organization’s five-year strategic plan to “Fortify the Truth” and champions WITNESS’s global team of activists and partners who support millions of people using video and technology globally to defend and protect human rights.

Sam took the stage at the CAI Symposium to share more about the vital work that WITNESS does, the current landscape we find ourselves in, and what needs to be done when it comes to media authenticity to protect people’s human rights in the age of artificial intelligence.

“💭 So…Human rights defenders have always grappled with challenges around trust in their evidence (and threats to them that require balancing visibility and protection). They’ve grappled with harmful shallowfakes for years.” –Sam Gregory

1. Human Rights Context and Media Authenticity: Sam began by contextualizing the importance of authenticity in human rights work. He described the challenges faced by human rights defenders and journalists, particularly in verifying the authenticity of crucial documentation amidst widespread misinformation and risks to personal safety.

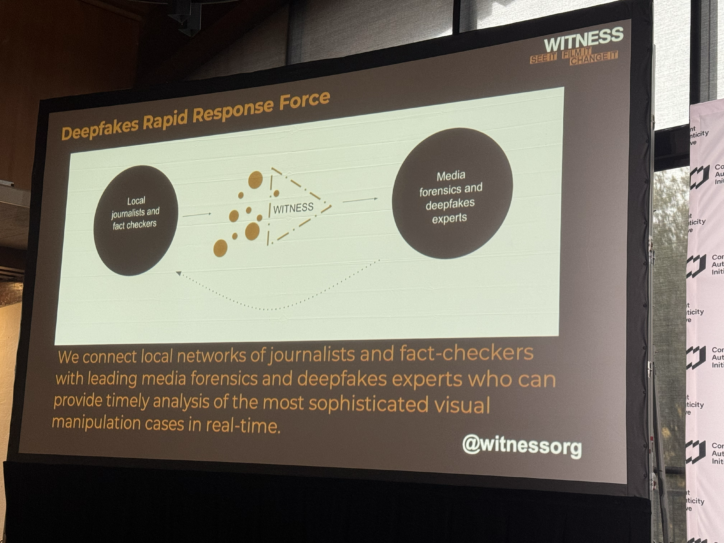

2. WITNESS’s Role in Media Authenticity: WITNESS, an organization that supports human rights activists using video technology, has been deeply involved in the space of media authenticity. It has been proactive in addressing the challenges posed by deepfakes and generative A.I. to ensure the credibility of critical societal documentation.

3. Three Areas of Focus: Sam outlined three areas of focus: understanding how human rights work intersects with authenticity, observing the impact of generative A.I. on authenticity, and exploring future strategies to enhance authenticity in human rights contexts.

“🔔 There IS interest in provenance, watermarking etc among frontline HRDs/journalists.

🔔 But caveated! As a signal, not the signal, to fortify their work but only with nuanced design and implementation efforts to ensure global applicability, avoid centralized control and to protect human rights.

🥁And ensure approaches don’t unfairly shift the burden of proof to make it even harder for marginalized voices to be heard.” –Sam Gregory

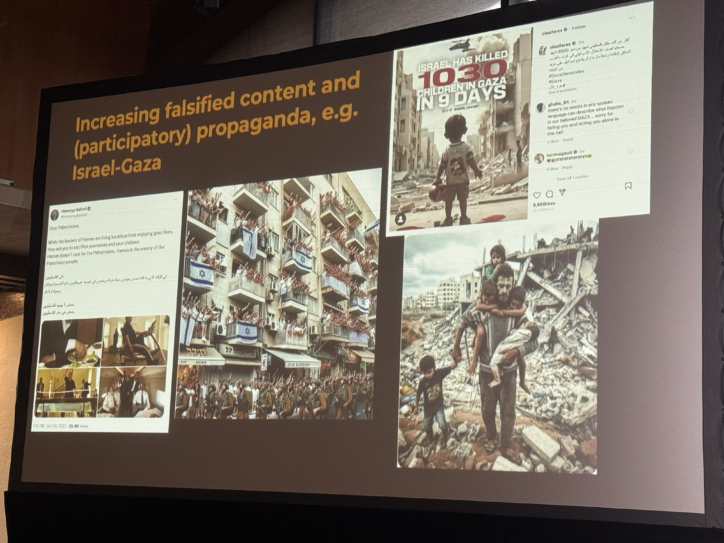

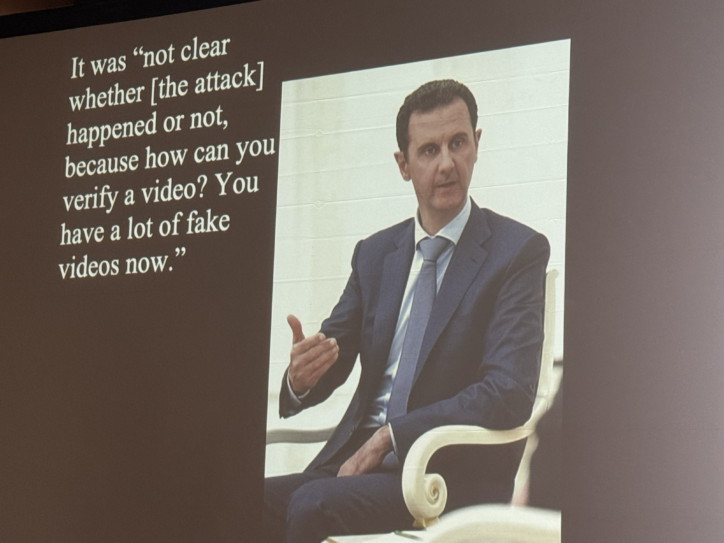

4. Challenges of Verification and Misinformation: One significant issue highlighted was the difficulty in verifying media content, especially in hostile environments. Sam discussed instances where crucial human rights footage was dismissed as fake or manipulated, illustrating the need for reliable verification tools.

“💭 This needs to be about the HOW not the WHO of AI-media making: need to insist that tech protects privacy, opt-in, and not collect by default personally-identifiable information.

➡ For content that is not AI generated, we should be wary of how a provenance approach misused for surveillance and stifling freedom of speech.

🥁Tools must be built and legislated with attention to the global environment: attempts to pass fake news laws intrusive surveillance, and likelihood that ‘deepfake’ laws will entrench democratic drift.

➡ We must not repeat mistakes of the social media era and place overwhelming responsibility to navigate harms on the user.” –Sam Gregory

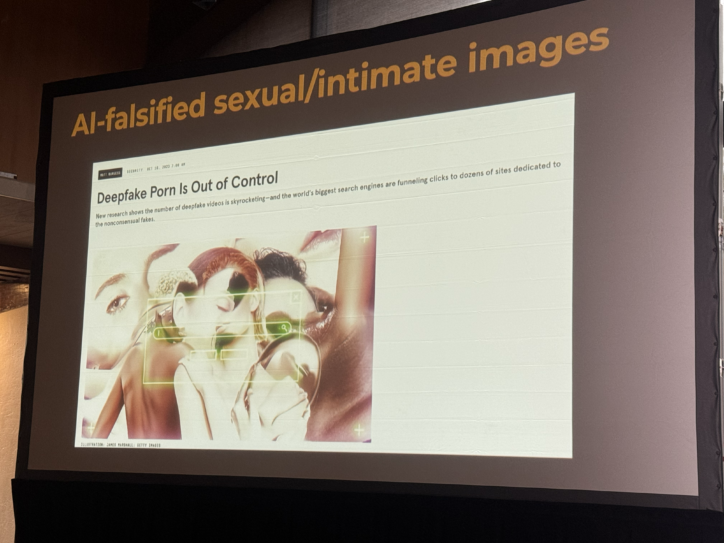

5. Evolving Threats from Generative A.I.: Sam noted the growing concern among journalists and human rights activists about the increasing sophistication of A.I.-generated false content. This evolution calls for more effective solutions to authenticate media and safeguard against misinformation.

“🔔 Tools and options for authenticity infrastructure need to be available broadly, with diverse ecosystem including open-source, options for high-risk environments and diverse global settings and constraints, and outside big tech.” –Sam Gregory

6. Gap in Access and Skills for Detection: A notable point was the disparity in access to media detection tools across different regions. Sam emphasized the need for providing training and resources globally to enable effective media authentication, particularly in less-resourced newsrooms.

“🥁 3 grounding principles: Center most vulnerable and those on frontlines of reliable information; insist on firm accountability and responsibility across the broader AI pipeline; and start with human rights as the basis for legislation, policy and tech implementation” –Sam Gregory

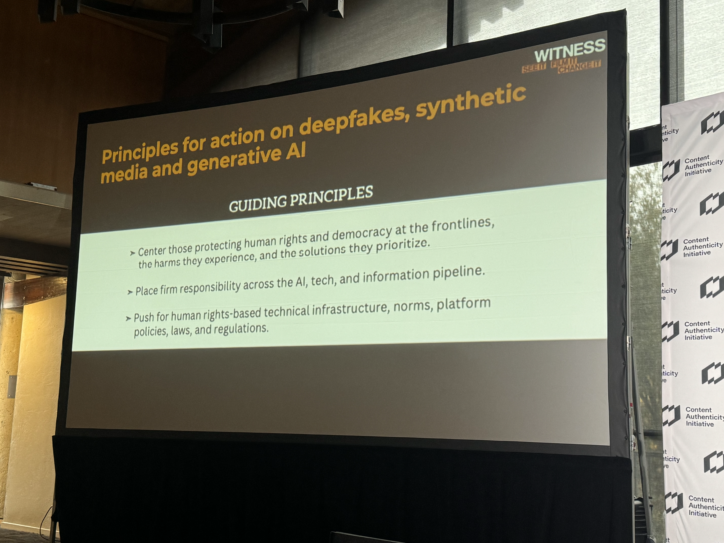

7. Principles for Defensible Technology: Sam presented three principles for developing defensible technology: centering those protecting human rights, placing responsibility across the tech and A.I. pipeline, and pushing for human rights-based infrastructure and regulations.

“➡ Identity can’t be core of authenticity and trust (see lessons learned on citizen journalism and vulnerable HRDs, as well as attempts to suppress satire and political dissent)” –Sam Gregory

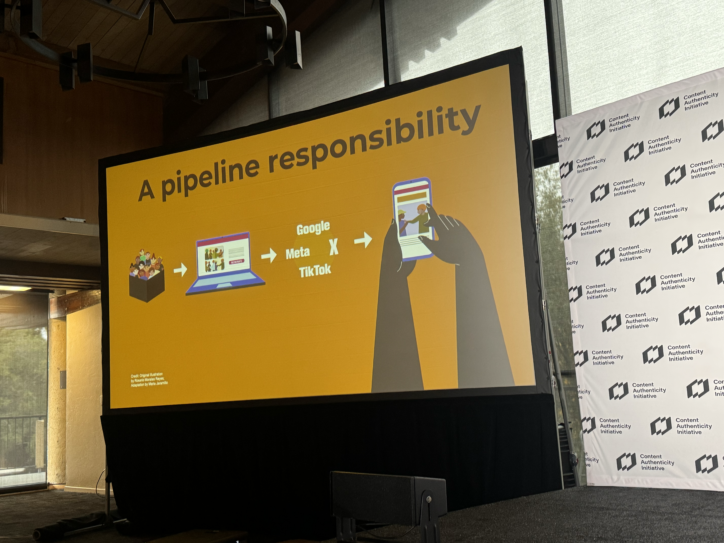

“🥁Must be a pipeline of participation and responsibility…from AI foundation models and developers of open source projects to deployment in apps, systems and APIs to platforms to distribution.

🏹 And we need to always repeat question – ‘on whom this does this redistribute the burden of proof and trust?’” –Sam Gregory

8. Importance of Nuanced Design in Authenticity Tools: The discussion highlighted the need for authenticity tools to be designed with global applicability, avoiding centralized control, and protecting human rights while not unfairly shifting the burden of proof.

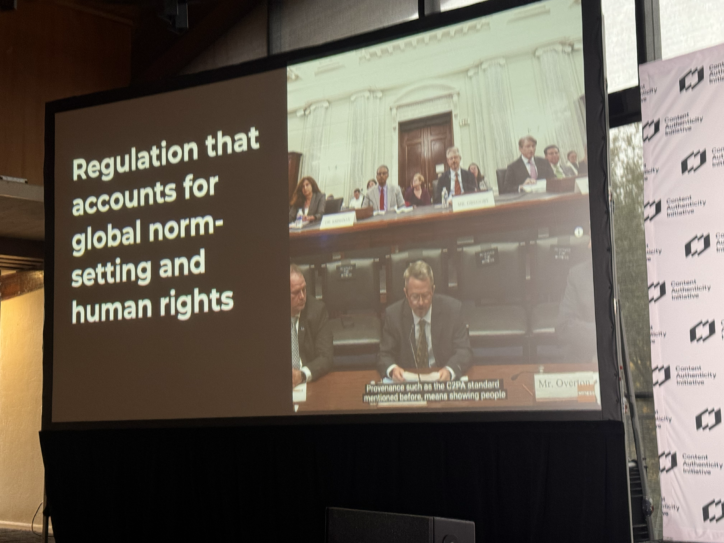

9. Regulation and Global Impact: Sam stressed the global impact of regulations and norms set in the US and EU, cautioning against potential abuses in less democratic regions. He called for regulations that consider the broader implications for human rights and democracy.

“As I half-joked at the end, authenticity and provenance infrastructure is not a silver bullet, more like a rusty musket. But even with that, it will have power, and we must get it right….” –Sam Gregory

10. Future of Authenticity Infrastructure: Looking ahead, Sam called for a diverse ecosystem of tools for authenticity infrastructure, including open-source options and those fitting diverse global settings. This approach is vital to decentralize trust and support frontline human rights voices.

Sam’s insights shed light on the intricate relationship between media authenticity, human rights, and safety. His call to action emphasizes the necessity of nuanced, globally applicable tools and regulations that prioritize human rights while addressing the evolving challenges posed by A.I.-generated media. As the landscape of media authenticity evolves, the principles outlined by Sam serve as essential guidelines for ensuring that the truth is both protected and respected in the digital age.

Here’s a very recent TED Talk that Sam did on this subject too:

Check out our Creativity Squared podcast interview with Andy Parsons, Senior Director of Adobe’s Content Authenticity Initiative (CAI), to learn more about the movement:

