Ep37. Gemini by Google DeepMind: Discover A.I.’s Potential for Positive Global Societal Impact with Dex Hunter-Torricke, Head of Global Communications & Marketing for Google DeepMind

If 2023 proved to be a breakout year for generative A.I. (GenAI), then 2024 may fulfill its destiny as technology’s ultimate change agent, transforming vast swaths of human systems and capabilities.

Yet realizing that immense promise first requires navigating profound risks, as A.I. rapidly evolves into more powerful forms that outpace existing oversight frameworks.

In this episode of Creativity Squared, Dex Hunter-Torricke contends that inclusive, ethical A.I. development can channel this epochal force toward uplifting lives globally.

As Head of Global Communications & Marketing for Google DeepMind, Dex helps shape public understanding of A.I. advances like Gemini, Google’s new multimodal model built to work across different types of content. Dex is passionate about driving large-scale, global societal change and believes that amazing possibilities are coming as A.I. tools transform the way billions of people live and work over the coming years. He sees the recently announced Gemini 1.5 as another exciting step towards that future.

In this far-reaching discussion with host Helen Todd, Dex shares his vision for A.I. to help solve humanity’s grand challenges, while ensuring it’s developed safely and responsibly. The pair also discuss key aspects shaping A.I. in 2024, like Google’s new multimodal model Gemini, as well as governance experiments like Meta’s Oversight Board.

As a writer and fan of science fiction, Dex also shares his views on the power of storytelling and why it’s needed now more than ever to make sense of the complexities of A.I., as well as to capture the public’s imagination about the possibilities of amplifying the best of human potential.

What does the future hold? To discover Dex’s optimism, sense of history in the making, and desire to realize A.I. for positive global impact, continue reading below.

“Things that may seem like science fiction right now are coming, and they are going to have a huge impact on the way that billions of people live their lives…So we need to take this stuff seriously.”

Dex Hunter-Torricke

Science Fiction, Society, and A.I.

Currently based in London, Dex’s impressive career includes managing communications for leaders and organizations reaching billions of people and at the heart of global transformation. He’s been the head of communications for SpaceX, head of communications and public policy for Meta’s Oversight Board, head of executive communications for Facebook, and was Google’s first executive speechwriter where he wrote for Eric Schmidt and Larry Page. Dex began his career as a speechwriter and spokesperson for the executive office of UN Secretary-General Ban Ki-moon. He’s also a New York Times-bestselling ghostwriter and is a frequent public speaker on the social impact of technology.

As the conversation begins, Dex shares the frustration he felt early in his career at the UN, trying to drive change within resource-constrained public sector organizations.

Now at Google DeepMind heading communications and marketing for Google’s consolidated A.I. division, Dex focuses on conveying Google’s A.I. development and thinking about the future potential to solve major global challenges once integrated into products reaching billions of people.

Before diving deeper into Dex’s thoughts on A.I. as a disruptor, Helen presses him on another topic: His love for science fiction. Dex discusses how readers often overlook the genre’s focus on and relevance to society, rather than simply technology. He references Kim Stanley Robinson’s Mars trilogy and its exploration of economic and ethical issues arising from humanity expanding to Mars as formative reading and a touchpoint while interviewing for communications lead at SpaceX years later.

“Good science fiction mostly isn’t about technology. It’s about society, and how communities evolve when they have access to the kinds of futures that are made possible by technology.”

Dex Hunter-Torricke

Dex suggests most sci-fi depictions of A.I. follow extreme dystopian visions, like the Terminator films. However, he highlights thoughtful examples like Kazuo Ishiguro’s Klara and the Sun as avoiding reductive assumptions by exploring nuanced facets of plausible human-A.I. coexistence.

Dex indicates enthusiasm for ongoing exchanges on governance and problem-solving global frameworks needed to responsibly steer A.I.’s benefits while mitigating risks. He shares optimism about shifting societal narratives toward a more balanced consideration of cautions and opportunities instead of only amplifying warnings in ways impeding progress on pressing priorities A.I. may substantially help address.

The Pace of Progress

Dex outlines the furious pace of A.I. development at Google DeepMind in 2023 since he joined in April, enabled by consolidated efforts from merging top internal A.I. units into Google DeepMind.

Gemini is Google’s largest foundation model yet, promising more advanced reasoning and multimodal understanding to unlock new use cases. Dex expects Gemini integration over 2024 to impact billions through enhanced Google products and developer platforms, especially with the recent announcement of Gemini 1.5 (this interview was conducted before that announcement).

He suggests seamlessly blending perceptual inputs like humans do — as we’re multimodal — holds immense promise for useful applications versus present disjointed singular-modality models. While current A.I. tends to specialize within narrow tasks, Gemini’s flexibility echoes human context switching, exponentially expanding utility.

Dex argues realizing such potential compels investing in frameworks and cooperation to keep pace with exponential change. He proposes that inclusive solutions balancing risk and opportunity can channel A.I.’s promise while retaining public faith.

Asked about his personal use of A.I. as a writing aid, Dex shares his experience testing ideas through rapid prototypes and outlines to validate choices before major time investments. He feels encouraged when initial impulses prove right.

Dex also employs A.I. to tackle “cognitive manual labor,” the mundane administrative and logistical tasks of knowledge work, freeing capacity for higher-order thinking. Wanting more familiarity with what he called “the bleeding edge of A.I. tools,” Dex reveals that his 2024 goal is to investigate A.I. applications for communications and marketing, so that he is ready to transform how he works and stay ahead of the curve.

Dex considers anticipatory tech adaptation essential as A.I. inevitably disrupts workflows. He suggests that creatives in particular formulate a “personal disruption strategy”: embracing new tools before being required to, and expanding their skills on their own schedule rather than playing forced catch-up.

“I think everything we’re doing, both you and me, Helen, I think everything is going to be transformed in a lot of ways both big and small.”

Dex Hunter-Torricke

A.I. Governance and the Need for New Institutions

Asked about oversight frameworks, Dex indicates that experiments like Meta’s Oversight Board responded to technology novelties straining existing governance. He suggests humility and ecosystem thinking help account for interwoven societal challenges exceeding isolated solutions.

Dex argues A.I.’s global reach compels coordinated action on emerging tools, hinting at more powerful pending systems. He advocates responsible development and use given probable future human-level A.I. capabilities outstripping current safeguards.

Dex suggests effective A.I. governance requires diversity and inclusion. He warns that insular tech discussions that leave out everyday leaders risk misaligned solutions, since the challenges demand broad expertise. Inclusive representation, he proposes, builds understanding and prevents shortsightedness, so that safeguards better fit the full range of applications.

“We really do need to think about diversity and inclusion when we build these institutions…in a future where A.I. is going to have this fundamental impact on society, on communities, and on businesses…most of the challenges aren’t necessarily going to be technological, they’re going to be societal challenges.”

Dex Hunter-Torricke

Questioned on influential A.I. governance proposals, Dex indicates openness to wide-ranging institutional lessons balancing localization and international scope. He favors continuing inclusive, multidimensional action advancing opportunities, not just mitigating risks. Dex suggests A.I.’s potential depends on managing threats while unlocking progress to benefit humanity.

In terms of impact, Dex expects further analysis of extensive policies like European A.I. regulations. But he found UK summits encouraging for assembling diverse actors and urging research toward solutions and opportunities alongside awareness of the downsides and threats.

Dex concludes that A.I. promises societal benefits if steered judiciously by ethical, representative cooperation.

Democracy, Elections, and Building Trust in Technology

“Everywhere I go in the world…everyone is trying to figure out what are those biggest points of disruption. What are the things that are exciting opportunities that are going to change the way we live?”

Dex Hunter-Torricke

Helen turns the conversation next to a weighty topic: the vital year for democracy ahead, and A.I.’s role in potential election turbulence.

Dex indicates that despite vital ongoing risk discussions, public curiosity overwhelmingly focuses on grasping A.I.’s impending societal impacts. He suggests mobile and social network revolutions presage A.I.’s coming generational disruption as foundational infrastructure ushers new capabilities.

Dex observes leaders across domains pressing to map disruption vectors and the opportunities afforded. He envisions A.I. elevating healthcare, resource equity, and more over time — provided we can successfully navigate the risks. Dex suggests potential future milestones like mass space habitation hinge partially on model progress.

He expresses, with humility, that while many rewards may await future generations, those taking action today must lay ethical foundations and forge wise governance to best capture positives while limiting harms ahead.

Dex enjoys speculating about coming shifts but suggests urgency in addressing challenges so that future innovations optimally uplift lives. He concludes that dismissing possibilities and risks because they seem fantastical means forfeiting future potential at a moment when science is poised for tremendous change.

Storytelling to Make Sense of Complexity

Dex underlines that A.I. can allow for audacious advances. For example, he references Google DeepMind’s AlphaFold, a breakthrough A.I. system that can rapidly predict the 3D structures of proteins with high accuracy, epitomizing A.I.’s potential to dramatically accelerate scientific breakthroughs. Dex expresses awe at the pace at which historical changes are becoming palpable.

Asked about storytelling’s role, Dex suggests impactful tales resonate emotionally while contextualizing complexity. He observes A.I. debates bog down lay audiences lacking specialized preparation. Dex proposes strategic narratives that decode dense technicalities can constitute critical public on-ramps, enabling inclusive discourse guiding development.

Dex argues storytelling proves pivotal in preparing societies, especially given A.I.’s deep disruption. He suggests potent tales drive revolutions by crystallizing imagination and belief. Dex feels communicating A.I.’s societal implications requires fully new paradigms keeping pace with lightning transformation.

Helen then asks Dex about career highlights. In response, Dex names leading communications for Gemini’s launch as humbling and urgent. He describes seeing formidable technology marshaled toward solving humanity’s challenges as deeply motivating, given his lifelong focus. Dex indicates that despite playing what he describes as a small part, telling stories to accelerate solutions feels meaningful.

In closing, Dex proposes either seriously grappling with the implications of science fiction, or ignoring them at society’s collective peril.

Dex concludes that steering A.I.’s beneficial application requires informed stewardship which appreciates the attendant risks alongside the vast potential of the technology.

“Science fiction is very often science fact before its time.”

Dex Hunter-Torricke

Links Mentioned in this Podcast

- Google DeepMind

- Gemini

- Meta’s Oversight Board

- Kim Stanley Robinson’s Mars trilogy

- Klara and the Sun

- Google DeepMind’s AlphaFold

Continue the Conversation

Thank you, Dex, for being our guest on Creativity Squared.

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency who understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 150 arts organizations, projects, and independent artists.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

Dex Barton: Things that may seem science fiction right now are coming, and they are going to have a huge impact on the way that billions of people live their lives, and that is just incontrovertible fact from my point of view. So we need to take this stuff seriously, we need to understand the consequences, because there will be things that are very, very good, and there will be things that are very, very challenging, and we need to understand both if we want to make the most of it.

Helen Todd: Passionate about driving large scale global societal change, Dex Hunter-Torricke is the Head of Global Communications and Marketing for Google DeepMind, one of the world’s leading AI companies. Currently based in London, Dex’s impressive career includes managing communications for leaders and organizations reaching billions of people and at the heart of global transformation.

He’s been the Head of Communications for SpaceX, Head of Communications and Public Policy for Meta’s Oversight Board, Head of Executive Communications for Facebook, and was Google’s first executive speech writer where he wrote for Eric Schmidt and Larry Page.

Dex began his career as a speech writer and spokesperson for the executive office of UN Secretary-General Ban Ki-Moon.

He’s also a New York Times bestselling ghostwriter and is a frequent public speaker on the social impact of technology. I met Dex back in 2014 at a Facebook Developers Conference in San Francisco, and we’ve been friends ever since. I’m in constant awe at his amazing career trajectory, work, and passion.

Today, you’ll discover Dex’s vision for AI to help solve humanity’s grand challenges while ensuring it’s developed safely and responsibly. We also discuss key aspects shaping AI in 2024, like Google’s new multimodal model, Gemini, as well as governance experiments, like Meta’s oversight board. Dex also shares the importance of keeping humans at the center of critical societal decisions, especially as AI plays an increasingly assistive role in all facets of our lives.

As a writer and fan of science fiction, Dex also shares the power of storytelling and why it’s needed now more than ever to make sense of the complexities of AI and also to capture the public’s imagination about the possibilities of amplifying the best of human potential. What does the future hold?

Join the conversation where Dex shares his optimism, sense of history in the making, and desire to realize AI for positive global impact. Enjoy.

Theme: But have you ever thought, what if this is all just a dream?

Helen Todd: Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox, on YouTube, and on your preferred podcast platform. Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space.

The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Theme: Just a dream, dream, AI, AI, AI

Helen Todd: Dex, welcome to Creativity Squared.

Dex Barton: Great to be here.

Helen Todd: So I’m so excited to have you on the show. Dex and I met back in 2014 at the Facebook Developers Conference out in San Francisco when Dex was the head of executive communications for Facebook. And, you know, Dex, you’ve worked with a lot of slacker companies over the years, you know, a few small companies that people have heard of, like the United Nations, SpaceX, Meta’s Oversight Board, and now your current position as the head of global communications and marketing for Google DeepMind. So I’m so excited to do a deep dive into all things AI with you this year. So thanks again for being on the show.

Dex Barton: Great to be here.

Helen Todd: So let’s dive into your back story. So what is your origin story? And what do you do in your role for Google DeepMind?

Dex Barton: Yeah, great question. So I started my career at the UN as you mentioned, and I figured I would probably spend most of my career actually in the public sector. And, of course, there was no faster cure for that ambition than being at the UN and realizing just how frustrated a lot of the people who wanted to drive change were, you know, working for, you know, organizations that had, you know, tremendously good missions, but did not have the resources and certainly I think didn’t have the kind of expertise to deal with some of the new technologies that were arriving in the world and creating enormous societal change.

And so at the end of 2010, I decided to go over to work for Google. This is my second time actually working for part of the Google family. And I went to go and be speechwriter for Eric Schmidt, who at the time was the CEO of Google.

And really, you know, that has been, you know, a transition that, you know, I’ve always thought was you know, the sort of next natural step in my career, really. It was very much a continuation of the things that I cared about, you know, growing up, which was how do we drive large scale, global societal change, you know, how do we go about solving some of the enormous global challenges that, you know, people face?

And you know, very much, you know, all the different places I’ve worked in my career have been part of this journey, you know, trying to explore how different leaders and organizations are able to build tools and technologies that I think can help solve some of those critical problems.

And my most recent role, you know, I joined DeepMind in April 2023. And I lead communications and marketing for the organization. And Google DeepMind, of course, is a new combined unit at Google for AI. It was formed actually in April, just when I joined with the merger of a company which was DeepMind as well as an entity on the Google side called Google Brain.

And these two AI labs came together to form this one world class AI unit. And really what my role is focused on is telling the story of how Google is developing AI and how we’re thinking about the future with AI.

So really, I mean, working on some of the cutting edge technologies which we expect over the next few years will reach billions of people and hopefully lead to solving some more of those big global challenges.

Helen Todd: I love your career trajectory and you’ve sat in so many interesting positions. And I love that you really do care about making such global impact. So I’m so excited to talk about AI cause it’s definitely a massive disruptor, similar to all these other companies you’ve worked at.

But before we dive into that, I know you’re a big sci-fi fan, and I’m curious, what’s some of your favorite sci-fi that’s influenced where you’re currently at, or maybe influence how you’re thinking about AI right now just out of curiosity?

Dex Barton: Yeah, I mean, actually probably. A lot of the science fiction that, you know, I consumed earlier in my career was all very space-related. So, it’s kind of funny, you know, definitely, I think a lot of it is still very applicable, actually, to what we’re going through with AI.

You know, the thing about science fiction, which I think people often don’t understand about the genre, or, you know, malign it for because they don’t understand it is that good science fiction mostly isn’t about technology. It’s about society and how, you know, communities evolve when they have access to the kinds of futures that are made possible by technology.

So, you know, for me growing up, you know, it was Kim Stanley Robinson’s Mars trilogy that tended to be like incredibly formative, you know, when I was a teenager. It was the first time I had read really good science fiction, which was, you know, something that was trying to be realistic and was depicting the evolution of human society with a chain of events that was unleashed by people going to Mars in the early 21st century.

And, you know, most of those stories are not about, you know, the technology, they’re about governance, they’re about the economy, they’re about, you know, ethics. You know, and actually, when I went to interview with Elon Musk to be head of communications at SpaceX, we ended up talking for an hour all about the first book in that trilogy, Red Mars, you know, which is very much about what does governance look like in a future where humanity is facing these enormous global challenges.

And of course, global challenges on two worlds in those stories. And so when it comes to AI, you know, absolutely, you know, there are, there are lots of, you know, amazing portrayals of that. You know, I think, naturally, because, you know, fiction and science fiction are still trying to be exciting and they’re trying to appeal to a mass market, they tend to veer towards the extreme predictions of what that future looks like.

So, you know, of course, I think a lot of people when they think about science fiction depictions of AI, they’re probably going to think about things like the Terminator. You know, I think there’s other more thoughtful depictions though. I mean, actually, Kazuo Ishiguro’s Klara and the Sun, you know, was a story which I thought was very, very beautiful.

And that’s very much about what does, you know, one facet of human and machine existence look like in a future where AI is playing a big part in people’s everyday lives. So, yeah, I’m still reading a lot of science fiction amongst lots of other things.

Helen Todd: We’re definitely going to dive into governance in a little bit in the conversation and to the Terminator dystopian narrative, which dominates, I think, too much of the AI conversations, to be honest. Our last episode for Creativity Squared in 2023 was a Terminator 2 remake done as a parody where AI saves the day.

So it’s hopefully we’ll see more positive AI stories in 2024 as well too.

Dex Barton: Oh, actually, Terminator 2, the AI did save the day, right? It was Arnie who was playing a Terminator who was reprogrammed.

Helen Todd: That, that is true. That is true. So if you haven’t seen that episode, you’ll definitely have to check it out in the film.

Okay, so you’re at Google as Head of Communications for DeepMind. There’s a lot of AI projects at Google and Google you know, it’s been in the AI space for a long time for search. But can you give us a quick rundown of some of the, at a high level of like all the different Google AI initiatives?

Cause I know that there’s a lot that comes out. It seems like almost at a weekly basis, there’s a big new announcement being made.

Dex Barton: Yeah. So 2023 has been a year of incredible pace for AI at Google. I joined Google DeepMind in April and, within two weeks we’d announced Google DeepMind, which was this new AI unit, which brought together those two teams you mentioned.

So DeepMind, you know, based in London, as well as a team, Google Brain, which was part of Google Research. And Google basically had these two world class AI teams, and bringing them together, you know, to build this new unit, Google DeepMind, so that we could bring together world class talent, compute resources for the next phase of Google’s AI journey, that was really the goal there.

And, you know, we’ve seen incredible momentum over the year because of that. Just in the last couple of weeks, we announced Gemini, which is Google’s largest and most capable AI model, our most general model. And this is something we’re really, really excited about, because Gemini is something that, you know, it’s been built as a multimodal foundation model from the ground up.

And that promises to give us all sorts of incredible new capabilities, you know, as well as, you know, very sophisticated reasoning, you know, world class coding capabilities that then allows us to go and create applications that are much more useful for people. So we’re really excited to talk a lot more about Gemini over the next year.

You know, billions of people are going to be using Gemini and experiencing the capabilities through Google products. Developers can start building with it as of just in the last few days when we released the Gemini Pro API. So we’re really excited by that. There’s so many other things we’re working on though.

So, you know, there was a week just a couple of months ago where we announced both GraphCast, the most advanced weather forecasting model in the world, at the same time as we announced Lyria, which is the most sophisticated music generation model. And so, there’s lots of things we’re really excited about and, you know, actually, this is what makes communications for DeepMind so exciting.

We’re just working on so many different things that are, you know, starting to reach people in the world.

Helen Todd: I’m sure you have a very full plate as head of communications at DeepMind. But as a speech writer and ghost writer and writer yourself on the intersection of creativity and AI, how, how do you personally use the tools when you’re writing or do at all?

I’m curious from a personal angle, how you, if they augment your writing or how you think about it, as you write as well?

Dex Barton: Well, you know what I use Bard and other AI tools at Google for is really, it’s actually testing my bad ideas really quickly. So like, you know, I think one of the things, you know, when you’re a writer or, you know, even if you’re not a writer, you’re just working on projects, you know, as a knowledge worker.

There are so many ideas you can have and different ways of doing a thing, right? Like, there’s never just one right way to do something. And there’s a cost involved. And the biggest cost, of course, is time just to explore what a bunch of those paths look like. So actually, what I start, what I’m starting to use AI for now is very quickly giving me, you know, an outline or helping me build a thought upon a thought upon a thought to see what might that path look like if I choose to go down it just a little bit further.

And it’s, it’s been something which, you know, in most cases, it doesn’t actually change the way I would write something like actually, you know, exploring those alternate paths confirms actually my initial impulse, you know, to write in a particular way. But actually like just being able to test this a little bit more gives me more confidence in the final output that I’m delivering that actually, Hey, the choices I’ve made here are the right ones.

So I think actually, you know, that kind of rapid prototyping of ideas is a thing which I think is really, really interesting. There are other things, you know, where, where I’ve started to use AI to tackle what one person described to me, you know, a few months ago, which I loved the phrase, cognitive manual labor.

There’s a sort of bunch of administrative or logistical things that you just have to deal with as you’re managing a project or you’re, you know, synthesizing information together in order to reach new insights about new content. A lot of that stuff isn’t very interesting. It’s not very exciting. It’s not very high value, but you just have to do it as a knowledge worker and actually being able to use AI to just speed it up a little bit.

That ends up unlocking more time for the higher value, you know, the higher order thinking. So, that’s, it’s a piece I’m really excited about actually next year. I’m actually setting myself a personal goal to spend a lot more time investigating the sort of bleeding edge of AI tools and figuring out how they intersect with communications and marketing.

Because of course, I think everything we’re doing, you know, both you and me, Helen, I think everything is going to be transformed in a lot of ways, you know, both big and small over the next few years. And I think, it’s really contingent upon all of us to have a sort of personal disruption strategy as I call it, you know, to be ready to transform the way we work before it is, you know, required for us to transform.

Helen Todd: I love that. Well, we’ll have to check back in, six months or so next year, how the challenge is going. And I keep on saying we’re recording this before the holiday break of 2023, that I’m going to all the time I haven’t had to play with all the AI tools and stuff, I’ll do it over the break. So, I might adopt your challenge too. I think that sounds great.

And it seems like multimodal is kind of like one of the buzzwords going into 2024. And when Gemini was released, recently, I think it was December 6th, there was some really compelling video of, I don’t know if it was machine vision that captured it, but of someone drawing and then the computer reading out like what they’re drawing almost like a, what was it? Pictionary with a computer more or less.

So is, is that kind of like a key trend that you see going into 2024 too, is multimodality? And if so, you kind of shook your head for our listeners who can’t see, what are some of the other implications of a multimodal future with AI?

Dex Barton: Well, the way I think about this, right, is humans are multimodal. We’re completely multimodal creatures. We’re processing, you know, vast number of inputs at any given time. You know, we’ve got multiple sensors. You know, the way that the first sort of generation of you know, these, these mass AI tools showed up to people, you know, the sort of first wave of conversational chatbots and things, they were very much focused on a single modality, or where they appeared to have some sort of multimodal capabilities, right, you know, you can use a chatbot, which also generates images, it tended to actually be, you know, basically, you know, cobbling together of different models.

It actually wasn’t, you know, a richer synthesis of capabilities where the models actually were able to do things that were more advanced, which combined the learnings and the understanding across many different types of modality. And with, you know, with Gemini, for example, which is multimodal from the ground up, we are, you know, heading towards a future where that kind of capability will be something that very much is something that people want to make use of in their everyday lives.

And I think what it promises, you know, at the high level is we get towards tools that are genuinely more useful and are much more sophisticated because they can blend together seamlessly the understanding and the operations across all those different types of content, right? You know, so, you know, one of the demos that we gave an example of when we, we announced Gemini was researchers using Gemini in order to sift through scientific papers to find insights.

And, you know, if you’ve looked at, you know, scientific journal entries, right, like you can have vast amounts of valuable data that are hidden in, you know, tables of contents and spreadsheets and so on. And it’s a real devil to actually extract, you know, that stuff from those papers using the existing generation of tools, you know, you can actually get text out quite easily, but then sifting through the numbers and, you know, then actually being able to, you know, figure out how to interpret all of that data together, much more challenging.

And so we have with Gemini the capability to go and do that. That promises, just in that one area, right, with researchers, you know, to speed up the way that they’re, you know, pursuing [00:19:00] new breakthroughs and new discoveries.

And I think it will be much the same right in the ways we work. There are all the different types of content we’re using on a daily basis. You know, you may be watching a YouTube video, you may be reading a whole bunch of blogs, you may be listening to a podcast and actually being able to connect the dots across all of that is going to end up tremendously valuable.

Helen Todd: Yeah, it does seem like all of the connecting the dots, well, I guess just AI in general is kind of like trying to replicate our minds and how we work and operate. And it’s getting closer and closer to that. So, and even I think one of the wildest things that came out of this year that I’ve seen is, some of the research about taking what’s happening in our brains and then using AI to decode and translate it into images and moving images.

So on the sci-fi front of literally taking what we’re thinking and turning it into digital content where like that technology already exists, which is pretty wild. But the more that you can read my brain, the better. So I welcome all of the, the AI tools on that front to, to get it out.

But one thing I definitely wanted to talk to you about on the show is kind of where we sit on the regulatory and institutional front, because you sit in such an interesting seat, having worked at Meta for the oversight board and now at Google DeepMind.

And I’ve said on the show before that, you know, I think the oversight board was actually very imaginative and forward thinking of Facebook/Meta to do. You know, we live in a world of borderless technology. We’re all connected digitally and we don’t really have adequate institutions to make everyone equal digital citizens.

You know, we have different levels of digital citizenship depending on where we live and whatnot. The problem with the oversight board is that it’s derivative of Facebook and it’s kind of like a child telling a parent, like, this is how you’re going to parent me. But I do think it was very, I don’t know, ahead of its time in thinking that way.

Yeah. You spoke at the AI safety summit and are thinking about these things. So I’m really curious from the seat that you sit kind of where we’re at and what’s kind of needed for AI and the global impact that it has.

Dex Barton: Yeah, absolutely. I mean, I think the oversight board, right, was a really interesting and important experiment because it reflected the fact that we are facing novel challenges from technology and existing mechanisms for governance clearly were insufficient.

And, you know, I think, you know, everyone who’s involved in the oversight board project, you know, certainly myself, you know, came at this, you know, with a great deal of humility, which was this is a small part, a very small part of a much larger ecosystem of solutions that’s needed. Nobody was saying that the oversight board is going to solve all of the problems, you know, people were experiencing with, you know, [00:22:00] Facebook and Instagram.

But it was certainly an interesting route to go and explore and to see if there were particular things, you know, such as, you know, building in a much more principled human rights driven approach towards content moderation, having a much greater focus on digging into challenges in the Global South, you know, facing users, you know, in places that have historically been underrepresented, you know, amongst Western technology companies and how they think about platform moderation.

That was really, you know, the sort of impetus that drove all the work we were doing there. You know, and I, I think the commonality, right, with AI is we’re coming again at this very novel set of applications and tools, recognizing that again, we’re probably going to need new types of governance and evolved types of governance. And, you know, Bletchley Park, as well as, you know, all the other sorts of recent, you know, momentum we’ve seen, you know, in looking at new solutions, you know, whether it’s on the US side with the White House declaration or, you know, with the EU act, I think it’s very much driven again by seeing how do we build up this architecture designed to solve problems, you know, in areas that we don’t currently necessarily have the right, you know, frameworks, you know, to fully account for the new kinds of challenges?

And, you know, one of the things, you know, we think about a lot at Google DeepMind, right, is what are the new mechanisms you’ll need in a world where AGI might exist, you know, which, you know, might be a decade away or more than a decade.

But still is something that we have pretty strong confidence is on the horizon. You know, we have foresight of much more advanced systems that are coming into the world. You know, Gemini as the most capable, you know, model from Google is just a sort of proof point on the road towards that, that future.

Absolutely, you know, in a world where you have, you know, human like intelligence, human level intelligence, you know, from AI, do we have the right current set of solutions? You know, probably not. We probably need to invest much more in thinking about how companies develop these tools responsibly, as well as how these things are used in the outside world.

And, you know, what we’ve seen over the last year with the summit and other things is a lot of different actors, including governments, starting to come together around the table and to agree on what is a shared research agenda, what are the things that we need to absolutely, you know, have a point of view on before we then develop those solutions.

And, you know, I think the progress has been really, really encouraging over the last year, and I think we’ll see more of it next year.

Helen Todd: Yeah. Thank you for sharing that. And, and I, it’s so easy. People love to hate Facebook in all its capacities. And I do think, because I followed what was happening at the Meta oversight board pretty closely, that it was very impressive who they brought to the table, especially on the human rights front. So I don’t want to discount that, but I think how you framed it as an experiment is a good way to, to look at it.

In having worked at the oversight board and knowing that we don’t have, I guess, the adequate institutions now, what is your biggest like takeaway that we need for a future institution or similar entity?

Dex Barton: I think it’s probably that we really do need to think about diversity and inclusion when we build these institutions. You know, it’s easy for people to, you know, sloganeer. You know, and to talk about how they’re building inclusive entities, but actually building bodies that really do bring together lots of different points of expertise, including points of view that you don’t agree with necessarily, and represent just very different perspectives, you know, outside of just a, you know, hardcore technology, you know, point of view.

I think that’s really, really important. You know, in a future where, you know, AI is going to have this fundamental impact on society, on communities, on businesses, like, actually, most of the challenges aren’t necessarily going to be technological. They’re going to be things that, you know, obviously have a technological root, but they’re societal challenges.

And that means, you know, the points of view you need are going to be much, much broader. And when you have very introverted conversations, you know, within the tech industry or within the ecosystem, and they aren’t things that engage, for example, you know, actors from the Global South or business leaders or, you know, civil society, you know, leaders or, you know, experts from fields outside of, you know, the sort of usual suspects within STEM, I think that’s when you’re doing yourself a huge disservice, right?

Because actually, you’re probably not going to build tools that fully account for the way that the world is going to be using them. And particularly when you’re dealing with a technology that is this powerful, you know, where the potential for misuse of this technology is certainly one of the greatest risks, this is something we absolutely have to think, you know, in a much more long term perspective, we need to get those points of view in order to develop that technology so it is safe and responsible.

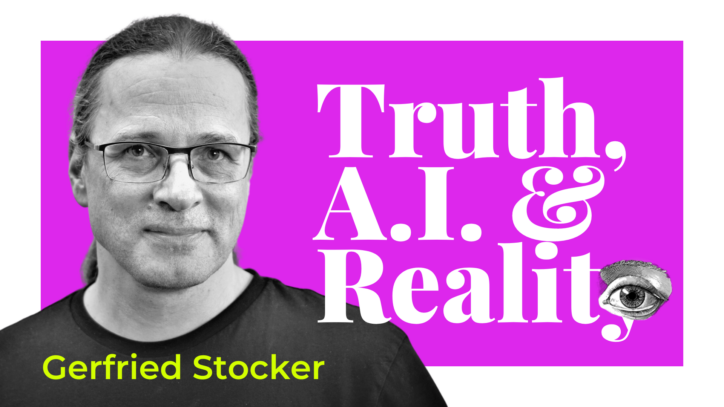

Helen Todd: I couldn’t agree more. And the voice of a previous guest is kind of popping up in my ear, Gerfried Stocker, who’s the head of programming for Ars Electronica.

We were kind of talking about a similar thing. And he also mentioned that it’s, it’s also a very Western thing to say, we need to come up with the answer, and then think that we have all the answers, that we really do need to be inclusive of all ideologies around the world too, and even wanting the answers can be very Westernized, to a certain extent, as well.

And one thing that was kind of interesting when I was preparing for the interview, there was an article in the Guardian, it was an interview with Demis Hassabis, and he actually proposed in the article very specific examples of bodies that a new entity could be based off of, including the Intergovernmental Panel on Climate Change, and really looking into, like, the UN as maybe a housing for some of these watchdogs. Is that something that seems like in the right direction to you, from the seat that you sit in?

Dex Barton: Yeah. And so what Demis was talking about there wasn’t necessarily that any of these existing institutions should be the right model, you know, or a sort of analog for what we need, but that there could be interesting lessons from, again, a wide range of these entities.

So, you know, for many, many years, there’ve been lots of people who’ve been talking about things like building a, you know, IPCC for AI, which is a real mouthful to say, you know, a COP for AI, you know, a CERN for AI, you know, which has been popular amongst a lot of engineers and researchers.

And again, none of those bodies is a direct fit for this, but there’s something in the lessons learned from building these, you know, very different frameworks for international action at large scale that potentially could be useful as we think about that governance for AI.

And I think, you know, what has really driven the way we think about these things is the fact that AI, you know, could be one of the most powerful technologies in human history. You know, it is absolutely something that we think about as having an effect across humanity. And that means we do need to think about what does a global framework for action look like.

You know, we’re in an age where technology diffuses so quickly, you know, through, you know, the World Wide Web, you know, obviously, there are multiple services out there, which have billions of users, there’s many, many Google services, which have 2 billion users.

So, as we think about that future where the capabilities of AI are getting more and more advanced, and certainly, you know, people around the world will want to make use of AI tools, we do need to think about, again, how do we really drive global action to think about these things together rather than just individual jurisdictions or individual communities racing to get ahead, but not actually understanding that, you know, ultimately we’re all in this together.

Helen Todd: Well, I know that you had the privilege to get to attend the UK AI Safety Summit. And then recently the EU passed the EU AI Act as well. And I know Europe, you know, really does lead the way when it comes to regulating AI. What were some of your biggest takeaways from the current, you know, conversations at the summit and/or the EU AI Act that just passed that, you know, probably has implications and ripple effects around the world too?

Dex Barton: Yeah, I mean with the EU AI Act, I think we’re still digesting that and what, you know, the full, you know, implications are. Obviously something that’s very, very important, you know, within the global AI ecosystem.

You know, and certainly I think, you know, at a high level, you know, the sort of direction of travel, the principles that Europe is driven by and seeking to, you know, safeguard the impacts of AI on populations. I think that’s something we very much agree with. You know, with the UK AI Safety Summit, you know, I think we were very encouraged at the fact that so many diverse actors were willing to come together around the table and commit to understanding the challenges of AI and building a shared research agenda to really, you know, unpack these, as well as I think the focus on not just talking about the challenges and what, you know, the potential risks of AI are, but really ensuring that action also drives, you know, progress on, on realizing the opportunities.

And it would be a mistake to only focus on one aspect of that, and ignoring the other, I mean, really, you know, AI is something that we, you know, believe will have a positive societal impact if we manage the risks well, you know, we wouldn’t be building this technology unless we believed in it very, very strongly, and we need to ensure that the debate doesn’t end up lopsided, and we think only about it in terms of the pitfalls, because that’s when we will lose sight of the opportunities.

That’s where we will end up just, you know, throttling this, you know, rather than actually saying we need to build it safely. We need to build it responsibly. Absolutely. That is essential. But we also have things to do with this AI that are about improving life for billions of people. So actually seeing that governments and industry and civil society and others around the table really did want to continue driving that action to see, you know, the value of AI realized, I think it’s something that we were very encouraged by.

Helen Todd: Hearing you say all that it is very encouraging to hear as well. And also again, not to concentrate just on all the dystopian futures, I’m a big believer and I’ve said on the show kind of we manifest our realities. And if we only talk about the dystopian, that’s, you know, what’s going to happen.

And I think you said on another interview, you know, AI opens up all of these possibilities, but it’s up to us to choose which path we go, which, I really appreciated what you said about that.

I know you’ve also said transparency is a very big element to help build trust. And since you said it a little bit earlier in our conversation about, you know, the risk of bad actors, I did want to surface that, because we’re going into 2024. This episode will be out sometime in 2024, and it is a massive election year, and I didn’t realize how big until I went to the Content Authenticity Initiative’s symposium.

All these AI things have these big mouthful of words and acronyms, but one thing that they shared at the symposium is that there’s going to be over 40 national elections taking place globally.

And I’ve seen two different metrics. The Economist reported out that over 4 billion people will be going to the polls, which is, you know, half of the planet voting next year, and we won’t see another election year like this for a quarter of a century, which is pretty wild when you think about the bad actors with misinformation, disinformation, deep fakes hitting the election, you know, news cycles and whatnot.

And then also, you know, from the seat that you sit from the Meta oversight board of content moderation, you know, it, it opens this whole can of worms. But how, how are you thinking about AI going into 2024 through the lens of it being a pretty wild election year?

Dex Barton: Yeah, absolutely. And I think just a few hours ago, actually, Google published a blog outlining all the different steps we’re taking to secure elections in 2024. And there’s obviously a lot of different things in there about, you know, establishing, you know, best in class security and really, you know, having the kinds of tools and systems that allow us to have confidence there.

You know, I think there’s, there’s not a single, you know, easy fix for securing elections. Obviously, AI is something that is going to be used in a lot of different ways, you know, to generate content, you know, to analyze information. There are probably many, many different things that are needed as part of the solutions that we need, you know, to really ensure that in lots of different areas, not just elections that people do have trust and confidence in the way institutions function more broadly.

And that’s obviously important for democracy, but also business and society. You know, earlier in the year, you know, Google created new disclosure rules for election advertising, for example.

So, if you’re using AI in, in your messages or visuals, you know, that that’s something that needs to be disclosed. That’s just like a small example, right, of where changing policies can have an impact. And that’s the sort of thing that, of course, you know, as you talked about, you know, in some of my previous roles, you know, other institutions have been, you know, exploring how to have that kind of impact.

But I think there’s more to come. Obviously, you know, the last few years have been incredibly challenging for, you know, the way that elections and democracy and democratic institutions have functioned as they’ve had to adapt to new types of technology. And this is something where we’ll obviously have a bunch of insights and expertise from the technological side.

But then, of course, we need to work together with policymakers, with regulators, with civil society, with experts to then figure out how do we all work together, you know, to really ensure that, you know, ultimately democracy is something that, you know, remains, you know, secure and is supported well by, by AI and other technologies.

Helen Todd: I think it will be a very pivotal year for democracy. And I think maybe another buzzword going into 2024, and just the future in general, is collaboration from industry, academia, across the whole board to really solve some of these big challenges that you’ve outlined. So yeah, buckle up.

2023 was a wild year, and 2024, between the election and everything that’s going to be released and the new tech, is going to be pretty wild.

Well, in talking about 2024, you’ve done over 50 talks in 2023 and talked to a lot of different audiences. What’s been kind of a main theme or a main question that you’ve been asked a lot when you’re up on stage that you can share with the Creativity Squared community?

Dex Barton: You know, I think more than anything, you know, even with all the, you know, very important, you know, conversations we have to have about, you know, safety and responsibility and elections and, you know, other challenges that AI presents, you know, I think people are just trying to understand what does the future look like?

You know, obviously when the smartphone revolution came along, you know, we saw tremendous, you know, change across every aspect of our lives, you know, billions of people ended up using smartphones. There was a whole new, you know, economy that was powered, you know, by apps, you know, you saw the implications from the economy to foreign policy, to civil society to obviously elections.

And people this time they’ve gone through that experience just in the last, you know, 15 years. So, we’re all, I think, very cognizant that AI potentially is going to be a much greater transformation, right? Because it’s something that builds upon the previous generations of change and, and innovation, including, what happened with the internet and social networks and smartphones.

So, you know, very much everywhere I go in the world, you know, business leaders, civil society leaders, you know, government leaders, everyone is trying to figure out, what are those biggest points of disruption? What are the things that are exciting opportunities that are going to change the way we live? You know, obviously, you know, I think all of us dream of living in a world in which, you know, cancer is defeated.

You know, and healthcare is massively transformed, and you know, some of the challenges with accessing resources and providing resources for, you know, many millions of people around the world, all those things are solved. And that is something which, you know, AI potentially is going to have a very, very important part in doing that.

And then the sort of long term possibilities, right? You know, you think about a century from now, which is not very long at all in human history. And potentially you’re looking at much more dramatic changes in the way that humans live and work because of the arrival of technologies that are being developed right now.

And I think that’s something that’s, it’s very humbling to think about that, right? Because of course, many of these things will unfold after our lifetimes, but they certainly will happen in the lifetimes of our children and grandchildren.

And, you know, one leader sketched out for me when I went to Google DeepMind what the trajectory was between developing AI now to solve certain things in fields like energy and what it would take to get humans to mass habitation of the solar system.

And it actually wasn’t as far-flung a chain of events and inventions and discoveries as I would have thought. It certainly was a whole sequence of steps that I hadn’t thought about before. And, you know, as somebody who, you know, who previously had been arguing over what does a two-world economy look like with Elon Musk, actually, that floored me to think that now I might be part of building the sort of social and economic model for, you know, human habitation of the solar system in a way that, you know, SpaceX is very much focused on just the transportation system to get to another world.

So even being able to think about some of those debates and some of those very different opportunities, I think that is the incredibly exciting and sometimes slightly overwhelming thing about the age of AI.

We actually can start to contemplate these very grand futures. And so, you know, I certainly love having these conversations with people around the world. And I think people are hungry to have them.

Helen Todd: I love that. I know I put in one of my Instagram posts that oftentimes AI is described as a mirror reflecting us back to ourselves, which there’s so much truth in that, but I think it’s more exciting to see AI as a portal into what can be and exploring all these potential futures.

And one gentleman, Ramin Hasani, who’s the co-founder and CEO of Liquid AI, who just announced his Liquid Neural Networks company that came out of MIT, when I had the chance to talk to him about AI, he described it as instead of having one Einstein trying to solve, you know, one of the biggest physics questions or about the nature of our reality, AI is just going to open up 10 or infinite Einsteins trying to solve all these questions.

So to your point about just expanding our minds and what’s possible and the steps to get there is so fascinating.

Dex Barton: Yeah, actually, I mean, there was a technology that DeepMind worked on, right, AlphaFold, which I think I mentioned earlier. And, you know, this was something that was designed to solve the protein folding problem in biology.

And after more than 50 years, scientists had figured out the structure of about 170,000 proteins, which sounds like a lot, except that we reckon there are more than 200 million proteins. And so, of course, you know, a very, very long way to go. And then within the space of just a few years, DeepMind was able to go and build a system which was able to figure out and predict the structures of more than 200 million proteins and to do it with an incredible amount of accuracy.

And so, you know, that pace of advancement now that’s happening in science is truly, you know, astronomical. You know, and that’s, that’s what makes it a really interesting challenge and, you know, an adventure as a communicator, because the story now is unfolding at such lightning speed, even faster than any of the previous generations of tech that I’ve worked on.

And, you know, I was working on the mobile revolution that happened and, you know, obviously the social, you know, revolution and then, you know, the space industry and everything that was happening there. But what is happening now, I think is an order of magnitude greater at scale and speed.

You know, we’re living through history right now. And that’s something which is, you know, very, very profound. But it’s also something that, you know, we’re going to have to work in entirely new ways just to keep up with that.

Helen Todd: I love that you’ve been at so many companies at the cutting edge of tech, pushing our societies forward, and that you love story so much and understand the power of story. With things moving so fast as they are right now, it’s overwhelming. There is a lot of fear, and it’s almost like, yeah, I mean, as someone who has a podcast that explores it, it’s hard to stay on top of all of the news. I’m sure, you’re in the space, it’s hard to stay on top of all the news across all the different platforms.

Like, how does the role of story help our sometimes slow human minds wrap our heads around the technology? Maybe we could talk a little bit about, like, the power of story, because how you said it was just so beautiful.

Like how does story like address the AI overwhelm? Like and how do you get a story to break through the noise? Because there’s so much noise around AI too. I’d love to hear your thoughts about how you think about story related to AI.

Dex Barton: Yeah, I mean stories are the ways that people communicate. They have been since the dawn of time, you know, being able to repeat things to people and things that are more than just information.

They are things that resonate with the way people feel, and the way that people think about the world more broadly beyond just that single point of information. That is the, the nature of the challenge, right? And the nature of the opportunity.

When you tell a good story, it stays with you. It becomes part of you. It has an emotional impact as well as a pure transmission of knowledge. You know, and that’s what makes you then repeat it to other people and then has that kind of transmission effect, which can lead to very powerful change within societies.

And, you know, you think about all the, you know, great moments in human history and changes and revolutions and, you know, breakthroughs, you know, in so many cases they were driven by the power of storytelling, you know, done in very, very artful ways.

And with AI, you know, in this world, which is moving so fast now, and where I think all of us feel, you know, this pressure between, you know, trying to just keep up with what’s happening now, but also trying to prepare for things that are more longer term and maybe less urgent, but still really, really important.

I think stories can help us cut through it. They can help us focus on the things that are important but also allow us to communicate with lots of different people in ways that, you know, do resonate, you know, much more powerfully.

You know, I think with AI, storytelling is particularly important because so much of the technology, so many of the technological debates, are incredibly dense, like they’re actually things that are far too complicated for the vast majority of the community to actually understand, at least without, you know, spending a lot of time preparing, you know, to understand, which we just don’t have.

And so actually the ability to tell the stories where you can unpack them for a broader audience. I think that’s key to allowing us to take along the community as we develop those technologies.

Helen Todd: That was so beautifully said. This might be the most important story that you work on in your career. But as you were saying that, and that sentence popped into my mind, I’m actually curious, because you’ve had such a fruitful, amazing career so far, what has been like a career highlight for you?

Like, if you could like, look back because you’ve got so much more ahead of you and I’m, it’s always so fun following you and everything that you’re up to, but as like a snapshot of Dex right now, what’s, like a career highlight for you or maybe even one or two since you probably have more than one?

Dex Barton: Yeah. I mean, honestly, like I would say it was most recently the launch of Gemini and, you know, being able to work as obviously part of a much larger team, you know, from across Google that were shaping that story. But I think, you know, as we worked on that and, you know, the day, you know, when we announced this to the world, you know, happened, you know, just a few weeks ago, you know, I think everyone involved was struck by just what a potentially significant milestone this is on the road towards building AGI.

And it’s something that felt personally, you know, incredibly motivating, you know, to work on that, because I do want to live in that world where, you know, we are able to bring AI to solve those global challenges. I mean, that has been the constant theme of my career. We are starting to see what this technology can do, even in its much more basic form, to go and attack some of these problems.

And, you know, 10 years from now, which is an unfathomably long time in technology, you know, we have very strong confidence and foresight of what’s coming, that we think we’ll have much more powerful and valuable technologies that are brought to bear on these problems. And so, you know, I certainly feel a great deal of urgency in the work that I’m doing, you know, even though I’m obviously playing a very small part in, you know, in telling the story and, you know, the work that’s going on across, you know, organizations and experts around the world to advance this technology.

But, you know, I think everyone who works in communications, you know, wants to work on things that are ultimately meaningful for our society. You know, we want to tell stories that matter. And this feels like something which I, you know, I think you put it, you know, well, right, this might be the most important story that I tell in my career.

Helen Todd: I know that we could keep going and going, but I try to keep these interviews to under an hour and I could definitely hear so many more of your stories.

But if there’s one thing that you want our listeners and viewers to remember, whether about AI or from today’s conversation, what’s that one thing that you want them to, to walk away with?

Dex Barton: I would probably say science fiction is something that we dismiss at our peril. You know, science fiction is very often science fact before its time. That’s what I always tell people. And we’re starting to see technology allow us to do massive and audacious things.

And I think there’s two ways that a lot of people approach that: they can take it seriously and understand what’s coming and the technologies that are powering that change and try to learn more and to adapt to that. And the other very human reaction is to not take it seriously and to sort of dismiss it and say, well, you know, I’ve heard lots of things get hyped before, you know, and like, I’m not going to rise to the bait this time and I’ve got other things to worry about.

And I think that would very much be the wrong approach. Like, things that may seem science fiction right now are coming, and they are going to have a huge impact on the way that billions of people live their lives.

That is just incontrovertible fact, from my point of view. So, we need to take this stuff seriously, we need to understand the consequences, because there will be things that are very, very good, and there will be things that are very, very challenging, and we need to understand both if we want to make the most of it.

Helen Todd: Again, so well said. Well, Dex, thank you so much for all of your time and sharing your insights and optimism for AI on the show. It’s really been an absolute pleasure. So thank you so much.

Dex Barton: Great to join.

Helen Todd: Thank you for spending some time with us today. We’re just getting started and would love your support. Subscribe to Creativity Squared on your preferred podcast platform and leave a review. It really helps and I’d love to hear your feedback. What topics are you thinking about and want to dive into more?

I invite you to visit creativitysquared.com to let me know. And while you’re there, be sure to sign up for our free weekly newsletter so you can easily stay on top of all the latest news at the intersection of AI and creativity.

Because it’s so important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized nonprofit that supports over a hundred arts organizations. Become a premium newsletter subscriber, or leave a tip on the website, to support this project and ArtsWave. Premium newsletter subscribers will receive NFTs of episode cover art and more extras to say thank you for helping bring my dream to life.

And a big, big thank you to everyone who’s offered their time, energy, and encouragement and support so far. I really appreciate it from the bottom of my heart.

This show is produced and made possible by the team at Play Audio Agency. Until next week, keep creating.

Theme: Just a dream, dream, AI, AI, AI