Ep3. A.I. Art Superpowers: Discover Chad Nelson’s Collab with OpenAI DALL·E on the First-Ever A.I. Animated Short Film & Vogue Cover

In episode three, we talk with Chad Nelson, an award-winning creative director and technology strategist with over 25 years of expertise in designing cutting-edge interactive experiences and entertainment. His portfolio includes industry-leading video games, TV shows (both traditional and interactive), mobile apps, and 3D tools for digital artists. As co-founder of the technology start-ups Eight Cylinder Studios and WGT Media, Chad has collaborated with Fortune 100 companies such as Microsoft, Intel, Sony, and Virgin. Chad also serves as a creative collaborator with OpenAI.

“A.I. tools aren’t replacing me — they’re giving me superpowers.”

Chad Nelson

In April 2023, Chad released CRITTERZ, the first animated short film that is 100% designed using A.I.-generated visuals with DALL·E. The short was also released on the OpenAI DALL·E Instagram channel. It was created as a collaboration with Native Foreign to celebrate the one-year anniversary of DALL·E and showcases how human creativity can adopt and push A.I. tools.

His A.I. work recently appeared on the cover of Vogue Italia’s May 2023 edition with cover model Bella Hadid! Vogue Italia partnered with Carlijn Jacobs to shoot Bella Hadid at an empty studio in New York City. From there, Chad and Carlijn utilized DALL·E to ideate, visualize, and generate the backgrounds and extend the fashion.

In our conversation with Chad, you’ll get to hear all about his creative process, what questions he’s thinking about related to A.I., how he approaches prompts, the making of CRITTERZ, and much more!

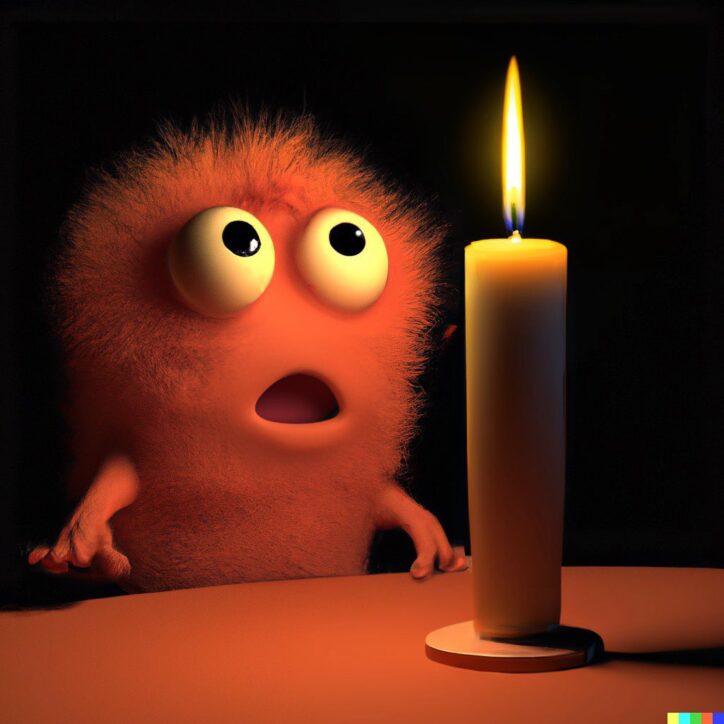

“a red furry monster looks in wonder at a burning candle” Source: @dailydall.e

Discovering DALL·E

When Chad first heard about DALL·E, he was immediately interested in experimenting with the technology and tried to find a way in! Through an Instagram Live he watched, he was able to connect with someone from OpenAI and receive access to an early beta for artists.

It became the start of what turned into an amazing, unexpected year of just creativity and discovery.

Chad Nelson

He sees his work as “before DALL·E” and “after DALL·E.” When he first got access to DALL·E, there were a lot of guardrails about depicting people and celebrities. So he turned to his old animation days and typed in “A red furry monster looks in wonder at a burning candle.” He was immediately blown away and knew A.I. had the ability to create something people could emotionally connect with. That day he produced more high-quality work than in any single day of his life. It was so much work that he was inspired to set up the Instagram account @dailydall.e. He shot off an email to OpenAI with some images to thank them, and around midnight, he posted the image of the furry monster looking in wonder at a burning candle on his new Instagram account and then went to bed.

CRITTERZ

Chad started to build these “muppet-type/Jim Henson/Pixar” characters, and OpenAI’s researchers couldn’t believe that DALL·E had fully made the images without Photoshop. Chad wanted to push the technology and see if it could tell a story, and pitched the idea to OpenAI to collaborate on a film together. CRITTERZ was born!

The whole point of this is to share information so that people can see how A.I. actually can empower them.

Chad Nelson

The short film is a David Attenborough-style documentary that collides with a Monty Python sketch. Through its characters, it explores identity issues and holds up a mirror to our own society. Chad worked with Native Foreign to animate the film and bring it to life with voice acting. CRITTERZ was released on April 6, 2023, on Chad and OpenAI’s Instagram channels to celebrate the one-year anniversary of DALL·E.

For Chad, it’s about being open with the process, and he’s not doing this in a closed bubble. He wants people to see how A.I. can allow them to succeed in realizing their dreams and visions as opposed to just feeling they have no outlet.

The DALL·E Difference with Inpainting and Outpainting

Chad’s choice of DALL·E as his go-to tool is informed by its inpainting and outpainting capabilities, which set it apart in the space. DALL·E is an A.I. model created by OpenAI that generates images from textual input. Inpainting and outpainting are two techniques DALL·E offers for editing and extending those images.

Inpainting is the process of filling in missing or damaged parts of an image. With DALL·E, you select a region of an existing image and describe what should appear there, and the model regenerates just that region to blend with the rest. For example, starting from an image of an armchair, you could select the cushion and prompt “a torn cushion” to get the same armchair with a visible tear.

Outpainting, on the other hand, is the process of extending an image beyond its original boundaries. DALL·E uses the existing image as context and generates new content around it, producing a larger picture. For example, starting from an image of “a penguin standing on an iceberg,” DALL·E can generate a larger landscape in which the iceberg and penguin are a small element.

These techniques allow DALL·E users to build more complex and detailed images than a single generation would produce. By combining inpainting and outpainting with ordinary text-to-image generation, an artist can iterate toward an image that closely matches what they have in mind. In Chad’s case, outpainting let him bring the CRITTERZ story to life in a wide, cinematic frame rather than a square image for Instagram.
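To make the outpainting idea concrete, here is a minimal Python sketch of the arithmetic involved in widening a square image to a cinematic frame. It only computes how much empty canvas would need to be generated on each side; it is not OpenAI’s implementation, and the function name `outpaint_padding`, the 1024-pixel starting size, and the 16:9 target are all assumptions chosen for illustration.

```python
def outpaint_padding(width, height, target_w=16, target_h=9):
    """Return (left, right, top, bottom) pixels of new canvas needed
    to reach the target aspect ratio without cropping the original.

    Hypothetical helper for illustration; not part of any DALL-E API.
    """
    # Compare aspect ratios by cross-multiplying, which avoids float rounding.
    if width * target_h < height * target_w:
        # Image is too narrow: keep the height and extend the width.
        new_width = -(-height * target_w // target_h)  # ceiling division
        extra = new_width - width
        return (extra // 2, extra - extra // 2, 0, 0)
    # Image is too wide (or already matches): keep the width, extend the height.
    new_height = -(-width * target_h // target_w)  # ceiling division
    extra = new_height - height
    return (0, 0, extra // 2, extra - extra // 2)


# Assumed example: a square 1024x1024 generation widened to 16:9.
left, right, top, bottom = outpaint_padding(1024, 1024)
print(left, right, top, bottom)
```

For a 1024×1024 image, this works out to just under 400 pixels of new canvas on the left and right, which is the region an outpainting tool would be asked to fill.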

“A striped hairy monster shakes it’s hips dancing underneath a disco ball” Source: @dailydall.e

The Implications of A.I. for Artists

But does all this mean Chad will eventually be out of a job? No. He steers the ship. He did ask himself whether he was training a model to do his job and making himself extinct. However, he realized he was a curator or, ultimately, the art director. DALL·E can produce these images but can’t tell if they are great or terrible.

Let’s work through these things together, and really build the future that we all dream of that we all want. And that at the end of the day is empowering to humans.

Chad Nelson

A.I. is really just enhancing his work and making him think through his ideas much faster than before. In that moment, his thoughts went from fear to empowerment. At the end of the day, A.I. is supercharging his art and he’s always in the driver’s seat.

Chad does realize there are many implications when it comes to A.I. and copyright and protecting artists. Technology will always move faster than the law. He believes in asking questions and figuring out the answers together. Since the law is behind, companies have to take a more responsible stance and take the more ethical approach over the capitalistic approach.

As a collaborator with OpenAI, he has found it to truly be a two-way street with the researchers. The conversation is open about what DALL·E can and cannot do, and he’s encouraged by how much the company listens to artists like himself.

Source: @dailydall.e

Chad’s Approach To Prompts

As we’ve said previously, 2023 is the year of the prompts! We were eager to hear how Chad uses them for his work. He likes to begin with a simple, conversational statement, and then build on it by describing the setting, characters, atmosphere, and aesthetics. Chad likens it to writing a screenplay and suggests that prompts should be logically structured and easy for the computer to understand. Some artists take a more poetic approach to their prompts and that can result in amazing art that Chad compares to a visual slot machine. Try what works for you!
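One way to picture this layered approach is as a small Python helper that starts from a plain statement and appends optional details. This is only an illustrative sketch: the function `build_prompt` and its parameter names are hypothetical, not something Chad or OpenAI describes, and every detail beyond the furry-monster sentence is made up for the example.

```python
def build_prompt(statement, setting=None, atmosphere=None, aesthetics=None):
    """Join a simple statement with optional descriptive layers.

    Hypothetical helper; the layer names mirror the order described above.
    """
    # Keep only the layers that were actually supplied, in a fixed order.
    layers = [statement, setting, atmosphere, aesthetics]
    return ", ".join(part.strip() for part in layers if part)


prompt = build_prompt(
    "a red furry monster looks in wonder at a burning candle",
    setting="in a dark wooden cabin",        # made-up detail
    atmosphere="warm, flickering light",     # made-up detail
    aesthetics="soft felt puppet style",     # made-up detail
)
print(prompt)
```

Starting with only the first argument and adding one layer at a time makes it easier to see which detail changed the result.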

It’s almost like more of a visual slot machine, you don’t even know what you’re gonna get back.

Chad Nelson

He also has found that DALL·E has knowledge gaps as it’s still learning. When he can’t push the images any further, he calls it the DALL·E dead end!

Image credit: Vogue Italia

From CRITTERZ to a Vogue Cover

Condé Nast partnered with OpenAI and Chad for Vogue Italia’s May 2023 cover featuring Bella Hadid, and we were lucky enough to talk with Chad about the project before it hit newsstands.

“The age of A.I. is here… and this is only the beginning.”

Chad Nelson

The vision for this project was to meld traditional high-end fashion photography with A.I.-generated visuals. Vogue Italia partnered with fashion photographer Carlijn Jacobs and supermodel Bella Hadid to make it happen. Bella was photographed in an empty space, and Chad used DALL·E to create surrealistic backgrounds and extended fashion, producing imagery that couldn’t be achieved through normal photography. Chad shared:

“Within each photo (15 in total, including the 2-page foldout cover), we generated hundreds of images to get DALL·E to match Bella’s lighting and camera perspective so that we could build the wardrobe extensions and surrounding environments. The images range from realistic to surreal as we wanted to showcase the extent of DALL·E’s visual capabilities.”

When Chad first started using DALL·E, he could never have imagined the doors being opened and the opportunities being presented. He told us that never in his life did he expect that his work would be on a Vogue cover.

Image credit: Vogue Italia

A.I. Opportunities

Tools and technologies are making it easier for anyone to create artwork. You don’t have to afford art school or walk into the Louvre to pursue a career. Chad believes A.I. tools will open up doors and allow artists to explore new ideas at a rate that has never been possible before. Plus, you don’t have to stay in your lane. You don’t have to pick only graphic design or animation or editing. In his own career, Chad has found exciting opportunities in his “after DALL·E” chapter that he didn’t know were possible, and he has connected with artists to build a community.

Chad believes in having important conversations around A.I. and asking the tough questions about how it will affect artists and the world. However, he sees more optimism ahead than pessimism. In one year, A.I. has allowed him to produce more art and push himself creatively more than he has in his entire life. He has used it as fuel and truly believes that A.I. can empower humans.

Additional Links Mentioned in the Podcast

Connect with Chad on Instagram

Connect with DALL·E on Instagram

Try DALL·E 2 for yourself

Native Foreign, collaborator on CRITTERZ

Washington Post article on Hollywood filmmaking and A.I.

Connect with Karen X on Instagram

Connect with Don Allen Stevenson III on Instagram

Bella Hadid on the Cover of Vogue Italia: When Photography Plays with Artificial Intelligence

Continue the Conversation

Chad, thank you for being our guest on Creativity Squared!

This show is produced and made possible by the team at PLAY Audio Agency: https://playaudioagency.com.

Creativity Squared is brought to you by Sociality Squared, a social media agency that understands the magic of bringing people together around what they value and love: https://socialitysquared.com.

Because it’s important to support artists, 10% of all revenue Creativity Squared generates will go to ArtsWave, a nationally recognized non-profit that supports over 100 arts organizations.

Join Creativity Squared’s free weekly newsletter and become a premium supporter here.

TRANSCRIPT

Chad Nelson Episode Transcription

Chad Nelson: You know, ultimately I’m the one that’s driving this ship, and the AI is really just enhancing me. And so to a certain degree, I, almost, it hit me, I’m like, no, what these tools are doing, they’re not replacing me. They’re giving me superpowers.

Helen Todd: Chad Nelson is an award-winning creative director and technology strategist with over 25 years of expertise in designing cutting-edge interactive experiences and entertainment.

His portfolio includes industry-leading video games, TV shows, both traditional and interactive, mobile apps, and 3D tools for digital artists. As co-founder of the technology startups Eight Cylinder Studios and WGT Media, Chad has collaborated with Fortune 100 companies such as Microsoft, Intel, Sony, and Virgin.

Chad also serves as a creative collaborator with OpenAI. Chad and I met after the release of one of his recent creative ventures, the animated short film CRITTERZ. This short is a groundbreaking achievement as the first film with visuals generated entirely by OpenAI’s DALL-E, then brought to life by incorporating classic Hollywood animation techniques.

And we waited to release this episode because OpenAI’s DALL-E has changed the trajectory of Chad’s career and hitting newsstands this May 3rd, you can see Chad’s AI artwork featuring real photos of Bella Hadid on the cover of Vogue Italia.

When it comes to visual artists and pioneers at the forefront of collaborating with AI, look no further than Chad, who sees AI empowering him with superpowers to push his own output and creativity, and who never in his life imagined that his art would end up on a Vogue cover. Today, you’ll get to hear all about Chad’s creative process, what questions he’s thinking about related to AI, how he approaches prompts, the making of CRITTERZ, and so much more. Enjoy.

Theme: But have you ever thought, what if this is all just a dream?

Helen Todd: Welcome to Creativity Squared. Discover how creatives are collaborating with artificial intelligence in your inbox, on YouTube, and on your preferred podcast platform. Hi, I’m Helen Todd, your host, and I’m so excited to have you join the weekly conversations I’m having with amazing pioneers in this space.

The intention of these conversations is to ignite our collective imagination at the intersection of AI and creativity to envision a world where artists thrive.

Chad, so excited to have you here today. For those who don’t know you, can you introduce who you are and what you do?

Chad Nelson: Yeah. So thank you for having me first of all. I’m Chad Nelson. I’m a creative director. I’ve been doing this for almost three decades. And I’ve directed creatively video games, television shows, social media, advertising campaigns, brand strategies. So I’ve had a very interesting background playing with a lot of different ways creatives can essentially touch content, touch digital technology, and then get it to essentially audiences.

Helen Todd: And one thing that you had said is, you’ve kind of made a career out of testing new tools.

Chad Nelson: Mm-hmm.

Helen Todd: And we’re here today to talk about DALL-E and your new film, but I’d love to hear kind of, I guess, how you came to, to find DALL-E and how this tool is really different than the other tools that you’ve been playing with.

Chad Nelson: Yeah, it’s, it’s been fascinating because OpenAI emerged on the scene, I think in late 2020 or mid 2021 when GPT-3 was kind of getting a lot of press and people were starting to talk about natural or large language models. And I was very interested in how that could be applied to say, a video game type application where you could almost use GPT-3 to do dialogue in games as opposed to just pre-recorded scripts or script trees, as we kinda like to call it.

And so I was very interested to see how that could be applied, but then, and so I started to kind of figure out, okay, well, should I write them? Are they licensing? And it was still in a R&D phase for them.

And then they dropped this DALL-E news and I knew, okay, wait a second. I’m a visual artist. I’m a designer. I live for visuals, art major. And so I, I’m like, I have to try this. I have to experiment with what this technology can do. Because when I saw the renders and the first outputs, I mean, they weren’t just primitive, you know, like chicken scratch or cave paintings. Now these look really, they started to look very good.

So, gosh, long story short, I didn’t know anyone at OpenAI at the time, so I was like, you know, barking up various trees through LinkedIn and so forth. And then one of the artists I follow on, on Instagram, is a guy named Don Allen III. And, there’s also Karen X.

And they, these are two influencers. They’re very, they’re, you know, very well known in the creative community. And I saw this, this pod or Instagram announce, that Don was going live with OpenAI. Like, wait, whoa, whoa, whoa, whoa. I stopped. I literally, I think was at a farmer’s market. I stopped, I raced home. I’m like, I need to watch this and see what’s going on here. I wanna learn as much as I can.

And then a woman from OpenAI was on the livestream as well. And at the end she said, anyone who is here, and I think there was about 60 of us, send me an email. Here’s my contact info and state why you would like to have access to DALL-E. So of course I wrote her immediately.

I think about a week later, I got access. And that is when this whole journey began. I mean, it’s literally began the start of what’s turned into an amazing, unexpected year of just creativity and discovery. And, and I, I can, yeah. Looking back now, it almost is ironic that it was almost like that, that time of before AI and or before DALL-E and now after DALL-E, like that literally was the weekend.

Helen Todd: That’s amazing. And, I also just, the social media person in me loves the Instagram throughline of how you got connected with OpenAI and that’s also how you and I got connected too.

Chad Nelson: That’s right.

Helen Todd: Which we’ll get to the animated film in a second, but tell me like the first day that you played with DALL-E, cuz that was kind of like an aha moment.

Like what, what your first experience was and then you also started an Instagram account that, that day too.

Chad Nelson: Yeah, so Open had been showing, OpenAI had been showing images of like things like a squirrel on a skateboard in Central Park or an astronaut, you know, eating cereal on the moon. You know, just they, you know, they’re kind of fun novelty images and they seemed like little party tricks to a certain degree or just like, and it was almost like a lot of the images to date were like, take A plus B, and the AI or DALL-E would produce, you know, C.

And that was cool, and I thought that was fun. But when I got access to it, I was very interested in how well could it simulate emotion? How well could it simulate something that we essentially connect with as a human, within, like, as an image that is presented to a human – would we emotionally respond to it?

And one of the things that I was almost instantly, in a way disappointed was, is that OpenAI said in the beginning, we could not do renderings or generations of people, of actual people. They didn’t want the deep fake concerns. They didn’t want people doing celebrities or public figures. And so they threw up those guardrails.

And so I felt, well, I still wanna explore this, this concept of emotion and, and how we can simulate that. And so going back to some of my old animation days, what do you do? You personify it through characters. And so one of the first prompts I essentially typed in was, a red furry monster looks in wonder at a burning candle.

Which just sounds like, okay, I don’t know what the red monster looks like. I don’t know anything about the room or the setting, but I know he’s looking in wonder. Hit, I hit, you know, enter generate, and 15 seconds later this image popped up and it’s the first image that’s on my Instagram account now. But I was blown away because the character, you could see it immediately in its face, its eyes, its expression.

Yes. It was like this little imaginative, almost Sesame Street, Jim Henson sort of looking Muppet sort of creature. Maybe a hint of Pixar in there as well, but it truly captured wonder. And I related to it immediately as like, as a person, I was like, oh my gosh. This was like, this is a moment now where the AI has created something and I’m emotionally connecting with it.

And so that started me on a journey for the next, almost somewhere between six to eight hours. I could go back and look at the log, but it, of just exploring different emotional states and joy to sadness, to I literally was like, oh, can I have these characters – can I make characters dance? And I did a whole DALL-E disco theme.

And so I basically spent that whole day just creating character after character until it finally I was like, all right, I’m tired. I better, you know, call it a night. And I looked at the body of work that I had produced and it hit me. I have never produced in a single day as an artist of almost, well, you know, of multiple decades, I’d never produced that much output in a single day like that ever before in my life at a quality that I thought was, maybe not everything was great or everything was perfect, but oh my gosh, most of it was, it seemed like finished quality renders.

And so I packaged up a few of them. I sent them to OpenAI and thanked them and then I created the Instagram handle, Daily DALL-E, cuz I was like, wow, I have enough content here I could post for the next, you know, 30 days, 60 days with, like, great content just once a day, Okay. I guess I’ll call it Daily DALL-E.

And so, yeah, that’s how the handle came to be. So I think I made it like that midnight, around midnight that night and I put up the candle or the red furry monster with the candle as the first post and then went to bed. And that was, that was literally my first day with DALL-E.

Helen Todd: I love that. And for all of the listeners, and if you’re watching on YouTube, we’ll put the images and the links to where you can follow Chad and see this for yourself. Cuz I think one of the cool things that I’ve noticed with any of these tools is like, really when you demo and start playing and see for yourself, that’s when the like aha moment, you know, really clicks and people start really understanding the power of these tools.

So I, you had said I think in your Instagram Live with Natalie recently that, or she shared that the researchers at OpenAI didn’t believe that the images that you were creating were coming from DALL-E. Was that, did that start with that first, red monster looking at the candles?

Chad Nelson: So yeah, so the email that I sent OpenAI, I essentially made a collage or like, of I think 30 of the, my favorite creatures or critters essentially that I, that I built that day or generated that day, I should say.

And, and, and I sent that off to them. And that’s when Natalie wrote me back a day later and said, oh my gosh, these are amazing. I’d love to show them to the whole team. We wanna find out more about you. And so I’m like, sure. Absolutely. And then they wrote me again saying the researchers wanted to know how much of this was Photoshop versus DALL-E.

And, and I, and I, no, no, it’s, it’s all DALL-E. I mean, you obviously could check my logs. And so then a day later from that, then they replied with, the research team would like to meet you. They wanna find out more about how you went about the process you used to get these results because they didn’t think it was able to produce at that level, at this stage, in terms of its training, and its, and its, you know, I guess you could say based on its training thus far.

Helen Todd: Yeah, which is really amazing. And I know one thing that you and I have talked about recently is like that it’s so interesting that the creators of these tools don’t fully understand what they’re fully capable of yet.

And like they’re looking to artists like yourself and users to kind of see how far it gets pushed.

Chad Nelson: Yeah, no, I mean, that’s definitely one thing that OpenAI has done very well, I think over this last year, is the researchers definitely have a way of, you know, they have their, their own likes and their own personalities and their own quirks or interests and so forth. And so I’m sure they’re testing through a lot of different elements.

But when all of a sudden you have a collection, I think they’ve built around a dozen or so, maybe two dozen artists kind of in a little circle, if you will, a trusted circle, to say, experiment with the tools, push it, take your unique style, or your unique interest, be it fashion or architecture, or in my case, in doing more kinda entertainment and animation and so forth and characters.

So each of us pushes it in different ways and gives that result back to their team. But in the beginning, they hadn’t established that yet. That was like, so in a way it was still, very much the early days, like the clearly, the pioneering, opening moments, if you will, of, let’s explore this nation and see what’s, what’s out here in the Wild West.

Helen Todd: So April 6th, 2023 was a big day for DALL-E. It was the one year anniversary. And on this date was a special day for you too, where you released CRITTERZ, the first ever AI-generated, animated short. So tell us, you know, the story of how CRITTERZ came to be.

Chad Nelson: Yeah, no, it was incredibly exciting. We were, I was so thrilled to launch the film. We had been working on it for now a number of almost a few months actually, since the holidays. And it all came to be because in the beginning when I started working with DALL-E, as you, as I mentioned, you know, I started creating all these characters. And in my mind I was like, well, I have backstories for these characters.

I have, like, you know, there’s motivation. You know, it’s like Disney with the three or Seven Dwarves. It’s, it’s like there’s stories behind these, these funny little personas that I’d love to tell, but I didn’t really think anything of it for a while. And then in September, OpenAI released outpainting, and then outpainting suddenly allowed the image to go from squares, you know, almost like little Instagram posts to now you could do full letterbox HD images.

And now I thought, well, now this is now taking us from what was kind of a fun little, almost a photo, like a Polaroid. And now I could actually do something cinematic. And it’s that point where it hit me. I’m like, oh, no, no. Now is the time we need to start making and tell, like, really, let’s tell a story.

Let’s make a movie. And so I pitched it to OpenAI, I think around right after Labor Day, and just said, Hey, what, what, what’s your thoughts? And then their marketing team and comms and Natalie, and, and they were like, oh my gosh, could we do something? That would be incredible. Do you have an idea? And so I said, yeah, I have an idea.

I’d love to take the characters that I created in the first day that you all know, and you’ve all now seen and everyone’s seen, and let’s bring them to life and let’s do it through a David Attenborough-style documentary. But instead of it just being that, let’s have it all of a sudden collide with a Monty Python sketch.

And all the little critters speak and all the, the narrative that the Attenborough character says about who they are and what they, you know, what they do in their daily routines is wrong. And what you really find out is these characters are a little bit more, these critters are a little bit more like us in that they’re going through identity issues, they’re in therapy.

One’s maybe a conspiracy theorist or you know, like one, one is a female executive of the forest. And she’s still always struggling with the idea of getting respect. And so I wanted to be a little bit of a reflection of our own society through, of course, these characters and do it as a short film and make it just a fun, you know, kind of clever, humorous, five-minute experience to really push the technology.

And let’s see, could it, could it actually tell a story? And I think that’s how it all, yeah. That’s how it all came to be.

We basically then said, all right, once Open agreed to it…

Helen Todd: Yeah. I, I think, I think that’s a key point I didn’t say in the introduction, is that not only did you release it on April 6th, you released it in conjunction with OpenAI through their Instagram account as well.

Um, so big congratulations on that. But sorry, I didn’t mean to cut you off.

Chad Nelson: No, no, no, no, at all. I mean, we, we wanted it to be, it was always a collaboration. I mean, OpenAI, this was, this was really for them to showcase the tools and I’m so glad that they trusted myself. And then I, one of the producing partners that I had worked with in the past for a number of years, company called Native Foreign, and they’re down in Los Angeles.

They do advertising campaigns, commercial campaigns, a lot of production. And I knew they could really jumpstart, essentially CRITTERZ in helping out with casting, getting very talented voice actors. I didn’t want it to be, essentially AI voices or simulated. I really wanted to utilize real Hollywood talent to bring the characters to life.

And then they also had a repertoire of animators, Emmy Award-winning and nominated animators who had done known cartoons and a ton of commercial work. And so in a way, I was providing, if you will, a storyboard or kind of building the world and setting the stage. And then I, in partnering with them, they could then run with it and really bring it across the finish line.

So, yeah, it was really, it was a wonderful collaboration between myself, Open and Native Foreign that actually allowed it to come into fruition.

Helen Todd: Yeah. And it’s really fun. We’ll, we’ll link to it and make sure to embed it in the show notes so everyone can watch it. It’s definitely worth the five minutes. And I know you wanted to share a little audio clip so do you wanna tee up, a little line from, some of your critters, for audience to listen to now?

Chad Nelson: Yeah, sure. I mean, this, the basic setup is we, I’m gonna have you listen to, the characters when the narrator David Attenborough, who’s actually not David Attenborough, he’s David Attenborough’s neighbor Dennis, who is trying to basically be his own David Attenborough type, you know, documentary filmmaker.

And this clip is going to be when he suddenly hears the critters speak for the first time as they start talking back to ’em. So yeah, let’s let it roll.

CRITTERZ clip: Looking upward, we find the red jackal spider. It’s hairy, distinctive for its, excuse me. Wait, I, I’m sorry, who is speaking? I’m speaking. Do you? Oh dear.

I’ve eaten the wrong berries. Nope, it ain’t the berries. What? Who are you? I’m Frank, and I’m blue, which is weird cause I’m a red spider. I could not call you Frank or blue. This is a science documentary. Science. Ha. You think secretly filming someone while they sleep is science? What? Yes. Why no? It’s creepy. So creepy.

Helen Todd: Definitely watch the full one. I don’t wanna give any spoilers. And we’ll also link to the making of the behind the scenes too, and we’ll get a little bit more into that. But one thing I know that you’ve said that really resonated with me is like, kind of the intent and goal behind CRITTERZ, is to kind of start a larger conversation about what these tools can do and also, you know, be an inspiration.

So I’d love for you to kind of talk about, you know, sounded like you had a lot of fun, but kind of some of your goals, going into creating this short.

Chad Nelson: Yeah, I mean, I think it also stems back to even what my whole goal with the Instagram community that has now just emerged. I mean, I, my personal goal was never to become an influencer in social media.

It just somehow happened because of all the interest that I started gaining followers. And so in a way it’s kind of a happy accident that this has all happened. But one of the roles that I’ve learned that I guess I wanna take is how can I help inspire and how can I entertain, you know, the audience that I’ve at least been able to grow thus far.

And then in partnering with Open, you know, how can I continue to entertain and or more so to educate and inspire their community? Cause their community is much, much larger. And I think there are a lot of questions that people are asking about what does AI mean to creativity? What does it mean to the Hollywood community, the art world?

What does it mean in terms of, there’s numerous ethical issues and, and so forth, which I’m sure we can talk about more deeply. But my point with Critters was, I like the idea of going through a real case study, let’s say, okay, we’re gonna make a movie, we’re going to write a script, we’re gonna hire actors, we’re gonna bring on the animators, we’re gonna, you know, edit it, put in music, and do all the visual effects and take it across the finish line.

And at the end we can say, what did we really learn from this? Where did AI really speed up the process? Where did it maybe, where is it still kind of, weak or, or actually not even providing any benefit at all?

And not doing that in a closed bubble for our own knowledge, but more so to say, hey, community, you know, wherever you are in the world, Hollywood, or just a storyteller of any sort. Like, yeah, come, come find me. Send me a message. I’ll happily talk, because that’s the whole point of this, is to share information so that people can see how AI actually can empower them and allow them to succeed in realizing their dreams and visions or ideas in their head, as opposed to just feeling like they have no outlet to explore them.

Helen Todd: Absolutely. And we’re definitely gonna get more into those questions. One thing that really resonated when we spoke too is to be sure to be asking these bigger questions, but also like, have fun and explore and like, tap into the possibility that these tools offer. But since we did mention the harder questions, let’s dive into that just a little bit.

Yeah. Especially since you’re like so close to it and are creative in the space. And then we’ll go more into the technical and fun things too. I think one of the things that we discussed kind of is, you know, when I, even when I tell people about this podcast, I see sometimes a really visceral reaction of like, fear.

And I’ve already got a few emails about the downsides, and I’m sure you have too, and I know jobs comes up a lot, but how, how do you respond and how do you think about it, when you have these conversations?

Chad Nelson: Well, I first start with my own personal journey with it, which is, in the beginning I was amazed, as I said. When I got DALL-E, I was playing with it, building characters, you know, all of that was true.

There was definitely a moment, maybe a day or two later, that it started to hit me that this, could this replace me? Am I basically, am I training a model to suddenly do my job and then thus I’m slowly going to become extinct? That was a very, of course, real fear. And then, and I had to go through this process of kind of rationalizing and, or kind of grappling with that thought, but then all of a sudden, at a certain point, it hit me.

Which was, I see what these AI tools can do, and they, they definitely can produce a myriad of amazing images. But what I found is that it has no idea if one image is great and one image is just terrible. I’m still, in a way, a curator or ultimately an art director, a visual designer, a creative, well, really a creative that’s saying, no, no, let me steer this ship, let me guide these tools to produce the work that I think would best resonate with whatever that spark is that’s in my head. Like, the tools are not gonna deliver that on their own.

I need to be the one that kind of still drives it. So artistically, I found, no, I’m still in the driver’s seat and I still find that yes, I mean, the tools will get even more advanced from where they are now. And don’t get me wrong, I think that’s going to happen, but I find that as an artist, as a creative, I’m still, I really like the idea that I am driving, essentially using these as tools to drive a vision and to create a vision and not really the other way around.

In going through that process of building all these characters, I definitely felt that sense of fear for a good while where it’s like, oh my gosh, am I training something to essentially take my job or replace me at some point?

But then it hit me where I realized, no, I’m in the driver’s seat. I’m the one that’s actually determining if, you know, one image is great and one image is terrible. I’m the one that’s actually steering, if you will, these visuals through inpainting and outpainting, which we should talk about in a second too, and what that means.

But, you know, ultimately I’m the one that’s driving the ship and, and the AI is really just enhancing me. And so to a certain degree, I almost, it hit me, no, what these tools are doing, they’re not replacing me. They’re giving me superpowers. They’re actually making me think through my ideas much faster than I’ve ever been able to think through ideas before.

And I think that all of a sudden is when it hit and it turned from fear to empowerment, and so that’s where it was like for myself, I’m like, I wanna continue to perpetuate that message and in a way be an evangelist of that so that others can say, yeah, you should, you know, give it a try and learn these tools, because I think what you’ll find is if you have any creative sparks in your head, that these tools are gonna help you explore those ideas much faster than you’ve ever been able to before.

Helen Todd: I love that. And, and one thing that you said, with the Instagram Live with Natalie before the release of Critters, is it also helps you determine or figure out the bad ideas too. And that that’s a really important process that you do as well.

Chad Nelson: Yeah. No, and the creative process is not a, is not a vector, you know, you don’t just say, Hey, I have an idea, and then one path, and then all of a sudden you hit execution or the finish line and you’re like, oh, that’s it.

No, creativity is it’s, it’s far more like wandering through like a web of spaghetti in terms of exploration and experimentation. Sometimes the happy accidents, if you will, of just, of not like, oh my gosh, what just happened? Oh, I like that. You know, that actually can influence your art.

And I feel in a way the, that, that, that process of going through just exploring ideas, the random ideas. If you have that voice in your head that says, ah, that might take too long, I don’t wanna do that, well then that means you might have cut off an idea prematurely and not really explored it. And now what I find again with AI is that, that time as a barrier and maybe that effort, you know, since that somewhat goes away, you know, almost entirely, you can, you can really explore a lot more ideas and push the boundaries of that sandbox or that playground to really explore what you really, you know, like or don’t like, I think in a, like I said, much faster and more efficient way.

Helen Todd: Before we go too much into the more fun side and technical sides, what are some of the tougher questions related to these tools that you’re thinking about? Cuz I know you said yesterday, you know, you have probably the most problems with Midjourney from an ethical standpoint, just kind of how they train their data sets

Chad Nelson: You had to point that out, did you?

Helen Todd: Didn’t know if you wanted to speak to that?

Chad Nelson: No, I just look

Helen Todd: Well, you know, I do wanna have those hard conversations or at least

Chad Nelson: Yeah

Helen Todd: Or you know, have them on our radars to be thinking about.

Chad Nelson: All right. One of the reasons why I, I mean, don’t get me wrong. I think Midjourney is incredible in producing unbelievably good results. I mean, the Midjourney 5 is out now. I’ve of course played with it. It’s phenomenal. But I think we have to take a step back and say, well, where are these tools getting trained? Are they, is that training data licensed? Is it scraping just the public web? Is it looking at celebrities and IP owners or brands and saying, all right, we’re just taking every Porsche, every Star Wars character and every celebrity, and we’re just going to utilize them freely without any sort of agreement with those license holders or brands or celebs. And, and then also not compensate them at the same time.

So I, I, to me, there’s, there’s something as an artist where I think that’s just, there’s something that I don’t, it doesn’t sit well with me. Now, of course, the law, and I’m not a lawyer, so I’m not gonna speak to how copyright law and all these, the legal challenges that lie ahead, I, there’s, there’s much better, smarter, more experienced individuals who can speak to that.

However, I do know, as an artist, that is not the way I would want the world to work, which is, oh, once it’s on the internet, just anyone can just use it however they wish. I like how OpenAI puts up guardrails. They do. I mean, I’m sure you can find, and let’s, let’s not say, I’m sure, I know for a fact if I typed in SpongeBob, I would get a SpongeBob image in there, but it’s not based on the licensed SpongeBob imagery that they just stole from, or, and maybe steal is a harsh word, but just lifted off the public web because it happened to be on a Viacom website for Comedy Central like that. Or a Pinterest library or wherever they might have gotten those images.

And then on the flip side is there’s also things you can do to prevent people from searching for keywords. And so, although I know OpenAI loves to create guardrails on known public figures and certain celebrities and so forth, they, and even living artists, I know there, there’s, there’s numerous talks about how do you control making sure that people can’t just go type in someone who’s famous and alive right now, who’s practicing and, and living a life as an artist, and let’s just allow you to just kinda replicate their style immediately. So there’s ways of creating guardrails for that as well.

But I think, but these are the questions that we have to talk about. And then if you do wanna have your art go into a database to let it be trained, is there compensation? Do you get actually some monetary value back based on usage or some other structure?

I think these are the questions that we’re asking. And it goes back to my point earlier about it. This is the Wild West. I look at it as there is a lot of wonderfully pioneering spirit, spirited companies out there and individuals like myself, but I like OpenAI because they’re definitely encouraging the dialogue with artists, with corporations, with brands, with governments.

I know, I mean, at least I, I know that they have some conversations, if you will, with agencies and so forth about what the implications of AI are. But that said, I just feel like, yeah, we’re all figuring out the answers together and, and as much as we wanna say the law could keep up, the technology is still moving so much faster that it, it’s almost impossible for the legal side to, to be right at, you know, equal step with, with where the technology is advancing.

So that means companies have to take a more responsible stance and take the more ethical approach, versus just pure, I guess, capitalistic approach.

Helen Todd: And, and that’s one reason for this podcast to explore all the fun, but also, you know, to be part of that dialogue as well as we’re, as you said, it’s the Wild, Wild West and we’re all just trying to figure it out.

So let’s talk about more fun things unless there’s any other bigger questions that you’re thinking about.

Chad Nelson: Job loss is always the one that everyone’s like, oh gosh, the AI is gonna take my job, it’s going to take my job, and look, I’ve been in the business of creativity for almost three decades. And in all that time I used computers essentially, or the computers internet, you know, essentially digital technology.

I can tell you from when I started my career to mid-career to today, I am not using the same tools. I’m not using the same computer. I’m like, it is always evolving. Those, even the, like, if you think about it, a web developer, remember when a web developer or a web designer more so was like a job that you could like, and now it’s like, is anyone really hiring like a dedicated web designer?

Like, I mean, it’s just like, like that job came and went in a blip. And so I feel like these, whatever the next jobs are, we have no idea what they’re going to be, but I know they will exist. And, and one way I look at it is the analogy of like say film editing. So you had film editors used to use, you know, reel to reel and, and you know, of course actual film strips and the tape and, and it was a very kind of hands-on tangible process and people kind of loved that element of it, but very few people could do it.

Then digital, non-linear editing came out – AVID and so forth – and there was a lot of, of, of almost like, oh, this is not editing. This is gonna ruin the craft. There’s an artistry to it, and it takes your hands off the, you know, it’s like, it’s all now you’re separated. The human element’s separated, and that was, I guess, a concern for a bit.

But if you think about it now, there are more people editing content with essentially digital editing tools than there ever were in the eighties or seventies or sixties before that. I mean, we have far more people editing on an iPhone daily than we had in an entire Hollywood industry before. So to me it’s like, it’s fascinating how these things just, you know, we can’t predict all the new jobs.

I actually look at it and say, well, there’s a lot of optimism ahead as opposed to pessimism.

Helen Todd: Yep. And I share that viewpoint too. Like before the smartphone, you know, how many photographers were there? Now everyone’s a photographer just because it’s one tap of a button on your phone.

Chad Nelson: Right.

Helen Todd: And I think you said something funny yesterday, like the irony of the, the camera too.

Chad Nelson: Oh, yeah. I mean, an iPhone has, I mean, the latest iPhone has more software and one could even argue AI software in it than any camera ever created throughout history. And yet, I am still a photographer just as if I’m using a manual camera with an iris that’s mechanically or like No, no, no. You’re talking basically to an electronic device that is almost a supercomputer in historical standards with advanced AI that’s taking a picture for you.

So to me it’s like, but yet you’re still a photographer. So I think that’s just, yeah, it is the irony.

Helen Todd: So for the viewers and listeners who, like are hearing about DALL-E for the first time, can you kind of just give like a little 101 intro, to bring everyone into the fold of what is DALL-E?

Chad Nelson: Yeah. I mean, the best way to think about what DALL-E is doing is, imagine yourself as a human and you were given images, images of, say cats or of houses, or of cars or just of things, fish, et cetera.

And you’re given thousands and thousands of images. And so when I would type, or sorry, as an artist, if I were to type in, oh, I’m looking for a fish in an aquarium, you know, that with these attributes, the, just like a human would say, oh, okay, you want a fish, you want an aquarium? Oh, you want it on a table with a plaid, you know, tablecloth.

Okay. You have all these things recorded in your head. And you can say, okay, now great. I can then take all those items and essentially create a new image. In a way, DALL-E’s doing the same thing. It’s being taught all these things that exist in our world and not just like material things. Also qualities.

And, and so what is, what is depth of focus? What is golden hour? What is, you know, like, so if you think of all these terms, it’s given images to describe what, or to represent what those, those descriptions mean. And because of that, when you type in a prompt, it taps into all this knowledge that it has.

And then it says, great. I’m now gonna take all of that and turn it into a brand new image. It’s not Google where it’s an image search and it’s not Photoshop where it’s taking a bunch of images and then just doing some sort of magic collage. It’s literally generating a brand new image based on all the knowledge it has of the, based on your keywords that you used in the prompt.

So it is an original piece of art. It is an original creation that has been inspired by preexisting material and which is really at the, if you think about it, that’s no different than who we are as humans. All we do is we see things in the world, we store it in our brains, and then when it comes down to time to create and to express ourselves, we tap into those, that, that knowledge that that still exists up here, to then come up with something that’s maybe a new interpretation of it.

To a certain degree, DALL-E, in a way is very much like us. It’s an artist just like us, but its knowledge set might include, I don’t know, 2 billion or no, I think it’s like one point something billion images. I don’t know what the official number is, but, you know, but we also have a lot of imagery too. So in a way it’s like, yeah, it’s only getting smarter, but you know, it’s doing what we do.

And I think that’s where it’s just, it’s important for people to understand it’s not just stealing an image that’s existed on the web. It’s literally generating something anew from all the images that it has in its training model.

Helen Todd: Well, let’s talk about some of the technical aspects, cuz you did mention that you’ve tested Midjourney and a bunch of the different tools, but you keep on coming back to DALL-E.

So tell us why you keep coming back to DALL-E and some of the differences between the tools outside of the ethical stuff, which we already addressed.

Chad Nelson: Right. No, I think so, so creatively I, this is one where I think it’s very interesting because every tool out there, I mean, Adobe now has, you know, their own AI generative models. There’s Stable Diffusion, obviously Midjourney and DALL-E.

They all will do a very similar thing, which is, okay, let me type in a prompt and I get a result. And in a way that is kind of like you’re curating a piece of art. That’s like, you’re, they, it’s almost like there’s an artist sitting in your room or at your house and you’re saying, Hey, here’s what I wanna describe in, or sorry, here’s what I imagine. Can you paint me this picture?

And the better you are at describing, I think the better the result might be. But you kind of aren’t really an artist at that point in some ways cuz it’s not like you’re really doing anything. You’re just asking, in a way, that artist to, you’re commissioning it.

And I feel like that’s what Midjourney is. I’m talking to an incredibly advanced Pixar rendering engine or you know, ILM rendering engine. It’s just, it’s incredibly advanced. It’s super cool. But I don’t really feel like I’m ever getting my hands dirty. I’m not getting my, you know, fingers wet in the paint, if you will.

And the difference with DALL-E is, is I think, comes down to the very simple fact is, it has inpainting and outpainting. Inpainting basically allows me to take an area within the AI generation. And so, for example, let’s say I did a person, a portrait. I could just paint on the eyes and say, no, no, no, let’s just, let’s do additional generations on the eyes or on their lips or their expression.

I could, oh, maybe I just wanna change the whole background or paint in some clouds, and not as a painting, but as an AI generative, essentially, created clouds or landscape. And so because of that, I feel like I’m manipulating and massaging or evolving the image more like an artist would in Photoshop, or if I’m working, painting on a canvas than just commissioning a piece from a rendering engine.

And I think as an artist, that to me is a kind of a real fundamental difference because, you know, some people say, well then you’re not the artist if you use AI. And that’s where I kind of have a little bit of an issue. I mean, yes, AI is a very general term, and if I was arguing, maybe I would accept that argument, maybe if it was around just a pure Midjourney approach, but knowing what I do with DALL-E.

And so for example, with Critters, one of the characters Blue the Red Spider, I literally have probably 50 different generations for just the eyeballs. Another like 50 to 60 generations of the mouth. I played with the legs. And so it’s like ultimately that’s a creative process that you’re working the image, you’re working the ideas, you’re massaging it and pushing it.

Like I said, that is not just, oh, AI give me something and oh, see, it’s mine. You know, like, it just, it just feels different. And I feel like that is, as a creative, I really love that fundamental feeling of I’m the one that’s creating it. I’m the one that’s driving it. I’m the one that’s pushing it.

And that’s why I like the DALL-E tools. For me personally, because they are built around that type of experience.

Helen Todd: I know, I’m sure our listeners are very curious about your prompts because one thing that stood out to me too is just kind of your three decades of experience, how that your expertise informs your prompts and how you still need to know how to talk to these things.

So I’d love to hear or have you share with our listeners and viewers, you know, how you approach prompts, if you, if you don’t mind.

Chad Nelson: So in the beginning, they started very simple, almost just conversational. And that’s why the, the red furry monster, you know, looks in wonder at a burning candle. You know, it’s just a simple statement.

And some of the times I, or, or some of the prompts I’ve seen from other artists get very keyword or almost like they’re like hashtag theater where it’s like ultra realistic 4k and, you know, it’s just like, you know. And don’t get me wrong, that can sometimes work, as, again, depending on the, on the app as well.

But I found that I like to describe it more like you’re writing a little bit of a screenplay where you first describe the setting at a broad level. It’s almost like a, think of a funnel. You start at the top, which is like the broad setting. It’s a bedroom or it’s a misty bar, or it’s a misty forest, or, you know, and then you start going from setting to then the characters, or the subjects within it.

And then from the subjects within it, then you get into maybe the lighting or the time of day or the atmosphere and the mood. And then usually where I end with is the aesthetics: the camera, the film grain, or the other maybe visual details that would kind of ultimately like, oh, it was printed on a, via a Polaroid or on an old Kodachrome, you know, you know, camera print or seventies print, et cetera.

So in a way, it’s like, I would never write a screenplay by describing the camera and lighting first before anyone even knew what you were talking about. And so that’s kind of how I approach the prompt writing. When people write me and ask like, why are your prompts working out so well? And I, mine are just not working.

And I say, well, send me one of your prompts. And I realize that sometimes people just are describing things in a way where a computer wouldn’t know logically what you’re talking about. It’s almost like they’re bouncing through ideas. And so it’s like, oh, there’s a dog in the room, but the camera’s up high, but then there’s another dog and it’s behind.

Like, you’re like, wait, like, the computer’s not gonna know, like how this super ball of thought, you know, you know, is bouncing around in a box. It’s not gonna know how you’re thinking and approaching it. So no wonder your images aren’t working out as well.

Helen Todd: Yeah, because they’re reasoning, machines in the intelligence. They can’t read your mind.

Chad Nelson: No, no. You have to speak to them logically. I think the more logically you can speak to it, where it just feels like you’re, like I said, if you were reading a screenplay, the first thing you’d wanna know is like, okay, where am I then, okay, what am I looking at? And then tell me about what makes it unique or what are the attributes then of that scene in that setting.
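The funnel Chad describes (setting, then subjects, then lighting and mood, then camera and aesthetics) can be sketched as a small helper that always assembles a prompt in that order. This is only an illustration: the `ScenePrompt` class and its field names are hypothetical, not part of DALL·E or any OpenAI tool, and the example values are borrowed from phrases in the conversation.

```python
from dataclasses import dataclass


@dataclass
class ScenePrompt:
    """Hypothetical helper: holds the four funnel stages, broadest first."""
    setting: str             # where are we? (a bedroom, a misty forest)
    subjects: str            # who or what is in the scene
    lighting_mood: str = ""  # lighting, time of day, atmosphere
    aesthetics: str = ""     # camera, film grain, print style

    def render(self) -> str:
        # Join the stages in funnel order, skipping any left empty.
        parts = [self.setting, self.subjects, self.lighting_mood, self.aesthetics]
        return ", ".join(p.strip() for p in parts if p.strip())


prompt = ScenePrompt(
    setting="a misty forest",
    subjects="a red furry monster looks in wonder at a burning candle",
    lighting_mood="golden hour, soft mist",
    aesthetics="printed on a Polaroid, visible film grain",
)
print(prompt.render())
```

Keeping the fields in a fixed order is the whole point of the sketch: the camera and film details can never end up ahead of the setting and subject.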

Helen Todd: And, you mentioned, you know, you, you’ve been kind of surprised at how other AI artists that you’ve connected with, and there’s like a burgeoning AI artist community, which is really great to see, kind of some surprising things about how they approach it. So can you kind of share, so what surprised you about some other AI artists’ approaches?

Chad Nelson: Yeah, I mean, I, everyone that I’ve met, I mean, there’s so many talented individuals and some are almost more like they’re, the, the way they talk to it is more like poetry. You know? Like I’m much more logical and rational or kind of factual with my prompts and descriptive. And I’ve seen some artists that they almost describe the mood and the feeling less descriptive about the exact subjects.

And, and to me it’s fascinating because it leaves a lot more to chance, but those, that chance of that more poetic approach yields some amazing results. And visually, like when I’ve tried to do that myself, I’m like, oh my gosh. Like you feel like you’re, it’s like almost like more of a visual slot machine.

You don’t even know what you’re gonna get back, but when it does what you do get back is astonishing. And I, I do love that. But that, yeah, that’s his, that I wouldn’t have even thought about that until I had met some, one artist in particular that’s kinda their whole tactic if you will.

Helen Todd: Amazing. Well, and one thing that kind of stood out too from our conversation and just kind of watching the film and the behind the scenes, are also the limitations of these tools, and that you’ve kind of said with Nick that there’s still a lot of work with traditional filmmaking to bring it from where you started in DALL-E to what everyone can see today.

So can you kinda talk to us about, you know, the limitations and where the divide and conquer where you stopped and where Nick’s team kind of picked up the baton?

Chad Nelson: Well, yeah, I mean, in terms of visual storytelling, we’re only now just beginning. And again, depending on when this airs, I mean, this is how fast this industry works.

It’s like this could air and all of a sudden it’s like, everyone’s now doing text to video, but right now text to video is still very experimental. Most of the work I’ve seen from R&D departments or, or, you know, I mean, Google’s released some, Facebook’s released some, Runway, obviously is showing off some things with Gen-2.

It still kind of looks like almost like a dream world. It looks very like it’s like, you know, that, LSD vision where it’s like, you know, you’re kind of going through a surreal world where nothing is quite intact, nothing feels truly material. Everything feels a little surreal and amorphous, if you will.

That’s gonna change at some point. I don’t know if it’s, if it’s two months from now, heck, maybe by the time this launches, I mean, that we might, we might overcome that. But for right now, we know we can create incredible-looking stills and we know there’s this theory that you’re gonna be able to create, ultimately, essentially motion pictures.

This tech is, there’s still a pretty big jump. It’s still, I wouldn’t call it comedic, where we’re at right now, but it’s definitely in a little bit of that uncanny valley. No one would ever accept it as real. Stylistically you might be able to work through it a bit to deal with those limitations.

But, you know, it reminds me of like the days, even when like the first A-Ha “Take on Me” video came out and said, oh, let’s try doing animation on video. It was an experiment and obviously it became a signature piece in terms of video and animation because it took some new approaches to combining two different mediums.

And I think that’s, we’re in that kind of phase right now where we’re just really at an experimental playground, an R&D phase that we’re in. And eventually it’s gonna hit primetime. When that hits, I’m not sure, but probably more so months than years.

Helen Todd: Yeah, I think I saw Karen demoing one of the, she got a preview for one of those tools.

Chad Nelson: Yeah.

Helen Todd: So you did the, you set the scenes and the characters and then any movement in Critters that was really Nick and his team? Is that kind of the handoff point?

Chad Nelson: Yeah. So going back to now Native Foreign, I, you know, I helped kind of design the world and build the world and figure out what I want it to look like and then, I would have a still. I’d have a beautiful, you know, landscape 16:9 HD or 4K actually landsc- you know, image.

I would then give it to Native Foreign and then they, using their talented artists and animators would essentially cut it apart and break it into layers and then bring it into After Effects or Premiere, or, I’m pretty sure it was mostly those two tools, predominantly. Maybe there was another 3D tool like Blender or something.

But let’s just say it’s predominantly After Effects. And from there you could then say it was like your flat image is now you have a tree in front of another tree and you can basically create these layers where then as you move a camera through it, it suddenly has three-dimensional depth and you could add mist, you could add dust, you could add motion and movement, and leaves falling, and all of a sudden the world started to become alive.

And so yeah, that’s what, that was kind of the handoff that we used for this project. Because yeah, like I said, the tools weren’t able to deliver what I was hoping in terms of motion, right out of the, you know, there’s no package that could do it. So we used, yeah, like I said, traditional techniques with, combined with the AI.
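The layered camera move described above is commonly called parallax: when the camera shifts, layers nearer to it slide across the frame more than distant ones, which is what makes a flat still read as having depth. Below is a minimal sketch of that idea only. It is not Native Foreign’s actual After Effects setup, and the function name, layer names, and depth values are all made up for illustration.

```python
def parallax_offsets(camera_shift_px: float, layer_depths: dict[str, float]) -> dict[str, float]:
    """Return how far each layer appears to slide for a sideways camera move.

    Assumes a simple inverse-depth model: a layer at depth 1.0 moves the
    full camera shift, and farther layers (larger depth) move less.
    """
    return {name: camera_shift_px / depth for name, depth in layer_depths.items()}


# Hypothetical layers cut from one still, with made-up relative depths.
layers = {"foreground tree": 1.0, "background tree": 4.0, "sky": 20.0}
offsets = parallax_offsets(40.0, layers)

for name, shift in offsets.items():
    print(f"{name}: {shift:.1f} px")
```

With these made-up depths, the foreground tree slides much farther than the sky, which is the depth cue the cut-apart layers provide.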

Helen Todd: Yeah. And something that you said that I love is that you never even imagined like being able to create an animated film at the quality and level, cuz you’re not really, you know, an animator in that way. Right. But this kind of opens that up for you.

Chad Nelson: This is the, I think a general theme overall with my experience with AI is that when you start your career and you start, you’re thinking about like, what kind of artist am I going to be? What type of creative I’m going to be?

When I was in art school, one of the things that was taught to you is like, almost like pick your lane. Like do you wanna go into computer animation? Great, well then, but also what, what do you wanna go into computer animation for? Is it lighting? Is it modeling? Is it animation? Is it special effects and particle systems?

Or, and so it almost became very like, task and or technique oriented. And what I’m finding now with, with the AI and this, these opportunities is no. I mean, I might understand a lot of those techniques. I am not an expert in rigging. I’m not an expert in lighting. I’m not an expert in modeling. I understand all the principles behind it.

So as long as I can actually describe what I want, the AI can really take me across a finish line and get me the work that I think is almost, I wouldn’t say equal to what a traditional artist might do if it went through a true Pixar-type production path.

But it gets me pretty darn close. And that’s what enabled me to or allowed me to say, oh, I can make a short film. I could actually do this. I could make something that might not be truly a $100 million Pixar budget-style film. But it’s going to be pretty darn close in terms of quality. And as long as the writing and the cleverness and the humor works, I don’t think that shortcoming will even be missed.

And that’s what I hope to prove.

Helen Todd: Yeah. And it’s so exciting. Well, we’ll get to the possibilities in a second. Well, you mentioned, or we’re kind of talking about the limitations of these tools too. And one thing that you had said is the DALL-E dead end and kind of, you kind of learned a little bit about their training and their limitations of training.

So I was curious if you could share kind of the creative limitations in working with these tools too?

Chad Nelson: Well, yeah, I mean if you think about it, it’s just like working with a human. It only can produce what it knows and what it’s been taught. So if I asked it for an obscure Italian ceramic designer’s, you know, pottery from say a certain year, I mean unless it knew who this sculptor or this artist was and their work, it wouldn’t know what to do. It would give you probably something similar that would say pottery or Italian. It would just give you some sort of amalgamation of what it thinks might be best at recreating this very specific thing.

And I found that. That’s where I think it’s been interesting. As I’ve gotten deeper into DALL-E, I’m starting to find some of these knowledge gaps and holes. For example, I feel, I mean, here’s one example your listeners might respect or relate to, which is like, I needed to do this one photo shoot campaign, and we wanted to do, have a model in front of a vintage Italian sports car.

But the stylists specifically wanted a very space-age, wedge-style design from the late sixties to early seventies that Lamborghini and Ferrari and a couple others were, you know, had experimented with. And so I’m like, oh, no problem. We’ll start creating, we can, we absolutely can create that.

And then I realized, no, no. DALL-E had no knowledge of that. DALL-E actually had no knowledge of that specific model of Lamborghinis or Ferraris. It knew generic Ferraris and more of the popular ones, but it had no idea of that, that wedge-based, space-age design aesthetic that was for probably about, I don’t know, four years kind of in the auto industry. So again, it was just like, well, I guess I found a knowledge gap.

The other side of it is, as you mentioned, the DALL-E dead end. DALL-E, and the way these image generators work, is it knows, it knows those are clouds, or it knows, oh, that’s a person sitting on a picnic blanket or, you know, there’s a, a puppy on, you know, on the side. It knows those things.

But then what I find is if you do enough inpainting, and again, going back to DALL-E and inpainting, if you continue to massage it and manipulate it and, and, and change the imagery through inpainting, eventually you hit a dead end where it just realizes it, it can’t go any further.

So, for example, like you could say, I don’t know, again, let’s describe a family having a picnic. And you’ve done all this, these things to make this picnic setting perfect. And then you say, no, no, no. I wanna have a flying whale in the sky, something completely abstract.

Well, you’ve probably done so much manipulation to this image that there’s so many keywords and tags all over the place that for it to put a whale there, it’s like, I don’t know what you’re asking for. I don’t know how to do this. And so it just doesn’t. It, you’ve almost, in a way, the soup is too saturated.

I don’t know how else to describe it, but that’s what I call the DALL-E dead-end. It’s just you’ve manipulated the image

Helen Todd: Yeah

Chad Nelson: to the point where it cannot be touched or manipulated any further.

Helen Todd: No Twitter fail whales over families with

Chad Nelson: Yeah. I don’t, I dunno why that example first, but it’s a classic example, the whale that’s above the picnic.

Helen Todd: Oh, I love this, talking to creatives, cuz this is, you never know what you’re going to get. Well, and one thing that you’ve shared is that you are encouraged about, like, working with OpenAI. So what makes you encouraged in your collaboration and partnership with OpenAI as we kind of look to the future and the excitement around the space?

Chad Nelson: Yeah. I, I think it’s just they’ve been a very, they’ve been very good about keeping this conversation, very much a two-way street. Their researchers have shown me some things to test where they’re like, we’d love for you to just experiment with this, play with this. Tell us what you think.

And in return, I can answer back with my thoughts on either current DALL-E or any R&D things that they happen to show me. And in a way, I feel like the fact that they’re going into the artist community, and knowing kind of the group that I work with, I mean, I can speak to myself, I can’t speak to the others, but we are all pretty diverse though, and in terms of just even who we are as humans, but our backgrounds and our, even our professional kind of day-to-day lives.

And so each of us has a unique perspective of how we think the tools should work, how they, what we expect of the tools, or our concerns with the tools. And I, I just really appreciate the, this notion that a technology company is not just racing to create features and get it out there and just launch it, in a way that, well, we’ll ask those questions later. Let’s just, let’s just get it live and, you know, and then see what happens, and then we’ll adjust.

I think they’ve been very in tune with what it is, the implications of these technologies, might mean for humanity. And then certainly they’re, even if they don’t know the answer, they’re at least wanting to hear conversations from everyone involved just to help figure out what that answer might be.

And so that’s, that’s been my experience thus far.

Helen Todd: I love that. And I know the tools come a long way from like saving prompts and that.

Chad Nelson: Mm-hmm.

Helen Todd: Is there any, like, wishlist items that you want from the tool that

Chad Nelson: Oh yeah, of course

Helen Todd: you could share of like,

Chad Nelson: Well, I mean, there’s, look, there’s, yeah, there’s some things I can speak to, some things I cannot, but I mean, as you can imagine. Like, I’ll just, actually, I’ll also speak to Adobe, what they’re doing.

I mean, obviously they just came out with launching Firefly. I am of course very excited about that. One, I think it’s cool that they’re doing a database of, or a training model of all licensed imagery that, so you don’t have to worry about these copyright issues and, you know, stealing from other artists.

And they’re also creating a training model and a compensation model, so that as an artist, I can help them get better and, and figure out a way of getting some beneficial return from that.

But I think what’s most important from their side is, okay, great, I can take this right into Photoshop or Illustrator or Premiere, After Effects, and so forth. And it just seamlessly fits into my workflow. That is a huge advantage that as they continue to develop Firefly, I think will be, you know, strategic for them and, and certainly will be.

It’ll take AI from kind of a novel thing that maybe a few artists are doing to now, millions and millions of people are using essentially generative tools in their, in their kind of daily task as artists.

I think GPT has certainly made mainstream AI for a much larger mass of people than say DALL-E has. And, you know, just because it is the potential for what it can do. But yeah, I think, I mean as far as features specifically for DALL-E, I mean, obviously I just released a movie, so I’m very curious on how we can take stills and translate those individuals and what those tools might look like.

Very personally passionate about those types of applications, and yeah, and either how professional or more consumer friendly. Is it like, are you going after prosumers, are you going after really hardcore coders that can actually do this? Or are you just really going after people that just need that almost like as easy as it, as it is to use a PowerPoint just to drop an image in? Like should it be that their focus?

And I think, you know, each of these different companies will figure out where their target audience is. But yeah, there’s definitely some ways where I would say I’m more in the prosumer to professional and I’m far more interested in those types of improvements to the tools.

Helen Todd: Yeah. Oh, thank you for sharing. I’m sure a lot of people will be playing and experimenting. If they haven’t, we encourage you to after listening. Well, one thing that you had said is, you know, these tools really are kind of opening up this like democratization of access to being creative.

So I was wondering if you could kind of talk to that and like, even, you know, you said that it was easy for you to bring your animated, I put easy in quotes. There was still a lot of work that went into CRITTERZ, but made it possible to bring to life, but that we’re gonna see a whole new crop of storytellers that we might not otherwise hear from. And like, that’s exciting.

Chad Nelson: Oh yeah. I mean, let’s face it, art school is not cheap. I think some people, you know, if we look at this planet at large, there’s, there’s a whole group of people that would just never even have access to go study. You know, to be able to walk into the Louvre or into the MoMA or any other museum or have the library and the access to all this visual imagery, for them to feel like, oh, wow, I could actually pursue this as a career.

I think some of these tools, it’s amazing because in a way, yes, ideas go from spark on the head to something that could look professional grade in moments, which means there are probably millions of people in this world who never thought of themselves as an artist who suddenly now are creating artwork.

Now what they do with it, and if it turns into a professional career or not, who knows? But I just think it’s gonna open up far more doors in the visual art world than anything that we’ve had to date.

And I think that’s per, I mean, as you mentioned, photography. The iPhone has made more photographers than, and people now are far more passionate about, oh, I gotta get the right picture and, you know, and let me, and they’re probably taking more pictures than they’ve ever taken in their lives thanks to the iPhone. I think more people are gonna create art because of these tools than ever before in human history, and I think that’s amazing.

The second side of it is, I think about a career. So we talked about like my career being roughly three decades. I think it’s pretty safe to say you could almost measure how much output any given individual based on the set of tools that we’ve had for these last 30 years.

You know, how much output could someone actually deliver in the, in you know, per decade? A student coming outta art school now with these tools, they might lap me in five years. They might literally lap my entire career output in under a decade. And so in their 30 year career, they might have gone through three lifetimes as an artist.

And to me that just is staggering because that just means people are going to allow them or they’re gonna have the ability to explore new ideas at a rate and a depth and breadth that, that we just haven’t had in human history. And I think, again, as an individual artist and creative, if I was starting my career with this, how exciting is that?

That is in, to me, that is like where I get the most joy in thinking about the young minds right now, where they’re gonna be, even just in 10 years, it’s gonna be incredible.

Helen Todd: Yeah, I got chills, listening to you as you said that. Yeah. I have an 11 year old niece who’s super creative and I’m so excited to see what she does. But also you’re not, you still have a long…

Chad Nelson: Yeah, no, no, no. I’m not at the end

Helen Todd: span of your

Chad Nelson: I’m not at the end

Helen Todd: I’m excited to see what you’re gonna do in the next decades too with, expanding your own portfolio.

Chad Nelson: I mean, just in this last year and just, I mean, we, DALL-E had, when I got access to DALL-E, it was in May, so it came out April 6th.

I had it in mid-May, so we’re not even, again, depending on when this comes out. But I haven’t, let’s just say it’s been a year. I have produced more art and more visuals and pushed myself creatively further than I’ve ever had in any single year in my life. Thanks to these tools. And to me, that has just been, it fuels the soul as a creative, it just feels like you’re, I mean, I love the idea, you know, some people are getting into fitness, you know, it’s like they need that, that kind of constant routine, if you will.

This has become my creative routine. It keeps my mind fresh. I give myself little daily challenges. In fact, that’s one of the reasons why I love Daily DALL-E so much, is I might just say, okay, what’s this week’s theme gonna be? Might just be completely outta my comfort zone. And I’m like, all right, well, let’s just dive in this week and let’s see if I can explore that theme, and create some imagery as best I can in that theme. And then I’ll post it and we’ll see what people say.

So in a way, it’s pushing me to explore my own creativity in ways that, again, I just didn’t think I would ever be doing in, you know, over a year ago, I would’ve never even have thought about this at all, like.

Helen Todd: Yeah. That’s so exciting. And I’d love for you to also share the story about one of the creators you came across who had the stroke.

Chad Nelson: Oh yeah

Helen Todd: Cuz I thought that was a really amazing story about how it opens up people to create things that might not otherwise as well.

Chad Nelson: Yeah, I mean, I’ve, numerous people have written me thanks to Daily DALL-E, and they, they’ll tell me their stories or they’ll tell me maybe their background and maybe why they’re inspired by my work.

And then I’ll see their work, and I’m blown away. And then I’m like, you’re inspiring me. I mean, it’s really more so the reverse. And so when you start meeting some, you know, various individuals from around the world, I mean, honestly from all around the world, you start to find out what is, what is this, why did they get into AI? Like you asked me in the very beginning, like, why did I get into it?

And so one individual that I have recently met was an artist, a practicing artist. They’re very into, you know, the physical arts and, and sculpture and so forth. And unfortunately, due to a stroke, lost the ability to really manipulate art with their hands and essentially lost that dexterity and that ability, and as you can imagine, as an artist, when that’s your whole life to that point, losing that would be very depressing.